Introduction

Amazon is the world’s largest e-commerce company as well as a significant technological enterprise. Amazon has an ever-growing presence in the digital sphere, whether it is through its dynamic e-commerce platform, intelligent virtual assistant Alexa, or the dependable cloud computing service AWS, and is constantly in need of data scientists who can stretch the company’s growth horizons through ingenious data-driven decisions.

There are four main types of Amazon data scientist roles:

- Business Intelligence/Data Analytics

This position is primarily responsible for forecasting, finding strategic opportunities, and offering educated and business-related insights. Data visualization tools such as Tableau, as well as data warehousing expertise, are frequently required.

- Researchers in Machine Learning

This position is primarily concerned with cutting-edge research in fields such as NLP, deep learning, video recommendations, streaming data analysis, social networks, and so on. In general, positions range from PhDs to internationally famous researchers.

- Data Scientists/Applied Scientists

The data scientist is the most common and broad role, delving into large data sets to construct simulation and experimentation systems at scale, optimise algorithms, and use cutting-edge technology throughout Amazon.

- Data Engineer

This is the group that creates tools or products that are used both within and outside of the firm. Consider AWS or Alexa. The role is quite similar to that of an ML Engineer. Object-oriented programming languages such as C++/Java are frequently required.

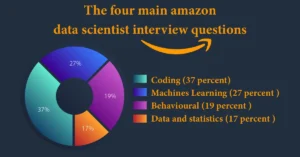

The four main categories of Amazon data scientist interview questions:

- Coding (37 percent)

- Machine Learning (27 percent)

- Behavioural (19 percent)

- Data and statistics (17 percent)

Amazon data scientists work in a variety of departments, including Amazon Web Services, Alexa, SCOT, logistics, and others. Because each function has unique duties, the interview questions you receive will reflect this. The Glassdoor data we utilised is generalised across all data scientist roles, so examine your recruiter’s preparation materials to see which areas will be most relevant for your position.

Amazon Data Scientist Interview Questions: Behavioral

Amazon uses its Leadership Principles every day, whether employees are discussing ideas for new projects or deciding on the best approach to solving a problem. It is one of the things that makes Amazon unique. All candidates are evaluated against the Leadership Principles. The best way to prepare for your interview is to consider how you’ve applied the Leadership Principles in your previous professional experience.

Amazon’s Leadership Principles:

- Customer Obsession

- Ownership

- Invent and Simplify

- Are Right, A Lot

- Learn and Be Curious

- Hire and Develop the Best

- Insist on the Highest Standards

- Think Big

- Bias for Action

- Frugality

- Earn Trust

- Dive Deep

- Have Backbone; Disagree and Commit

- Deliver Results

- Strive to be Earth’s Best Employer

- Success and Scale Bring Broad Responsibility

STAR method for Amazon data Scientist Interview Questions

According to Amazon’s ‘Bias for Action’ Leadership Principle, “speed matters in business.”

This mindset pervades their interviews as well.

Your interviewer will not enjoy rambling narratives that jump all over the place. They expect you to be straightforward and succinct while exhibiting depth of understanding and clarity of ideas.

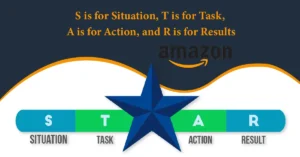

That’s why Amazon is open about its support for something called the STAR technique.

The STAR method is a strategy for answering these types of questions in a concise and comprehensive manner.

STAR is an abbreviation for:

S is for Situation, T is for Task, A is for Action, and R is for Results.

Assume your interviewer asks you one of the most popular Amazon behavioral interview questions: “Tell me about a time when you had to decide to make short-term sacrifices for long-term gains.”

You should use the STAR approach to get the most out of your answer and avoid lengthy or unclear answers.

- Describe the Situation

You’d start by quickly summarizing the situation that forced you to make such a decision.

What was the situation, how did you realize you needed to make the decision, and so on?

- Explain the Task

Explain what needed to be accomplished as a result of the decision to make short-term sacrifices.

Tasks are broad goals rather than specific actions.

- Describe your Action

What specific steps did you take to accomplish the task?

If your task was to learn more about user behaviour, your actions could include phone calls or questionnaires sent to users by email.

- Share the Results

Finally, discuss the outcomes that resulted from your actions.

In this example, you would highlight the long-term gains that your short-term sacrifices made possible.

STAR Interview Question and Answer Examples

Here’s an example of an Exponent community member’s response to the question “Tell me about a time you made a mistake.”

Situation: Let me tell you about a time when a website I managed began to perform slowly. Our mistake was that the problem went unnoticed until a user reported it to management. As project manager, I accepted full responsibility for the situation and worked with the engineering team to fix it immediately.

Task: This mistake showed me the value of focusing on and monitoring non-functional requirements, in addition to my regular focus on new feature development and adoption.

Action: After the quick fix was deployed, I made certain that such an error would not occur again. I did this by adopting a solid application management solution and configuring it to send email warnings as well as PagerDuty alerts when website activity exceeds predefined thresholds/SLAs.

I also took the time to master the tool myself in order to better investigate previous issues and identify optimization areas for engineering.

Result: As a result of that effort, we are able to demonstrate consistent page load times of less than 3 seconds. In a brown bag session, I also shared my learnings with the other PMs on my team so that they could benefit as well.

Using this strategy, your interviewer will discover a lot about you in a short period of time. This response goes above and beyond what an HR manager may have included in the job description.

Behavioral interview questions are frequently focused towards prior circumstances that are difficult, nuanced, or require context to fully comprehend.

However, the STAR technique eliminates the need to worry about leaving anything crucial out or wasting the interviewer’s time with a lengthy response.

Frequently Asked Amazon Data Scientist Interview Questions

- Why does Amazon exist?

- Tell me about a moment when you messed up at work. What did you take away from it?

- Tell me about a problem you encountered. What was your role and what was the outcome?

- Tell me about a time when you had a disagreement with a coworker, manager, or decision.

- Tell me about a time when you had to work quickly or make a choice under pressure.

- Tell me about a moment when you had to alter your strategy because you were about to miss a deadline.

- Tell me about an instance when you had to make a quick judgement without access to data. What was the result, and would you do anything differently next time?

- Tell me about an instance when you implemented a feature that had recognized risks.

- Have you ever had a situation where the deadline for a project was earlier than expected? How did you handle it, and what was the outcome?

- Tell me about a time at work when you did something that wasn’t your responsibility or was not in your job description.

- Tell me about an instance when you went above and beyond your work responsibilities to assist the firm.

- Tell me about a time when you had to make a big choice without consulting your supervisor.

- How would you improve Amazon.com?

- Tell me about one of your projects in which you prioritized the customer.

- Tell me about a moment when you had to deal with an obstinate customer.

- Who has the best customer service, and why?

Beginner Amazon Data Scientist Interview Questions

Here are a few fundamental Amazon interview questions for data scientists that will be useful to beginners and freshers:

Q1. Describe Data Science.

Solution:

Data Science is a collection of tools, methods, and Machine Learning principles. The primary purpose is to uncover hidden patterns in raw data.

Q2. Explain the difference between supervised and unsupervised learning.

Solution:

Supervised Learning:

- The input data is labelled.

- It makes use of a training data set.

- It enables classification and regression.

- It is used for prediction.

Unsupervised Learning:

- The input data is unlabelled.

- It makes use of the input data set only.

- It enables clustering, density estimation, and dimensionality reduction.

- It is used for analysis.

Q3. What are the different kinds of selection bias?

Solution:

The four types of selection bias are as follows:

- Sampling Bias

- Time interval

- Data

- Attrition

Q4. What is the purpose of using a summary function?

Solution:

A summary function summarizes all of the numerical data in a data frame. In pandas, for example, calling describe() on a DataFrame summarizes every numeric column, and calling it on a single column summarizes just that column. For numeric data it reports the following values:

- Count

- Mean

- Standard Deviation

- Minimum

- 25%

- 50%

- 75%

- Maximum
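As a rough illustration of what such a summary contains, the sketch below recomputes the same eight values using only Python's standard library. The helper name `summarize` and the sample data are made up for this example; in practice you would simply call describe() on your data frame.

```python
import statistics

def summarize(values):
    """Return the eight summary statistics listed above for a list of numbers."""
    # method="inclusive" interpolates linearly between the observed data points.
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),  # sample standard deviation (n - 1)
        "min": min(values),
        "25%": q1,
        "50%": q2,
        "75%": q3,
        "max": max(values),
    }

# Hypothetical sample data.
summary = summarize([1, 2, 3, 4, 5])
for name, value in summary.items():
    print(f"{name:>5}: {value}")
```

The 50% row is the median, so comparing it with the mean gives a quick hint about skew in the column.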

Q5. What is the significance of R in data visualization?

Solution:

R is widely used in data visualization because of its built-in functions and libraries. These libraries include ggplot2, leaflet, and lattice. R is also useful for exploratory data analysis and feature engineering.

Additional Amazon Data Scientist Interview Questions for New Graduates

- Tell us about Amazon Web Services.

- Was there ever a time when you had to deal with uncertainty?

- Have you ever worked as part of a group?

- On what project did you work?

- What are your time management strategies?

- What exactly is a confusion matrix?

- Explain Markov Chains.

- What is the difference between a true positive rate and a false positive rate?

- What exactly is dimension reduction?

- How are RMSE and MSE computed for a linear regression model?

Amazon Data Scientist Interview Questions: Machine Learning

Q1. What exactly is Machine Learning?

Solution:

Machine learning is the study and construction of algorithms that learn from data and make predictions based on it. It is closely related to computational statistics. It is used to create complex models and algorithms that support business predictions through predictive analytics.

Q2. Define “Naive” in the context of Naive Bayes.

Solution:

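The Naive Bayes algorithm is based on Bayes' theorem, which describes the probability of an event based on prior knowledge of the conditions related to that event. It is called “naive” because it assumes that the features are conditionally independent of one another given the class, an assumption that rarely holds exactly in real data.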

Q3. What exactly is pruning?

Solution:

Pruning is a Machine Learning and search algorithm strategy for reducing the size of decision trees. It prunes branches of the tree that have minimal power to classify cases. Pruning refers to the removal of sub-nodes from a decision node.

Q4. What exactly is Linear Regression?

Solution:

Linear regression is a statistical technique in which the score of a variable Y is predicted from the score of a second variable X. Here, X is the predictor variable and Y is the criterion variable.
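To make the idea concrete, here is a minimal sketch of simple (one-predictor) linear regression fitted by ordinary least squares in plain Python. The function name `fit_line` and the data points are illustrative assumptions, not part of any particular library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data that lies exactly on the line y = 2x + 1.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
slope, intercept = fit_line(xs, ys)

# Use the fitted line to predict Y for a new value of X.
predicted = slope * 6 + intercept
print(slope, intercept, predicted)
```

With noisy real data the points will not sit exactly on the line, and the fitted slope and intercept are the values that minimise the sum of squared residuals.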

Q5. Describe the disadvantages of a linear model.

Solution:

The linear model has the following drawbacks:

- It assumes linearity of the errors.

- It cannot be used for binary or count outcomes.

- It cannot address overfitting problems on its own.

Q6. What is the bias-variance trade-off?

Solution:

Bias: Bias is the error introduced in your model when the machine learning algorithm is oversimplified. It can lead to underfitting. When you train such a model, it makes simplifying assumptions in order to make the target function easier to learn.

Low-bias machine learning algorithms: Decision Trees, k-NN, and SVM.

High-bias machine learning algorithms: Linear Regression and Logistic Regression.

Variance: Variance is the error introduced in your model when the machine learning algorithm is too complex; your model learns noise from the training data set and performs poorly on the test data set. It can lead to overfitting and high sensitivity.

Typically, as the complexity of your model increases, you will see a drop in error due to decreasing bias in the model. However, this only lasts until a certain point. As you continue to make your model more complex, you wind up over-fitting it, and your model suffers from high variance. The trade-off is to choose a level of complexity at which both bias and variance are acceptably low.

Additional Amazon Data Scientist Interview Questions: Machine Learning

- Explain the Decision Tree Algorithm briefly.

- What is the difference between entropy and information gain in the Decision Tree Algorithm?

- Distinguish between regression and classification machine learning approaches.

- What exactly are Recommender Systems?

- How should outlier values be handled?

- What are the different stages of an analytics project?

- How do you handle missing values during analysis?

- In a clustering algorithm, how will you define the number of clusters?

- Explain Ensemble learning.

- How do you create a random forest?

Amazon Data Scientist Interview Questions : Coding

Q1. What Exactly Is Python?

Solution:

Python, like PHP and Ruby, is an open-source interpreted language featuring automatic memory management, exceptions, modules, objects, and threads.

Python’s advantages include its ease of use, portability, versatility, and built-in data structures. Because it is open-source, it has a large community behind it. Python is well suited to object-oriented programming. Because it is dynamically typed, you do not have to specify the types of variables when declaring them. Unlike C++, it has no access specifiers such as public or private. Python functions are first-class objects, which simplifies many complex tasks. While you can write code rapidly, it typically runs more slowly than code written in compiled languages.

Q2. What Python native data structures can you name?

Solution:

Python’s most common native data structures are as follows:

- Dictionaries

- Lists

- Sets

- Strings

- Tuples

Q3. Which data structures are mutable and which are immutable?

Solution:

Lists, dictionaries, and sets are mutable. This means you can modify their contents without changing their identity. Strings and tuples are immutable because their contents cannot be changed once they have been created.
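The difference can be checked directly with the built-in id() function. The following is a small sketch with made-up variable names: it shows a list keeping its identity while its contents change, and a tuple and a string refusing in-place changes.

```python
numbers = [1, 2, 3]
identity_before = id(numbers)
numbers.append(4)  # modifies the list in place
same_object = id(numbers) == identity_before  # still the same object

point = (1, 2, 3)
try:
    point[0] = 99  # tuples do not support item assignment
    tuple_changed = True
except TypeError:
    tuple_changed = False

greeting = "hello"
shouted = greeting.upper()  # string methods return a new string

print(same_object, tuple_changed, greeting, shouted)
```

Because strings are immutable, `greeting` is left untouched and `upper()` hands back a separate object.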

Q4. What Is the Difference Between a List and a Dictionary?

Solution:

In Python, a list and a dictionary are two distinct types of data structure. Lists are the most common and most versatile data type. A list stores a mutable sequence of items (so it can be modified after it is created). The items are kept in a specific order, so they can be accessed by index or iterated over (although iterating over a long list can take some time). As an example:

    >>> a = [1, 2, 3]
    >>> a[2] = 4
    >>> a
    [1, 2, 4]

A list cannot be used as a “key” for a dictionary in Python, because lists are not hashable (technically, you could hash the list's contents with your own custom hash function and use the result as a key).

In its most basic form, a Python dictionary is a collection of key-value pairs (unordered in older versions of Python; since Python 3.7, dictionaries preserve insertion order). Because dictionaries are optimised for data retrieval, they are an ideal tool for working with large amounts of data (but you have to know the key to retrieve its value). A dictionary can also be described as a hash table implementation and a key-value store. You can rapidly look up anything by its key, but the keys must be hashable. Dictionaries are defined within curly braces in Python, with each item being a pair of the form key:value.

Q5. What Are the Typical Characteristics of Elements in a List and a Dictionary?

Solution:

Elements in a list retain their order unless they are explicitly re-sorted. They can be of any data type, either all the same type or mixed. List elements are always accessed by numeric, zero-based indices. Each entry in a dictionary has a key and a value. Since Python 3.7 dictionaries preserve insertion order, but their elements are accessed by key rather than by position. Use a list whenever you have an ordered collection of items, and a dictionary whenever you have a set of unique keys that map to values.

Q6. Is It Possible to Get a List of All the Keys in a Dictionary? If so, how would you go about it?

Solution:

Yes. If the interviewer asks this question, try to be as specific as possible in your response.

To get all the keys in a dictionary, use the keys() method, which returns a view of the keys; wrap it in list() if you need an actual list:

    >>> mydict = {'a': 1, 'b': 2, 'c': 3, 'e': 5}
    >>> mydict.keys()
    dict_keys(['a', 'b', 'c', 'e'])
    >>> list(mydict.keys())
    ['a', 'b', 'c', 'e']

Q7. Can you define list or dict comprehension?

Solution:

List comprehensions are used to create a new list from other iterables. Because a list comprehension returns a list, it is written inside square brackets that contain the expression to be evaluated for each element, together with the for loop that iterates over the elements.

An example of the basic syntax: new_list = [expression for item in iterable if condition]. List comprehensions can make for loops much more concise, because you can often write them in a single line of code. Comprehensions, however, are not limited to lists. Dictionaries, which are commonly used in data science, also support comprehensions.

If you have to take a practical test to demonstrate your knowledge and skills, remember that a Python list is denoted by square brackets [].

Curly braces, on the other hand, denote a dictionary. A dict comprehension follows the same principle and is defined with similar syntax, but the expression must be a key:value pair.
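A short sketch of both kinds of comprehension, next to the explicit loop they replace, may help. The variable names and numbers are arbitrary.

```python
numbers = [1, 2, 3, 4, 5, 6]

# List comprehension: squares of the even numbers only.
even_squares = [n * n for n in numbers if n % 2 == 0]

# Dict comprehension: the expression is a key: value pair.
square_by_number = {n: n * n for n in numbers}

# The equivalent explicit for loop, for comparison.
even_squares_loop = []
for n in numbers:
    if n % 2 == 0:
        even_squares_loop.append(n * n)

print(even_squares)
print(square_by_number)
```

Both forms build the same list; the comprehension is usually preferred when the body is a single expression, and the explicit loop when the logic needs several statements.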

Q8. In Python, when would you use a List, a Tuple, or a Set?

Solution:

A list is a common and versatile data type. It holds a mutable sequence of objects, making it an excellent choice when you need to store items that may be changed later. In Python, a tuple is comparable to a list, but the main difference is that tuples are immutable. They also take up less space than lists and, unlike lists, can be used as dictionary keys. Tuples are an excellent choice for a collection of constants. Sets are collections of distinct elements.

Sets are a smart option when you want to avoid duplicate elements. For example, when two lists have entries in common, you can use sets to remove the duplicates or to find the shared entries.
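The sketch below illustrates these points with invented data: a tuple used as a dictionary key, a list rejected as a key, and sets used to drop duplicates and compare two lists.

```python
# A tuple can serve as a dictionary key because it is immutable and hashable.
city_by_coordinates = {
    (32.72, -117.16): "San Diego",
    (37.77, -122.42): "San Francisco",
}

# A list cannot be used as a key: Python raises TypeError (unhashable type).
try:
    bad = {[32.72, -117.16]: "San Diego"}
    list_key_allowed = True
except TypeError:
    list_key_allowed = False

# Sets remove duplicates and support intersection and difference.
first = [1, 2, 2, 3, 4]
second = [3, 4, 4, 5]
unique_first = set(first)
common = set(first) & set(second)
only_in_first = set(first) - set(second)

print(city_by_coordinates[(32.72, -117.16)], list_key_allowed)
print(unique_first, common, only_in_first)
```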

Q9. What Is the Difference Between a For Loop and a While Loop?

Solution:

The choice between the two is less a matter of preference than of what you are iterating over and whether you know the number of iterations in advance.

For loop: The for loop is the most common kind of loop in Python (and in nearly every other programming language). It iterates over the elements of an iterable such as a list, dictionary, or string, for example: for item in my_list: ... Combined with range(), a for loop can also run a fixed number of iterations with a given (positive or negative) step. Note that the step is 1 by default.

While loop: A while loop keeps running as long as its condition is true, so it can run an indefinite number of iterations, for example: while condition: ... When the condition becomes false, control passes to the line immediately following the loop. If you need to know how many times the loop has run, you must maintain a counter explicitly.

A while loop does not define a loop variable of its own. Any variable it uses must be defined beforehand, and it persists after the loop ends. A while loop that advances a counter by hand is also typically slower in Python than the equivalent for loop, because the condition is re-evaluated before every iteration and the counter is updated in Python code. Prefer a for loop when iterating over a collection or a known range, and a while loop when the stopping point depends on a condition that is only known at run time.
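As a minimal sketch of the two loop styles (the data and variable names are only illustrative):

```python
items = ["a", "b", "c"]

# for loop: iterate directly over the elements of a list.
visited = []
for item in items:
    visited.append(item)

# for loop with range(): a fixed number of iterations with a negative step.
countdown = []
for i in range(10, 0, -2):
    countdown.append(i)

# while loop: repeat until the condition stops holding.
# The counter has to be created and updated by hand.
value = 100
halvings = 0
while value > 1:
    value //= 2
    halvings += 1

print(visited, countdown, halvings)
```

The while loop is the natural fit for the last case because the number of halvings is not known before the loop starts.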

Here are some of the most frequently asked Amazon data scientist coding interview questions:

- Explain JOINs and SQL.

- What is the most complex query you’ve ever created?

- Can you write SQL code that computes month-to-month user retention?

- You are given a list of integers and must locate a certain element. Which algorithm are you going to use?

- You have a long sorted list and a short sorted list of four elements. Which algorithm would you use to search the long list for the four elements?

- Create a Python function that returns the first N Fibonacci numbers.

- What steps are involved in improving a low-precision classification model?

- You are given monthly time-series data with a huge number of records. How would you find the differences between this month and the previous month?

- How do you look for missing data?

- When is missing data inspection necessary?
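For the Fibonacci question in the list above, one possible answer is sketched here. It assumes the sequence starts at 0 (some interviewers start at 1, so it is worth asking), and the function name is just a suggestion.

```python
def first_n_fibonacci(n):
    """Return a list with the first n Fibonacci numbers, starting from 0."""
    result = []
    a, b = 0, 1
    for _ in range(n):
        result.append(a)
        a, b = b, a + b  # advance to the next pair in the sequence
    return result

print(first_n_fibonacci(10))
```

This iterative version runs in linear time and avoids the repeated work of a naive recursive solution.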

Amazon Data Scientist Interview Questions : Deep Learning

Q1. What is the distinction between Machine Learning and Deep Learning?

Solution:

In computer science, machine learning allows a computer to learn without explicit programming. It is divided into the following categories:

- Supervised Machine Learning

- Reinforcement Learning

- Unsupervised Machine Learning

Deep Learning makes use of a complicated set of algorithms that are modelled after the human brain. This enables the processing of unstructured data such as documents, photos, and text.

Q2. Why do you believe Deep Learning is becoming more popular?

Solution:

Deep Learning has been around for a long time, but the major breakthroughs with this technology have come only recently. This is due to two factors:

- The increase in the amount of data generated by various sources.

- The growth in the hardware resources needed to run these models.

Q3. Define reinforcement learning.

Solution:

Reinforcement learning is learning what to do, that is, how to map situations to actions, so as to maximize a numerical reward signal. It is inspired by the way humans learn and is based on a reward/penalty mechanism.

Q4. How does an Artificial Neural Network work?

Solution:

Artificial Neural Networks work on the same principle as biological neural networks. A network is made up of inputs that are processed through weighted sums and biases, with the help of activation functions.
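A single artificial neuron can be sketched as a weighted sum of its inputs plus a bias, passed through an activation function. The example below uses a sigmoid activation; the function names and the numbers are assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Forward pass of one neuron: activation(weighted sum + bias)."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)

# Hypothetical inputs and weights whose weighted sum is exactly 0.
output = neuron_output(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)
```

A full network stacks many such neurons in layers and learns the weights and biases during training.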

Q5. Describe the Cost Function.

Solution:

The Cost Function is sometimes known as the “loss” or “error” function. It is a metric used to assess the model’s performance. It is used to compute the output layer’s error during backpropagation.
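Mean squared error is one common choice of cost function for regression-style outputs. The sketch below computes it for a handful of made-up predictions; other tasks use other costs, such as cross-entropy for classification.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical targets and model outputs.
cost = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
print(cost)
```

A lower cost means the predictions are closer to the targets, which is what training tries to achieve.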

Additional Amazon Data Scientist Interview Questions: Deep Learning

- What exactly is Deep Learning?

- Explain the foundations of neural networks.

- Explain Neural Networks.

- What exactly are Hyperparameters?

- In Deep Learning, distinguish between Epoch, Batch, and Iteration.

- What are the different layers of a CNN?

- How does pooling work in a CNN?

- Explain the operation of the LSTM network.

- Define Gradient Descent.

- What exactly are exploding gradients?

Amazon Data Scientist Interview Questions : Statistics

Q1. What is the Central Limit Theorem and why is it important?

Solution:

Let us suppose we want to estimate the average height of all humans. It is impractical to collect data for every person on the planet. We can’t get height measurements from everyone in the population, but we can sample some people. The question now is, given a single sample, what can we say about the average height of the entire population? This is precisely what the Central Limit Theorem addresses: for a sufficiently large sample size, the distribution of the sample mean is approximately normal, centred on the population mean, regardless of the shape of the population’s own distribution.
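The theorem can be checked with a small simulation. The sketch below draws repeated samples from a skewed exponential population (mean 1, standard deviation 1) and looks at how the sample means behave; the sample size, number of samples, and seed are arbitrary choices made for this illustration.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

SAMPLE_SIZE = 50
NUM_SAMPLES = 2000

# Each sample mean comes from SAMPLE_SIZE draws of a skewed population.
sample_means = [
    statistics.mean([random.expovariate(1.0) for _ in range(SAMPLE_SIZE)])
    for _ in range(NUM_SAMPLES)
]

mean_of_means = statistics.mean(sample_means)
std_of_means = statistics.stdev(sample_means)
expected_std = 1.0 / SAMPLE_SIZE ** 0.5  # population std / sqrt(n)

print(round(mean_of_means, 3), round(std_of_means, 3), round(expected_std, 3))
```

The sample means cluster around the population mean of 1, with a spread close to the population standard deviation divided by the square root of the sample size, even though the population itself is far from normal.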

Q2. What exactly is sampling? How many different sampling techniques are there?

Solution:

Data sampling is a statistical analysis technique that selects, manipulates, and analyses a representative subset of data points in order to find patterns and trends in the larger data set under consideration. Sampling techniques are classified into two types: probability sampling and non-probability sampling.

Q3. What is the distinction between types I and II errors?

Solution:

A type I error occurs when the null hypothesis is true but is rejected. A type II error occurs when the null hypothesis is false but is not rejected.

Q4. What exactly is linear regression?

Solution:

Linear regression is a useful technique for quick predictive analysis: for example, the price of a house is affected by a variety of characteristics such as its size and location. To see the relationship between these variables, we fit a linear regression, which estimates the line of best fit between them and can help us determine whether two variables have a positive or negative association.

Q5. What assumptions must be made for linear regression?

Solution:

Four major assumptions must be made:

1. There is a linear relationship between the dependent variable and the regressors, meaning that the model you are building actually fits the data.

2. The errors, or residuals, are normally distributed and independent of one another.

3. There is minimal multicollinearity among the explanatory variables.

4. Homoscedasticity: the variance around the regression line is the same for all values of the predictor variables.

Q6. What exactly is a statistical interaction?

Solution:

In essence, an interaction occurs when the effect of one factor (input variable) on the dependent variable (output variable) varies across the levels of another factor.

Q7. What exactly is selection bias?

Solution:

Selection (or “sampling”) bias occurs when the sample data gathered and prepared for modelling has characteristics that are not representative of the true, future population of cases the model will see. Active selection bias occurs when a subset of the data is systematically (i.e., non-randomly) excluded from analysis.

Q9. Describe a data set with a non-Gaussian distribution.

Solution:

The Gaussian distribution is a member of the exponential family of distributions, but that family contains many other distributions that are just as easy to work with in many situations, and someone with a solid grounding in statistics can use them where appropriate. Waiting times between events, for example, often follow an exponential distribution, and counts of events in a fixed interval often follow a Poisson distribution.

Q10. What exactly is the Binomial Probability Formula?

Solution:

The binomial distribution consists of the probabilities of each of the possible numbers of successes on n trials for independent events that each have a probability P (often written with the Greek letter π) of occurring.

The binomial probability formula is as follows:

$$b(x; n, P) = \binom{n}{x} P^{x} (1 - P)^{n - x}$$

where:

b is the binomial probability.

x is the total number of “successes” (pass or fail, heads or tails, etc.).

P is the probability of success in an individual trial.

n is the number of trials.
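The formula translates directly into code. The sketch below uses math.comb for the number of combinations; the function name and the coin-flip numbers are assumptions made for the example.

```python
from math import comb

def binomial_probability(x, n, p):
    """Probability of exactly x successes in n independent trials."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

# Probability of exactly 2 heads in 4 flips of a fair coin.
prob_two_heads = binomial_probability(x=2, n=4, p=0.5)

# The probabilities over every possible number of successes sum to 1.
total = sum(binomial_probability(x, 4, 0.5) for x in range(5))

print(prob_two_heads, total)
```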

Q11. What exactly is statistical power?

Solution:

According to Wikipedia, the statistical power or sensitivity of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis (H0) when the alternative hypothesis (H1) is true.

In other words, statistical power is the likelihood that a study will detect an effect when one exists. The greater the statistical power, the less likely you are to commit a Type II error (concluding there is no effect when, in fact, there is).

Here are some of the most frequently asked Amazon data scientist statistics interview questions:

- What is the difference between point estimates and confidence intervals?

- Differentiate between univariate, bivariate, and multivariate analysis.

- Why do we generally use the Softmax nonlinearity function as the last operation in a network?

- What is regularization? Why is it useful?

- How can you generate a random number between 1 and 7 with only a die?

- What is a p-value?

- What is the goal of A/B Testing?

- Can you explain the difference between a Validation Set and a Test Set?

- Can you cite some examples where a false negative is more important than a false positive?

- What are Eigenvectors and Eigenvalues?

- What is Systematic Sampling?

- What is Cluster Sampling?

Amazon Data Scientist Interview Questions : Natural Language Processing

Q1. What exactly is lemmatization?

Solution:

Lemmatization is the process of determining a word’s lemma. So, what exactly is a lemma? The lemma is the root, or dictionary form, from which a word is derived.

For example, if we lemmatize ‘studies’ and ‘studying’, we get ‘study’ as the lemma in both cases. We arrive at this result by analysing the morphology of both words and looking them up in a dictionary, which maps each word form to its lemma.

Q2. What exactly is stemming?

Solution:

The process of reducing inflected (or sometimes derived) words to their word stem, base, or root form—generally a written word form—is known as stemming. A stemming programme, stemming algorithm, or stemmer is a computer programme or subroutine that stems words.

Q3. What is the distinction between Lemmatizing and Stemming?

Solution:

Stemming considers only the form of the word, whereas lemmatization also considers the word’s meaning. As a result, lemmatization always returns a valid word.

Many people assume that lemmatizing is the same as stemming. This is not the case. Lemmatization is a more thorough method for reducing a given string to its core lemma, and it is more involved than stemming. Note that stemming ‘studies’ yields ‘studi’, because the stemmer simply strips the suffix without taking the linguistic morphology into account.
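The contrast can be shown with a deliberately tiny toy example. The stemmer below just strips a few suffixes and the lemmatizer is a hand-made dictionary lookup; neither is a real algorithm such as the Porter stemmer, and a production system would rely on an NLP library instead.

```python
def toy_stem(word):
    """Strip a few common suffixes without checking the result is a real word."""
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

# Hypothetical hand-made dictionary standing in for a real lexical database.
LEMMA_DICTIONARY = {"studies": "study", "studying": "study"}

def toy_lemmatize(word):
    """Look the word up in the dictionary; fall back to the word itself."""
    return LEMMA_DICTIONARY.get(word, word)

words = ("studies", "studying")
stems = [toy_stem(w) for w in words]
lemmas = [toy_lemmatize(w) for w in words]

print(stems)
print(lemmas)
```

The stemmer produces the non-word 'studi' for 'studies', while the dictionary-based lookup returns the valid word 'study' for both forms.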

Q4. How does NER work?

Solution:

NER stands for Named Entity Recognition. It is also known as entity chunking or entity extraction, and it is a popular technique in information extraction for identifying and segmenting named entities and classifying or categorising them into predefined classes.

Basic NER identifies and locates entities in structured and unstructured texts. For example, rather than treating “Steve” and “Jobs” as separate tokens, NER recognises “Steve Jobs” as a single entity. More advanced NER systems can also classify the detected entities. In this scenario, NER not only recognises “Steve Jobs” but also categorises it as a person.

Q5. What is the purpose of Feature Scaling?

Solution:

Feature scaling, or standardization, is a data preprocessing step that is applied to the independent variables or features of the data. It essentially normalises the data within a particular range. It can also help speed up the calculations in an algorithm.
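Two common ways to scale a feature are min-max scaling, which maps values into the range 0 to 1, and standardization, which rescales to zero mean and unit standard deviation. The sketch below implements both in plain Python on an invented `ages` feature; the function names are illustrative.

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lowest, highest = min(values), max(values)
    return [(v - lowest) / (highest - lowest) for v in values]

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]

# Hypothetical feature values.
ages = [20, 30, 40, 50, 60]
scaled = min_max_scale(ages)
z_scores = standardize(ages)

print(scaled)
print([round(z, 3) for z in z_scores])
```

Both helpers assume the feature is not constant; a column with a single repeated value would cause a division by zero and needs special handling.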

Q6. What is the meaning of TF-IDF?

Solution:

TF*IDF is an information retrieval technique that weighs a term’s frequency (TF) against its inverse document frequency (IDF). Each word or term has its own TF and IDF score. The product of a term’s TF and IDF scores is known as its TF*IDF weight.

Simply put, the higher the TF*IDF score (weight), the rarer the term is across the corpus, and vice versa.

Words with high TF-IDF values indicate a strong association with the document in which they appear, implying that if that term appears in a query, the document may be of interest to the user.

The weight W_{t,d} of a term t in document d is calculated as follows:

$$W_{t,d} = TF_{t,d} \cdot \log\left(\frac{N}{DF_t}\right)$$

Where:

TF_{t,d} is the number of occurrences of t in document d.

DF_t is the number of documents containing the term t.

N is the total number of documents in the corpus.
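The weight formula can be sketched in a few lines of plain Python. The three-document corpus and the function name below are invented for illustration, and real libraries often apply additional smoothing or normalisation that this sketch leaves out.

```python
import math

def tf_idf(term, document, corpus):
    """Weight of `term` in `document`: TF * log(N / DF)."""
    tf = document.count(term)                     # occurrences of the term in this document
    df = sum(1 for doc in corpus if term in doc)  # documents containing the term
    if df == 0:
        return 0.0  # the term never appears in the corpus
    return tf * math.log(len(corpus) / df)

# Hypothetical corpus; each document is a list of lowercase words.
corpus = [
    "the weather in san diego is pleasant".split(),
    "it is raining in san francisco".split(),
    "the cat sat on the mat".split(),
]

weight_weather = tf_idf("weather", corpus[0], corpus)  # appears in 1 of 3 documents
weight_the = tf_idf("the", corpus[0], corpus)          # appears in 2 of 3 documents

print(round(weight_weather, 4), round(weight_the, 4))
```

The rarer word gets the larger weight, which matches the intuition that uncommon terms say more about a document than common ones.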

Visit the link below for additional information.

https://www.onely.com/blog/what-is-tf-idf/

Q7. What exactly is an n-gram in NLP?

Solution:

An N-gram is a sequence of N words. “Medium blog,” for example, is a 2-gram (a bigram), “A Medium blog post” is a 4-gram, and “Write on Medium” is a 3-gram (trigram). An N-gram model, in essence, predicts the occurrence of a word based on the occurrence of its N – 1 preceding words.

Let’s look at how to assign a probability to the next word in a word sequence. First, we need a large sample of English sentences (called a corpus).

For the sake of illustration we’ll consider a tiny sample of sentences, although a real corpus would be far larger. Assume our corpus includes the following sentences:

Thank you, he said.

As he stepped through the door, he said good-by.

He travelled to San Diego.

The weather in San Diego is pleasant.

It is raining in San Francisco.

Assume we have a bigram model, so we calculate a word’s probability based solely on the word that precedes it. In general, this probability is (the number of times the preceding word ‘wp’ occurs immediately before the word ‘wn’) / (the total number of times ‘wp’ occurs in the corpus):

P(wn | wp) = count(wp wn) / count(wp)

Let’s use an example to illustrate this.

To calculate the probability of the word “you” following the word “thank,” we use the conditional probability P(you | thank).

This is equal to:

(number of times “Thank you” appears) / (number of times “Thank” appears) = 1/1 = 1

The model therefore predicts with certainty that whenever the word “Thank” appears, it will be followed by the word “you.” This is only because we trained on just five sentences, in which “Thank” appeared once, in the context of “Thank you.”
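The counting behind this estimate can be sketched as follows, using the five-sentence sample corpus. The regex tokeniser (lower-casing and keeping only runs of letters) and the `bigram_probability` helper are assumptions made for illustration; no smoothing is applied, so unseen bigrams get probability zero.

```python
import re
from collections import Counter

corpus = [
    "Thank you, he said.",
    "As he stepped through the door, he said good-by.",
    "He travelled to San Diego.",
    "The weather in San Diego is pleasant.",
    "It is raining in San Francisco.",
]

def tokenize(sentence):
    """Lower-case the sentence and keep only alphabetic runs as tokens."""
    return re.findall(r"[a-z]+", sentence.lower())

unigram_counts = Counter()
bigram_counts = Counter()
for sentence in corpus:
    tokens = tokenize(sentence)
    unigram_counts.update(tokens)
    # Pair each token with its successor inside the same sentence.
    bigram_counts.update(zip(tokens, tokens[1:]))

def bigram_probability(previous, word):
    """Estimate P(word | previous) = count(previous word) / count(previous)."""
    if unigram_counts[previous] == 0:
        return 0.0
    return bigram_counts[(previous, word)] / unigram_counts[previous]

p_you_given_thank = bigram_probability("thank", "you")
p_diego_given_san = bigram_probability("san", "diego")
```

With this corpus “san” occurs three times and is followed by “diego” twice, so the second estimate is two thirds, which shows how the probability mass is split once a word has more than one observed successor.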

https://blog.xrds.acm.org/2017/10/introduction-n-grams-required/

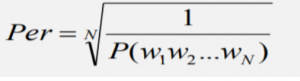

Q8. What exactly is Perplexity in NLP?

Solution:

The word “perplexed” means “puzzled” or “confused,” so perplexity in general denotes an inability to deal with something complicated or poorly specified. In NLP, perplexity is a measure of how uncertain a model is when predicting a piece of text.

Perplexity is used to evaluate language models. Low perplexity is good, because it means the model is rarely surprised by the text it sees; high perplexity is bad, because it means the model predicts the text poorly.

Points to consider:

- Perplexity can be read as the average branching factor, that is, the average number of equally likely choices the model faces when predicting the next word.

- Lower is better (lower perplexity = higher probability assigned to the text).

For a text $W = w_1 w_2 \dots w_N$, perplexity is the inverse probability of the text normalised by the number of words:

$PP(W) = P(w_1 w_2 \dots w_N)^{-1/N}$

where $N$ is the total number of words.

https://towardsdatascience.com/perplexity-intuition-and-deviation-105dd481c8f3
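As a rough sketch, perplexity can be computed from the probabilities a model assigned to each word of a text, taking perplexity to be the inverse probability of the text normalised by its number of words. The `perplexity` function name and the probability values below are made up for illustration; the sum of logarithms is used instead of a direct product to avoid numerical underflow on long texts.

```python
import math

def perplexity(word_probabilities):
    """Perplexity of a text given the model's probability for each of its words.

    Equivalent to (p1 * p2 * ... * pN) ** (-1 / N), computed in log space.
    """
    n = len(word_probabilities)
    log_prob = sum(math.log(p) for p in word_probabilities)
    return math.exp(-log_prob / n)

# A model that always hesitates between four equally likely words.
uniform = perplexity([0.25, 0.25, 0.25, 0.25])

# A more confident model (hypothetical per-word probabilities).
confident = perplexity([0.9, 0.8, 0.9, 0.7])
```

The uniform case comes out at approximately 4, matching the “average branching factor” reading, and the more confident model scores lower.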

Q9. In NLP, what is Pragmatic Ambiguity?

Solution:

Ambiguity, as the term is used in natural language processing, is the ability of a word or sentence to be understood in more than one way. Natural language is rife with ambiguity. The following types of ambiguity exist in NLP.

- Lexical ambiguity

Lexical ambiguity is the ambiguity of a single word. For example, the word “silver” can be used as a noun, an adjective, or a verb.

- Syntactic ambiguity

This type of ambiguity occurs when a sentence can be parsed in more than one way. For example, in “The man saw the girl with the telescope,” it is unclear whether the man saw a girl who was carrying a telescope or saw the girl through his telescope.

- Semantic ambiguity

This type of ambiguity occurs when the meaning of the words themselves can be interpreted in more than one way, for instance when a sentence contains an ambiguous word or phrase. For example, “The car hit the pole while it was moving” can be interpreted as “The car hit the pole while the car was moving” or “The car hit the pole while the pole was moving.”

- Anaphoric ambiguity

This type of ambiguity is caused by the use of anaphoric expressions, such as pronouns, in discourse. For instance: “The horse galloped up the hill. It was really steep. It quickly grew fatigued.” In each of the last two sentences, the reader has to work out from context whether “it” refers to the horse or to the hill.

- Pragmatic ambiguity

This type of ambiguity occurs when the context of a phrase allows several interpretations, that is, when the statement is not specific. For example, “I like you too” can mean “I like you (just as you like me)” or “I like you (just as someone else does).”

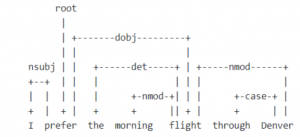

Q10. Describe Dependency Parsing.

Solution:

Dependency parsing is the task of extracting a dependency parse of a sentence: a representation of its grammatical structure that defines the relationships between “head” words and the words that modify those heads.

Example:

In a dependency diagram, directed, labelled arcs drawn above the sentence run from heads to dependents (+ identifies the dependent) and depict the relationships between the words.

Reference: https://medium.com/@5hirish/dependency-parsing-in-nlp-d7ade014186

Q11. What exactly is Pragmatic Analysis?

Solution:

Pragmatic analysis is a step in the extraction of information from text. It takes a structured representation of the text and works out what was actually meant.

Why does this matter? Because much of the meaning of a text depends on the context in which it was said or written. Ambiguity, and reducing it, is at the heart of natural language processing, so pragmatic analysis is vital for retrieving meaning or information.

Frequently Asked Questions

HirePro is a recruitment platform used for the virtual screening of candidates through various kinds of tasks. You receive the score or result of your assessment by email.

Amazon’s interview procedure can be difficult. The good news is that it is quite consistent. Because the framework of the interview is known ahead of time, preparation is much easier and there are fewer surprises.

The on-site interview is divided into five rounds, each lasting one hour. You will begin with five minutes of introductions, followed by 50 minutes of interview time, followed by five minutes for any questions you may have for your interviewer.

Amazon employs the second-largest number of data workers of any company, with more than 18 thousand.

Amazon assigns the following years of experience to each level:

- Level 4: 1-3 years of experience.

- Level 5: 3-10 years of experience.

- Level 6: 8-10 years of experience.

- Level 7: 10 or more years of experience. Even at this level, Amazon likes to promote from within and seldom hires outside talent.

By far the majority of your information is collected and stored by Google. This is hardly unexpected given that their business strategy is based on having as much data as possible (and making it super easy for you to access).

Every data scientist works with different kinds of data or on different product enhancements, depending on what product development needs most at the time. An Amazon data scientist may work on Alexa, AWS, cloud computing, and so on.

First you need to build the essential knowledge and gain around 10 or more years of experience. Then you can become a senior data scientist.