Data science and machine learning are the key technologies when it comes to the processes and products with automatic learning and optimization to be used in the automotive industry of the future. This article defines the terms data science (also referred to as data analytics) and machine learning and explains how they are related. In addition, it defines the term optimizing analytics and illustrates the role of automatic optimization as a key technology in combination with data analytics. It also uses examples to explain how these technologies are currently being used in the automotive industry on the basis of the major subprocesses in the automotive value chain (development, procurement, logistics, production, marketing, sales and after-sales, connected customer). Since the industry is only starting to explore the broad range of potential applications for these technologies, visionary application examples are used to illustrate the revolutionary possibilities that they offer. Finally, the article demonstrates how these technologies can make the automotive industry more efficient and enhance its customer focus throughout all its operations and activities, extending from the product and its development process to the customers and their connection to the service.

1 Introduction

Data science and machine learning are now key technologies in our everyday lives, as we can see in a multitude of applications, such as voice recognition in vehicles and on mobile phones, automatic facial and traffic sign recognition, as well as chess and, more recently, Go algorithms which humans can no longer beat. The analysis of large data volumes based on search, pattern recognition, and learning algorithms provides insights into the behavior of processes, systems, nature, and ultimately people, opening the door to a world of fundamentally new possibilities. In fact, the already implementable idea of autonomous driving is virtually a tangible reality for many drivers today with the help of lane keeping assistance and adaptive cruise control systems in the vehicle.

The fact that this is just the tip of the iceberg, even in the automotive industry, becomes readily apparent when one considers that, at the end of 2015, Toyota and Tesla’s founder, Elon Musk, each announced investments amounting to one billion US dollars in artificial intelligence research and development almost at the same time. The trend towards connected, autonomous, and artificially intelligent systems that continuously learn from data and are able to make optimal decisions is advancing in ways that are simply revolutionary, not to mention fundamentally important to many industries. This includes the automotive industry, one of the key industries in Germany, in which international competitiveness will be influenced by a new factor in the near future, namely the new technical and service offerings that can be provided with the help of data science and machine learning.

This article provides an overview of the corresponding methods and some current application examples in the automotive industry. It also outlines the potential applications to be expected in this industry very soon. Accordingly, sections 2 and 3 begin by addressing the subdomains of data mining (also referred to as “big data analytics”) and artificial intelligence, briefly summarizing the corresponding processes, methods, and areas of application and presenting them in context. Section 4 then provides an overview of current application examples in the automotive industry based on the stages in the industry’s value chain, from development to production and logistics through to the end customer. Building on these examples, section 5 describes the vision for future applications using three examples: one in which vehicles play the role of autonomous agents that interact with each other in cities, one that covers integrated production optimization, and one that describes companies themselves as autonomous agents.

Whether these visions will become a reality in this or any other way cannot be said with certainty at present. However, we can safely predict that the rapid rate of development in this area will lead to the creation of completely new products, processes, and services, many of which we can only imagine today. This is one of the conclusions drawn in section 6, together with an outlook regarding the potential future effects of the rapid rate of development in this area.

2 The data mining process

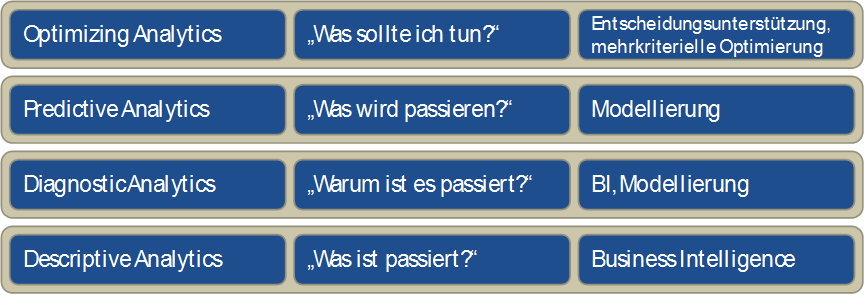

Gartner uses the term “prescriptive analytics” to describe the highest level of ability to make business decisions on the basis of data-based analyses. This is illustrated by the question “what should I do?”, and prescriptive analytics supplies the required decision-making support if a person is still involved, or automation if this is no longer the case.

The levels below this, in ascending order in terms of the use and usefulness of AI and data science, are defined as follows: descriptive analytics (“what has happened?”), diagnostic analytics (“why did it happen?”), and predictive analytics (“what will happen?”) (see Figure 1). The last two levels are based on data science technologies, including data mining and statistics, while descriptive analytics essentially uses traditional business intelligence concepts (data warehouse, OLAP).

In this article, we want to replace the term prescriptive analytics with the term optimizing analytics. The reason for this is that a technology can prescribe many things, while, in terms of implementation within a company, the goal is always to make something better with regard to target criteria or quality criteria. This optimization can be supported by search algorithms, such as evolutionary algorithms in nonlinear cases and operations research (OR) methods in (much rarer) linear cases. It can also be supported by application experts who take the results from the data mining process and use them to draw conclusions regarding process improvement. One good example is the decision trees learned from data, which application experts can understand, reconcile with their own expert knowledge, and then implement in an appropriate manner. Here too, the application is used for optimizing purposes, albeit with an intermediate human step.

Within this context, another important aspect is the fact that multiple criteria required for the relevant application often need to be optimized at the same time, meaning that multi-criteria optimization methods (or, more generally, multi-criteria decision-making support methods) are necessary. These methods can then be used to find the best possible compromises between conflicting goals. Examples include the frequently occurring conflicts between cost and quality, risk and profit, and, in a more technical example, between the weight and passive occupant safety of a body.
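The idea of a "best possible compromise" between conflicting goals can be made concrete with a Pareto front: the set of candidates that no other candidate beats in every objective at once. The following is only a minimal sketch under stated assumptions. The body designs, their weights, and their risk scores are invented for illustration, and both objectives are assumed to be minimized.

```python
from typing import List, Tuple

# (name, weight in kg, injury risk score); both objectives are minimized.
Design = Tuple[str, float, float]


def dominates(a: Design, b: Design) -> bool:
    """True if a is at least as good as b in both objectives and strictly better in one."""
    no_worse = a[1] <= b[1] and a[2] <= b[2]
    strictly_better = a[1] < b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep every design that is not dominated by any other design."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Hypothetical body designs; the numbers are made up for this example.
candidates: List[Design] = [
    ("A", 320.0, 0.12),
    ("B", 300.0, 0.15),
    ("C", 340.0, 0.10),
    ("D", 330.0, 0.14),  # heavier and riskier than A, so dominated
    ("E", 300.0, 0.18),  # same weight as B but riskier, so dominated
]

front = pareto_front(candidates)
print([name for name, _, _ in front])
```

The designs left on the front are the genuine trade-offs. Choosing among them is the decision-making support step, which a human expert or a weighting scheme would still have to perform.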

Figure 1: The four levels of data analysis usage within a company

These four levels form a framework within which it is possible to categorize data analysis competence and potential benefits for a company in general. This framework is depicted in Figure 1 and shows the four layers, which build upon each other, together with the respective technology category required for implementation.

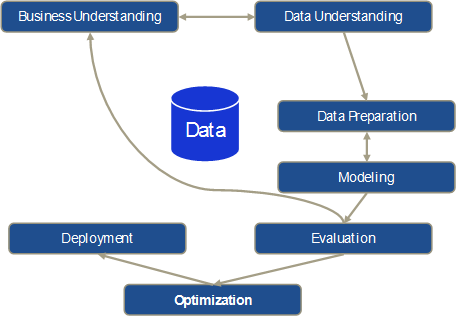

The traditional Cross-Industry Standard Process for Data Mining (CRISP-DM) includes no optimization or decision-making support whatsoever. Instead, based on the business understanding, data understanding, data preparation, modeling, and evaluation sub-steps, CRISP proceeds directly to the deployment of results in business processes. Here too, we propose an additional optimization step that in turn comprises multi-criteria optimization and decision-making support. This approach is depicted schematically in Figure 2.

Figure 2: Traditional CRISP-DM process with an additional optimization step

It is important to note that the original CRISP model deals with a largely iterative approach used by data scientists to analyze data manually, which is reflected in the iterations between business understanding and data understanding as well as data preparation and modeling. However, evaluating the modeling results with the relevant application experts in the evaluation step can also result in having to start the process all over again from the business understanding sub-step, making it necessary to go through all the sub-steps again partially or completely (e.g., if additional data needs to be incorporated).

The manual, iterative procedure is also due to the fact that the basic idea behind this approach – as up-to-date as it may be for the majority of applications – is now almost 20 years old and certainly only partially compatible with a big data strategy. The fact is that, in addition to the use of nonlinear modeling methods (in contrast to the usual generalized linear models derived from statistical modeling) and knowledge extraction from data, data mining rests on the fundamental idea that models can be derived from data with the help of algorithms and that this modeling process can run automatically for the most part – because the algorithm “does the work.”

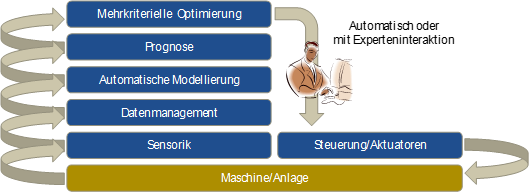

In applications where a large number of models need to be created, for example for use in making forecasts (e.g., sales forecasts for individual vehicle models and markets based on historical data), automatic modeling plays an important role. The same applies to the use of online data mining, in which, for example, forecast models (e.g., for forecasting product quality) are not only used constantly for a production process, but also adapted (i.e., retrained) continuously whenever individual process aspects change (e.g., when a new raw material batch is used). This type of application requires the technical ability to automatically generate data and to integrate and process it in such a way that data mining algorithms can be applied to it. In addition, automatic modeling and automatic optimization are necessary in order to update models and use them as a basis for generating optimal proposed actions in online applications. These actions can then be communicated to the process expert as a suggestion or, especially in the case of continuous production processes, be used directly to control the respective process. If sensor systems are also integrated directly into the production process to collect data in real time, this results in a self-learning cyber-physical system that facilitates implementation of the Industry 4.0 vision in the field of production engineering.

Figure 3: Architecture of an Industry 4.0 model for optimizing analytics

This approach is depicted schematically in Figure 3. Data from the system is acquired with the help of sensors and integrated into the data management system. Using this as a basis, forecast models for the system’s relevant outputs (quality, deviation from target value, process variance, etc.) are used continuously in order to forecast the system’s output. Other machine learning options can be used within this context in order, for example, to predict maintenance results (predictive maintenance) or to identify anomalies in the process. The corresponding models are monitored continuously and, if necessary, automatically retrained if any process drift is observed. Finally, the multi-criteria optimization uses the models to continuously compute optimum setpoints for the system control. Human process experts can also be integrated here by using the system as a suggestion generator, so that a process expert can evaluate the generated suggestions before they are implemented in the original system.
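The "monitor continuously, retrain on drift" part of this loop can be sketched in a few lines. This is an illustrative simplification, not the architecture from Figure 3: the forecast model is replaced by a hypothetical `MeanModel` that simply predicts the mean of its training data, drift is assumed to be detectable as a rolling mean absolute error above a fixed threshold, and the sensor readings are invented.

```python
from collections import deque
from statistics import mean
from typing import Deque, List


class MeanModel:
    """Stand-in forecast model: predicts the mean of its training targets."""

    def __init__(self) -> None:
        self.value = 0.0

    def fit(self, targets: List[float]) -> None:
        self.value = mean(targets)

    def predict(self) -> float:
        return self.value


def monitor(stream: List[float], window: int = 5, threshold: float = 1.0) -> List[int]:
    """Forecast each reading, track the rolling error, and retrain when it drifts.

    Returns the stream indices at which retraining was triggered.
    """
    model = MeanModel()
    model.fit(stream[:window])                    # initial training
    recent: Deque[float] = deque(maxlen=window)   # latest measurements
    errors: Deque[float] = deque(maxlen=window)   # latest absolute errors
    retrained_at: List[int] = []

    for i in range(window, len(stream)):
        y = stream[i]
        errors.append(abs(y - model.predict()))
        recent.append(y)
        # Drift check: a full window of errors whose mean exceeds the threshold.
        if len(errors) == window and mean(errors) > threshold:
            model.fit(list(recent))               # retrain on the latest window
            errors.clear()                        # restart monitoring for the new model
            retrained_at.append(i)
    return retrained_at


# Hypothetical process output: stable around 10, then shifted to about 13.
readings = (
    [10.0, 10.1, 9.9, 10.0, 10.0]
    + [10.0, 10.1, 9.9, 10.0, 10.1]
    + [13.0, 13.1, 12.9, 13.0, 13.0, 13.1]
)
events = monitor(readings)
print(events)
```

In a production setting the retrained model would then feed the optimization step that computes new setpoints. Here the function only reports when retraining happened, so that the monitoring logic stays visible.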

In order to differentiate it from traditional data mining, the term big data is frequently defined today with three (sometimes even four or five) essential characteristics: volume, velocity, and variety, which refer to the large volume of data, the speed at which data is generated, and the heterogeneity of the data to be analyzed, which can no longer be categorized into the conventional relational database schema. Veracity, i.e., the fact that large uncertainties may also be hidden in the data (e.g., measurement inaccuracies), and finally value, i.e., the value that the data and its analysis represent for a company’s business processes, are often cited as additional characteristics. So it is not just the pure data volume that distinguishes previous data analytics methods from big data, but also other technical factors that require the use of new methods, such as Hadoop and MapReduce, with appropriately adapted data analysis algorithms in order to allow the data to be saved and processed. In addition, so-called in-memory databases now also make it possible to apply traditional learning and modeling algorithms in main memory to large data volumes.

This means that if one were to establish a hierarchy of data analysis and modeling methods, then, in very simplistic terms, statistics would be a subset of data mining, which in turn would be a subset of big data. Not every application requires the use of data mining or big data technologies. However, a clear trend can be observed, which indicates that the necessities and possibilities involved in the use of data mining and big data are growing at a very rapid pace as increasingly large data volumes are being collected and linked across all processes and departments of a company. Nevertheless, conventional hardware architecture with additional main memory is often more than sufficient for analyzing large data volumes in the gigabyte range.

Although optimizing analytics is of tremendous importance, it is also crucial to always be open to the broad variety of applications when using artificial intelligence and machine learning algorithms. The wide range of learning and search methods, with potential use in applications such as image and language recognition, knowledge learning, and control and planning in areas such as production and logistics, among many others, can only be touched upon within the scope of this article.

3 The pillars of artificial intelligence

An early definition of artificial intelligence from the IEEE Neural Networks Council was “the study of how to make computers do things at which, at the moment, people are better.” Although this still applies, current research is also focused on improving the way that software does things at which computers have always been better, such as analyzing large amounts of data. Data is also the basis for developing artificially intelligent software systems not only to collect information, but also to:

- Learn

- Understand and interpret information

- Behave adaptively

- Plan

- Make inferences

- Solve problems

- Think abstractly

- Understand and interpret ideas and language

3.1 Machine learning

At the most general level, machine learning (ML) algorithms can be subdivided into two categories: supervised and unsupervised, depending on whether or not the respective algorithm requires a target variable to be specified.

Supervised learning algorithms

Apart from the input variables (predictors), supervised learning algorithms also require the known target values (labels) for a problem. In order to train an ML model to identify traffic signs using cameras, images of traffic signs, preferably with a variety of configurations, are required as input variables. In this case, light conditions, angles, soiling, etc. are compiled as noise or blurring in the data; nonetheless, it must be possible to recognize a traffic sign in rainy conditions with the same accuracy as when the sun is shining. The labels, i.e., the correct designations, for such data are normally assigned manually. This set of input variables and their correct classification constitute a training data set. Although we only have one image per training data set in this case, we still speak of multiple input variables, since ML algorithms find relevant features in training data and learn how these features and the class assignment for the classification task indicated in the example are associated. Supervised learning is used primarily to predict numerical values (regression) and for classification purposes (predicting the appropriate class), and the corresponding data is not limited to a specific format: ML algorithms are more than capable of processing images, audio files, videos, numerical data, and text. Classification examples include object recognition (traffic signs, objects in front of a vehicle, etc.), face recognition, credit risk assessment, voice recognition, and customer churn, to name but a few.

Regression examples include determining continuous numerical values on the basis of multiple (sometimes hundreds or thousands) input variables, such as a self-driving car calculating its ideal speed on the basis of road and ambient conditions, determining a financial indicator such as gross domestic product based on a changing number of input variables (use of arable land, population education levels, industrial production, etc.), and determining potential market shares with the introduction of new models. Each of these problems is highly complex and cannot be represented by simple, linear relationships in simple equations. Or, to put it another way that more accurately represents the enormous challenge involved: the necessary expertise does not even exist.
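To illustrate what "learning from input variables and known target values" means in the regression case, the following sketch uses k-nearest-neighbor regression, chosen here only because it fits in a few lines and makes no linearity assumption. It is not a method named in this article. The two input variables (road wetness and visibility), their scaling to the range 0 to 1, and all speed values are hypothetical.

```python
from math import dist
from typing import List, Sequence, Tuple

# (input variables, known target value)
Example = Tuple[Sequence[float], float]


def knn_predict(train: List[Example], x: Sequence[float], k: int = 3) -> float:
    """Predict a numerical value as the mean target of the k closest training examples."""
    nearest = sorted(train, key=lambda ex: dist(ex[0], x))[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical labeled data: (road wetness, visibility) -> suitable speed in km/h.
training_data: List[Example] = [
    ((0.0, 1.0), 100.0),
    ((0.1, 0.9), 95.0),
    ((0.2, 0.8), 90.0),
    ((0.8, 0.3), 50.0),
    ((0.9, 0.2), 45.0),
    ((1.0, 0.1), 40.0),
]

dry_speed = knn_predict(training_data, (0.05, 0.95))  # dry road, good visibility
wet_speed = knn_predict(training_data, (0.95, 0.15))  # wet road, poor visibility
print(dry_speed, wet_speed)
```

The same function would act as a classifier if the mean were replaced by a majority vote over the labels of the nearest examples.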

Unsupervised learning algorithms

Unsupervised learning algorithms do not focus on individual target variables, but instead have the goal of characterizing a data set in general. Unsupervised ML algorithms are often used to group (cluster) data sets, i.e., to identify relationships between individual data points (which can consist of any number of attributes) and group them into clusters. In certain cases, the output from unsupervised ML algorithms can in turn be used as an input for supervised methods. Examples of unsupervised learning include forming customer groups based on their buying behavior or market data, or clustering time series in order to group large numbers of time series from sensors into groups that were previously not obvious.
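Grouping customers by buying behavior, as mentioned above, can be sketched with k-means, one common clustering algorithm (the article does not prescribe a specific one). The customer data is invented, and the initial centroids are passed in explicitly so that the example is deterministic; real implementations usually pick them at random.

```python
from math import dist
from typing import List, Tuple

Point = Tuple[float, float]


def kmeans(points: List[Point], centroids: List[Point], iterations: int = 10) -> List[int]:
    """Plain k-means: alternate between assigning points and moving centroids."""
    centroids = list(centroids)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda c: dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return labels


# Hypothetical customers: (purchases per year, average basket value in EUR).
customers: List[Point] = [(2, 30), (3, 35), (2, 40), (20, 200), (22, 210), (19, 190)]
labels = kmeans(customers, centroids=[customers[0], customers[3]])
print(labels)
```

No target variable appears anywhere in the code: the group labels emerge from the data alone, and they could afterwards serve as an input feature for a supervised model.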

In other words, machine learning is the area of artificial intelligence (AI) that enables computers to learn without being programmed explicitly. Machine learning focuses on developing programs that grow and change by themselves as soon as new data is provided. Accordingly, processes that can be represented in a flowchart are not suitable candidates for machine learning. In contrast, everything that requires dynamic, changing solution strategies and cannot be constrained to static rules is potentially suitable for solution with ML. For example, ML is used when:

- No relevant human expertise exists

- People are unable to express their expertise

- The solution changes over time

- The solution needs to be adapted to specific cases

In contrast to statistics, which follows the approach of making inferences based on samples, computer science is interested in developing efficient algorithms for solving optimization problems, as well as in developing a representation of the model for evaluating inferences. Methods frequently used for optimization in this context include so-called “evolutionary algorithms” (genetic algorithms, evolution strategies), the basic principles of which emulate natural evolution. These methods are very efficient when applied to complex, nonlinear optimization problems.
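As a rough illustration of how an evolution strategy works, the sketch below implements a (1+1) evolution strategy: one parent is mutated with Gaussian noise, and the child replaces the parent only if it is no worse. The step-size adaptation follows the well-known 1/5 success rule. The objective function `sphere`, the start point, and all parameter values are assumptions made for this example; a real application would plug in a process or product model instead.

```python
import random
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Toy objective to be minimized (minimum 0 at the origin)."""
    return sum(v * v for v in x)


def one_plus_one_es(
    objective: Callable[[List[float]], float],
    start: List[float],
    sigma: float = 1.0,
    generations: int = 500,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """(1+1) evolution strategy: mutate the parent, keep the child if it is no worse."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    parent, parent_fit = list(start), objective(start)
    for _ in range(generations):
        # Mutation: add Gaussian noise with step size sigma to every coordinate.
        child = [v + rng.gauss(0.0, sigma) for v in parent]
        child_fit = objective(child)
        if child_fit <= parent_fit:
            # Selection: the child survives; success means larger steps are tried.
            parent, parent_fit = child, child_fit
            sigma *= 1.5
        else:
            # Failure: shrink the step size (1/5 success rule).
            sigma *= 1.5 ** -0.25
    return parent, parent_fit


start_point = [3.0, -2.0]
best_x, best_f = one_plus_one_es(sphere, start=start_point)
print(best_f)
```

Because only objective values are compared, the same loop works unchanged for nonlinear, non-differentiable objectives, which is the property that makes this family of methods attractive for optimization on top of learned models.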

Even though ML is used in certain data mining applications, and both look for patterns in data, ML and data mining are not the same thing. Instead of extracting data that people can understand, as is the case with data mining, ML methods are used by programs to improve their own understanding of the data provided. Software that implements ML methods recognizes patterns in data and can dynamically adjust its behavior based on them. If, for example, a self-driving car (or the software that interprets the visual signal from the corresponding camera) has been trained to initiate a braking maneuver when a pedestrian appears in front of it, this must work for all pedestrians regardless of whether they are short, tall, fat, thin, clothed, coming from the left, coming from the right, etc. In turn, the vehicle must not brake if there is a stationary garbage bin on the side of the road.

The level of complexity in the real world is often greater than the level of complexity of an ML model, which is why, in most cases, an attempt is made to subdivide problems into subproblems and then apply ML models to these subproblems. The output from these models is then integrated in order to permit complex tasks, such as autonomous vehicle operation, in structured and unstructured environments.

3.2 Computer vision

Computer vision (CV) is a very wide field of research that merges scientific theories from various fields (as is often the case with AI), starting from biology, neuroscience, and psychology and extending all the way to computer science, mathematics, and physics. First, it is important to know how an image is produced physically. Before light hits sensors in a two-dimensional array, it is refracted, absorbed, scattered, or reflected, and an image is produced by measuring the intensity of the light beams through each element in the image (pixel). The three primary focuses of CV are:

- Reconstructing a scene and the point from which the scene is observed based on an image, an image sequence, or a video.

- Emulating biological visual perception in order to better understand which physical and biological processes are involved, how the wetware works, and how the corresponding interpretation and understanding work.

- Technical research and development focuses on efficient, algorithmic solutions – when it comes to CV software, problem-specific solutions that only have limited commonalities with the visual perception of biological organisms are often developed.

All three areas overlap and influence each other. If, for example, the focus in an application is on obstacle recognition in order to initiate an automated braking maneuver in the event of a pedestrian appearing in front of the vehicle, the most important thing is to identify the pedestrian as an obstacle. Interpreting the entire scene, e.g., understanding that the vehicle is moving towards a family having a picnic in a field, is not necessary in this case. In contrast, understanding a scene is an essential prerequisite if context is a relevant input, as is the case when developing domestic robots that need to understand that an occupant who is lying on the floor not only represents an obstacle that needs to be evaded, but is also probably not sleeping and may be experiencing a medical emergency.

Vision in biological organisms is regarded as an active process that includes controlling the sensor and is tightly linked to successful performance of an action. Consequently, CV systems are not passive either. In other words, the system must:

- Be continuously provided with data via sensors (streaming)

- Act based on this data stream

However, the goal of CV systems is not to understand scenes in images. First and foremost, the systems must extract the relevant information for a specific task from the scene. This means that they must identify a “region of interest” that will be used for processing. Moreover, these systems must feature short response times, since it is probable that scenes will change over time and that a heavily delayed action will not achieve the desired effect. Many different methods have been proposed for object recognition purposes (“what” is located “where” in a scene), including:

- Object detectors, in which case a window moves over the image and a filter response is determined for each position by comparing a template and the sub-image (window content), with each new object parameterization requiring a separate scan. More sophisticated algorithms simultaneously make calculations based on various scales and apply filters that have been learned from a large number of images.

- Segment-based techniques extract a geometrical description of an object by grouping pixels that define the dimensions of an object in an image. Based on this, a fixed feature set is computed, i.e., the features in the set retain the same values even when subjected to various image transformations, such as changes in light conditions, scaling, or rotation. These features are used to clearly identify objects or object classes, one example being the aforementioned identification of traffic signs.

- Alignment-based methods use parametric object models that are trained on data. Algorithms search for parameters, such as scaling, translation, or rotation, that adapt a model optimally to the corresponding features in the image, whereby an approximated solution can be found by means of a reciprocal process, i.e., by features, such as contours, corners, or others, “selecting” characteristic points in the image for parameter solutions that are compatible with the found feature.
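The window-based object detector from the first item of this list can be sketched as plain template matching. This is a deliberately simplified illustration: the filter response is assumed to be the sum of absolute differences between template and window content (lower is better), only one scale is scanned, and the tiny grayscale image is invented.

```python
from typing import List, Tuple

Image = List[List[int]]


def match_template(image: Image, template: Image) -> Tuple[int, int]:
    """Slide the template over the image and return the (row, col) of the window
    with the lowest sum of absolute differences."""
    th, tw = len(template), len(template[0])
    best_pos, best_score = (0, 0), float("inf")
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            # Filter response for this window position.
            score = sum(
                abs(image[r + i][c + j] - template[i][j])
                for i in range(th)
                for j in range(tw)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos


# Hypothetical grayscale image with a bright 2x2 patch at row 1, column 2.
image: Image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 9, 9, 0],
    [0, 0, 0, 0, 0],
]
template: Image = [[9, 9], [9, 9]]
position = match_template(image, template)
print(position)
```

Detecting the same object at another size would require a further scan with a rescaled template, which is the "separate scan per parameterization" cost mentioned in the list.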

With object recognition, it is necessary to decide whether algorithms need to process 2-D or 3-D representations of objects. 2-D representations are very frequently a good compromise between accuracy and availability. Current research (deep learning) shows that even distances between two points can be accurately determined based on two 2-D images captured from different points as an input. In daylight conditions and with reasonably good visibility, this input can be used in addition to data acquired with laser and radar equipment in order to increase accuracy; moreover, a single camera is sufficient to generate the required data. In contrast to 3-D objects, no shape, depth, or orientation information is directly encoded in 2-D images. Depth can be encoded in a variety of ways, such as with the use of laser or stereo cameras (emulating human vision) and structured light approaches (such as Kinect). At present, the most intensively pursued research direction involves the use of superquadrics: geometric shapes defined with formulas, which use any number of exponents to identify structures such as cylinders, cubes, and cones with round or sharp edges. This allows a large variety of different basic shapes to be described with a small set of parameters. If 3-D images are acquired using stereo cameras, statistical methods (such as generating a stereo point cloud) are used instead of the aforementioned shape-based methods, because the data quality achieved with stereo cameras is poorer than that achieved with laser scans.

Other research directions include tracking, contextual scene understanding, and monitoring, although these aspects are currently of secondary importance to the automotive industry.

3.3 Inference and decision-making

This field of research, referred to in the literature as “knowledge representation & reasoning” (KRR), focuses on designing and developing data structures and inference algorithms. Problems solved by making inferences are very often found in applications that require interaction with the physical world (humans, for example), such as generating diagnostics, planning, processing natural languages, answering questions, etc. KRR forms the basis for AI at the human level.

Making inferences is the area of KRR in which data-based answers need to be found without human intervention or assistance, and for which data is normally provided in a formal system with distinct and clear semantics. Since 1980, it has been assumed that the data involved is a mixture of simple and complex structures, with the former having a low degree of computational complexity and forming the basis for research involving large databases. The latter are presented in a language with more expressive power, which requires less space for representation, and they correspond to generalizations and fine-grained information.

Decision-making is a type of inference that revolves primarily around answering questions regarding preferences between activities, for example when an autonomous agent attempts to fulfill a task for a person. Such decisions are very often made in a dynamic domain that changes over the course of time and when actions are executed. An example of this is a self-driving car that needs to react to changes in traffic.

Logic and combinatorics

Mathematical logic is the formal basis for many applications in the real world, including calculation theory, our legal system and corresponding arguments, and theoretical developments and evidence in the field of research and development. The initial vision was to represent every type of knowledge in the form of logic and use universal algorithms to make inferences from it, but a number of challenges arose. For example, not all types of knowledge can be represented simply. Moreover, compiling the knowledge required for complex applications can be very complex, and it is not easy to learn this type of knowledge in a logical, highly expressive language. In addition, it is not easy to make inferences with the required highly expressive language; in extreme cases, such scenarios cannot be implemented computationally, even if the first two challenges are overcome. Currently, there are three ongoing debates on this subject. The first focuses on the argument that logic is unable to represent many concepts, such as space, analogy, shape, uncertainty, etc., and consequently cannot be included as an active part in developing AI to a human level. The counterargument states that logic is simply one of many tools, and that, at present, the combination of symbolic expressiveness, flexibility, and clarity cannot be achieved with any other method or system. The second debate revolves around the argument that logic is too slow for making inferences and will therefore never play a role in a productive system. The counterargument here is that ways exist to approximate the inference process with logic, so that processing is drawing close to remaining within the required time limits, and progress is being made with regard to logical inference. Finally, the third debate revolves around the argument that it is extremely difficult, or even impossible, to develop systems based on logical axioms into applications for the real world. The counterarguments in this debate are primarily based on the work of individuals currently researching techniques for learning logical axioms from natural-language texts.

In principle, a distinction is made between four different types of logic which are not discussed any further in this article:

- Propositional logic

- First-order predicate logic

- Modal logic

- Non-monotonic logic

Automated decision-making, such as that found in autonomous robots (vehicles), WWW agents, and communications agents, is also worth mentioning at this point. This type of decision-making is particularly relevant when it comes to representing expert decision-making processes with logic and automating them. Very frequently, this type of decision-making process takes into account the dynamics of the surroundings, for example when a transport robot in a production plant needs to evade another transport robot. However, this is not a basic prerequisite, for example when a one-off decision about the future is made without such dynamics, e.g., the decision to rent a warehouse at a specific price at a specific location. Decision-making as a field of research encompasses multiple domains, such as computer science, psychology, economics, and all engineering disciplines. Several fundamental questions need to be answered to enable the development of automated decision-making systems:

- Is the domain dynamic to the extent that a sequence of decisions is required or static in the sense that a single decision or multiple simultaneous decisions need to be made?

- Is the domain deterministic, non-deterministic, or stochastic?

- Is the objective to optimize benefits or to achieve a goal?

- Is the domain known to its full extent at all times? Or is it only partially known?

Logical decision-making problems are non-stochastic in nature as far as planning and conflicting behavior are concerned. Both require that the available information about the initial and intermediate states be complete, that actions have exclusively deterministic, known effects, and that a specific defined goal exists. These problem types are often applied in the real world, for example in robot control, logistics, complex behavior in the WWW, and in computer and network security.

In general, planning problems consist of an initial (known) situation, a defined goal, and a set of permitted actions or transitions between steps. The result of a planning process is a sequence or set of actions that, when executed correctly, take the executing entity from an initial state to a state that meets the target conditions. Computationally speaking, planning is a difficult problem, even if simple problem specification languages are used. Even when relatively simple problems are involved, the search for a plan cannot run through all state-space representations, as these are exponentially large in the number of state variables that define the domain. Consequently, the aim is to develop efficient algorithms that work on sub-representations and search through these with the hope of achieving the relevant goal. Current research is focused on developing new search methods and new representations for actions and states, which will make planning easier. Particularly when one or more agents acting against each other are taken into account, it is crucial to find a balance between learning and decision-making, because exploration for the sake of learning while decisions are being made can lead to undesirable results.
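As a minimal sketch of such a planning problem, the following Python snippet runs a breadth-first search over a small, hypothetical shop-floor grid. The grid size, the blocked cells, the action names, and the function `plan` are illustrative assumptions rather than part of any particular planning system; realistic planners rely on more compact representations and heuristics, because exhaustive search does not scale.

```python
from collections import deque


def plan(start, goal, actions, is_allowed):
    """Breadth-first search for a shortest action sequence from start to goal."""
    frontier = deque([start])
    came_from = {start: None}  # state -> (previous state, action name)
    while frontier:
        state = frontier.popleft()
        if state == goal:
            # Walk back through the predecessors to recover the plan.
            steps = []
            while came_from[state] is not None:
                state, action = came_from[state]
                steps.append(action)
            return steps[::-1]
        for name, (dx, dy) in actions.items():
            nxt = (state[0] + dx, state[1] + dy)
            if is_allowed(nxt) and nxt not in came_from:
                came_from[nxt] = (state, name)
                frontier.append(nxt)
    return None  # the goal cannot be reached


# Hypothetical 3x3 shop floor; two cells are occupied by another robot.
ACTIONS = {"right": (1, 0), "left": (-1, 0), "up": (0, 1), "down": (0, -1)}
BLOCKED = {(1, 0), (1, 1)}


def is_allowed(cell):
    x, y = cell
    return 0 <= x < 3 and 0 <= y < 3 and cell not in BLOCKED


route = plan((0, 0), (2, 0), ACTIONS, is_allowed)
print(route)
```

Here the initial situation is the cell `(0, 0)`, the goal is the cell `(2, 0)`, and the permitted transitions are the four moves. The returned list is the sequence of actions that leads around the blocked cells.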

Many problems in the real world have dynamics of a stochastic nature. One example of this is buying a vehicle with features that affect its value but of which we are unaware. These dependencies influence the buying decision, so it is necessary to allow risks and uncertainties to be considered. For all intents and purposes, stochastic domains are more challenging when it comes to making decisions, but they are also more flexible than deterministic domains with regard to approximations. In other words, simplifying practical assumptions makes automated decision-making possible in practice. A great number of problem formulations exist that can be used to represent various aspects and decision-making processes in stochastic domains, the best known being decision networks and Markov decision processes.
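To make the notion of a Markov decision process more concrete, the sketch below applies value iteration to a tiny, invented traffic example. The states, actions, probabilities, rewards, and the discount factor are all assumptions chosen for illustration only.

```python
def value_iteration(mdp, gamma=0.9, tol=1e-9):
    """Return state values and a greedy policy for a small MDP.

    mdp[state][action] is a list of (probability, next_state, reward) tuples;
    a state with no actions is treated as terminal.
    """
    values = {s: 0.0 for s in mdp}

    def q(state, action):
        # Expected return of taking `action` in `state` under current values.
        return sum(p * (r + gamma * values[s2]) for p, s2, r in mdp[state][action])

    while True:
        delta = 0.0
        for s in mdp:
            if not mdp[s]:
                continue  # terminal state keeps value 0
            best = max(q(s, a) for a in mdp[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    policy = {s: max(mdp[s], key=lambda a: q(s, a)) for s in mdp if mdp[s]}
    return values, policy


# Hypothetical route choice: rewards are negative travel costs.
MDP = {
    "congested": {
        "stay": [(0.5, "congested", -2.0), (0.5, "free", -2.0)],
        "detour": [(1.0, "free", -3.0)],
    },
    "free": {
        "drive": [(0.9, "arrived", -1.0), (0.1, "congested", -1.0)],
    },
    "arrived": {},
}

values, policy = value_iteration(MDP)
print(policy["congested"], round(values["congested"], 3))
```

With these invented numbers, taking the detour is the better choice in the congested state, even though its immediate cost is higher, because staying carries the risk of remaining stuck.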

Many applications require a combination of logical (non-stochastic) and stochastic elements, for example when the control of robots requires high-level specifications in logic and low-level representations for a probabilistic sensor model. Natural language processing is another area in which this applies, since high-level knowledge in logic needs to be combined with low-level models of text and spoken signals.

3.4 Language and communication

In the field of artificial intelligence, processing language is considered to be of fundamental importance, with a distinction being made here between two fields: computational linguistics (CL) and natural language processing (NLP). In short, the difference is that CL research focuses on using computers for language processing purposes, while NLP comprises all applications, including machine translation (MT), Q&A, document summarization, and information extraction, to name but a few. In other words, NLP requires a specific task and is not a research discipline per se. NLP comprises:

- Part-of-speech tagging

- Natural language understanding

- Natural language generation

- Automatic summarization

- Named-entity recognition

- Parsing

- Voice recognition

- Sentiment analysis

- Language, topic, and word segmentation

- Co-reference resolution

- Discourse analysis

- Machine translation

- Word sense disambiguation

- Morphological segmentation

- Question answering

- Relationship extraction

- Sentence splitting

The core vision of AI says that a version of first-order predicate logic (first-order predicate calculus, or FOPC), supported by the necessary mechanisms for the respective problem, is sufficient for representing language and knowledge. This thesis says that logic can and should supply the semantics underlying natural language. Although attempts to use a form of logical semantics as the key to representing content have made progress in the fields of AI and linguistics, they have had little success with regard to a program that can translate English into formal logic. To date, the field of psychology has also failed to provide proof that this type of translation into logic corresponds to the way in which people store and manipulate meaning. Consequently, the ability to translate a language into FOPC remains an elusive goal. Without a doubt, there are NLP applications that need to establish logical inferences between sentence representations, but if these are only one part of an application, it is not clear that they have anything to do with the underlying meaning of the corresponding natural language (and consequently with CL/NLP), since the original task for logical structures was inference. These and other considerations have crystallized into three different positions:

- Position 1: Logical inferences are tightly linked to the meaning of sentences, because knowing their meaning is equivalent to deriving inferences and logic is the best way to do this.

- Position 2: A meaning exists outside logic, which postulates a number of semantic markers or primes that are appended to words in order to express their meaning – this is prevalent today in the form of annotations.

- Position 3: In general, the predicates of logic and formal systems only appear to be different from human language, but their terms are in actuality the words as which they appear.

The introduction of statistical and AI methods into the field is the latest trend in this context. The general strategy is to learn how language is processed, ideally in the way that humans do this, although this is not a basic prerequisite. In terms of ML, this means learning based on extremely large corpora that have been translated manually by humans. This often means that it is necessary to learn (algorithmically) how annotations are assigned, or how part-of-speech categories (the classification of words and punctuation marks in a text into word types) or semantic markers or primes are added to corpora, all based on corpora that have been prepared by humans (and are therefore correct). In the case of supervised learning, it is possible to learn potential associations of part-of-speech tags with words that have been annotated by humans in the text, so that the algorithms are also able to annotate new, previously unknown texts. This works the same way for lightly supervised and unsupervised learning, such as when no annotations have been made by humans and the only data presented is a text in one language together with texts with identical content in other languages, or when relevant clusters are found in thesaurus data without there being a defined goal.

With regard to AI and language, information retrieval (IR) and information extraction (IE) play a major role. One of the main tasks of IR is grouping texts based on their content, whereas IE extracts factual elements from texts or is used to answer questions concerning text contents. These fields correlate very strongly with each other, since individual sentences (not only long texts) can also be regarded as documents. These methods are used, for example, in interactions between users and systems, such as when a driver asks the on-board computer a question about the owner's manual during a journey. Once the spoken input has been converted into text, the question's semantic content is used as the basis for finding the answer in the manual, and then for extracting the answer and returning it to the driver.
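The retrieval step in the owner's manual example can be sketched as follows. This is a deliberately simple stand-in that ranks passages by bag-of-words cosine similarity instead of analyzing semantic content; the manual passages, the question, and the function names are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def best_passage(question, passages):
    """Return the passage whose word counts are most similar to the question."""
    q = Counter(tokenize(question))
    return max(passages, key=lambda p: cosine(q, Counter(tokenize(p))))


# Hypothetical excerpts from an owner's manual.
MANUAL = [
    "To check the tire pressure, use the gauge at the valve of each tire.",
    "The fuel filler flap is located on the right rear side of the vehicle.",
    "To pair a phone, open the Bluetooth menu on the center display.",
]

answer = best_passage("How do I check the tire pressure?", MANUAL)
print(answer)
```

Each sentence of the manual is treated as its own document here, which mirrors the observation that individual sentences can be regarded as documents. A production system would add speech recognition in front of this step and a proper answer extraction step behind it.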

3.5 Agents and actions

In traditional AI, people focused primarily on individual, isolated software systems that acted relatively inflexibly according to predefined rules. However, new technologies and applications have established a need for artificial entities that are more flexible, adaptive, and autonomous, and that act as social units in multi-agent systems. In traditional AI (see also the physical symbol system hypothesis, which has long been embedded in so-called deliberative systems), an action theory that establishes how systems make decisions and act is represented logically in the individual systems that must execute actions. Based on these rules, the system must prove a theorem. The prerequisite here is that the system receives a description of the world in which it currently finds itself, the desired goal state, and a set of actions, together with the prerequisites for executing these actions and a list of the results of each action. It turned out that the computational complexity involved rendered any system with time limits useless, even when dealing with simple problems. This had an enormous impact on symbolic AI and led to the development of reactive architectures. These architectures follow if-then rules that translate inputs directly into tasks. Such systems are extremely simple, although they can solve very complex tasks. The problem is that such systems learn procedures rather than declarative knowledge, i.e., they learn attributes that cannot easily be generalized to similar situations. Many attempts have been made to combine deliberative and reactive systems, but it appears to be necessary to focus either on impractical deliberative systems or on very loosely developed reactive systems; focusing on both is not optimal.

Principles of the new, agent-centered approach

The agent-oriented approach is characterized by the following principles:

- Autonomous behavior:

“Autonomy” describes the ability of systems to make their own decisions and execute tasks on behalf of the system designer. The goal is to allow systems to act autonomously in scenarios where controlling them directly is difficult. Traditional software systems execute methods after these methods have been called, i.e., they have no choice, whereas agents make decisions based on their beliefs, desires, and intentions (BDI).

- Adaptive behavior:

Since it is impossible to predict all the situations that agents will encounter, these agents must be able to act flexibly. They must be able to learn from and about their environment and adapt accordingly. This task is all the more difficult if not only nature is a source of uncertainty, but the agent is also part of a multi-agent system. Only environments that are not static and self-contained allow for an effective use of BDI agents. Reinforcement learning, for example, can be used to compensate for a lack of knowledge of the world. In this context, agents are located in an environment that is described by a set of possible states. Every time an agent executes an action, it is “rewarded” with a numerical value that expresses how good or bad the action was. This results in a series of states, actions, and rewards, and the agent is compelled to determine a course of action that maximizes the reward.

- Social behavior:

In an environment in which various entities act, it is necessary for agents to recognize their adversaries and to form groups if this is required by a common goal. Agent-oriented systems are used for personalizing user interfaces, as middleware, and in competitions such as the RoboCup. In a scenario in which there are only self-driving cars on the roads, the individual agent's autonomy is not the only indispensable component: car2car communications, i.e., the exchange of information between vehicles and acting as a group on this basis, are just as important. Coordination between the agents results in an optimized flow of traffic, rendering traffic jams and accidents virtually impossible (see also section 5.1, “Vehicles as autonomous, adaptive, and social agents & cities as super-agents”).

In summary, this agent-oriented approach is accepted within the AI community as the direction of the future.
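The reward-driven learning described under adaptive behavior can be illustrated with tabular Q-learning, one common reinforcement learning method that is used here as an example rather than as the only option. The corridor environment, the reward of 1 at the goal, and all hyperparameters are assumptions made for this sketch.

```python
import random

N_STATES, GOAL = 5, 4   # hypothetical corridor with cells 0..4
ACTIONS = (-1, +1)      # move left or move right


def step(state, action):
    """Apply an action; the agent is rewarded only on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(N_STATES)}
    for _ in range(episodes):
        state = 0
        for _ in range(1000):  # safety cap on episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state].values())
                # Exploit, breaking ties between equally good actions at random.
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward = step(state, action)
            target = reward + gamma * max(q[nxt].values())
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if state == GOAL:
                break
    return q


q_table = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)}
print(policy)
```

The agent starts without any knowledge of the environment and arrives at a course of action (always move right) purely from the sequence of states, actions, and rewards it experiences.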

Multi-agent behavior

Various approaches are being pursued for implementing multi-agent behavior, with the primary difference being in the degree of control that designers have over individual agents. A distinction is made here between:

- Distributed problem-solving systems (DPS)

- Multi-agent systems (MAS)

DPS systems allow the designer to control each individual agent in the domain, with the solution to the task being distributed among multiple agents. In contrast, MAS systems have multiple designers, each of whom can only influence their own agents and has no access to the design of any other agent. In this case, the design of the interaction protocols is very important. In DPS systems, agents jointly attempt to achieve a goal or solve a problem, whereas in MAS systems each agent is individually motivated and wants to achieve its own goal and maximize its own benefit. The goal of DPS research is to find collaboration strategies for problem-solving while minimizing the amount of communication required for this purpose. Meanwhile, MAS research is looking at coordinated interaction, i.e., how autonomous agents can be brought to find a common basis for communication and undertake consistent actions. Ideally, a world in which only self-driving cars use the road would be a DPS world. However, the current competition between OEMs means that a MAS world will come into being first. In other words, communication and negotiation between agents will take center stage (see also Nash equilibrium).
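The reference to the Nash equilibrium can be illustrated with a small two-agent game. In the sketch below, two self-interested vehicles meet at a narrow passage and each chooses to go or to yield; the payoff values are invented, and only pure strategies are considered.

```python
from itertools import product


def pure_nash_equilibria(payoffs, actions):
    """Return all action pairs from which neither player gains by deviating alone.

    payoffs[(a, b)] is a tuple (payoff to vehicle A, payoff to vehicle B).
    """
    equilibria = []
    for a, b in product(actions, repeat=2):
        u_a, u_b = payoffs[(a, b)]
        a_cannot_improve = all(payoffs[(alt, b)][0] <= u_a for alt in actions)
        b_cannot_improve = all(payoffs[(a, alt)][1] <= u_b for alt in actions)
        if a_cannot_improve and b_cannot_improve:
            equilibria.append((a, b))
    return equilibria


# Hypothetical payoffs: a collision is costly, mutual yielding wastes time.
ACTIONS = ("go", "yield")
PAYOFFS = {
    ("go", "go"): (-10, -10),
    ("go", "yield"): (2, 0),
    ("yield", "go"): (0, 2),
    ("yield", "yield"): (-1, -1),
}

equilibria = pure_nash_equilibria(PAYOFFS, ACTIONS)
print(equilibria)
```

Two equilibria exist in this example, so the agents still need to agree on which one to play. This is one way to see why interaction protocols and negotiation matter in a MAS setting.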

Multi-agent learning

Multi-agent learning (MAL) has only relatively recently received a certain degree of attention. The key problems in this area include determining which techniques should be used and what exactly “multi-agent learning” means. Current ML approaches were developed to train individual agents, whereas MAL focuses first and foremost on distributed learning. “Distributed” does not necessarily mean that a neural network is used, in which many identical operations run during training and can accordingly be parallelized, but rather that:

- A problem is split into subproblems and individual agents learn these subproblems in order to solve the main problem using their combined knowledge OR

- Many agents try to solve the same problem independently of each other by competing with each other

Reinforcement learning is one of the approaches being used in this context.

4 Data mining and artificial intelligence in the automotive industry

At a high level of abstraction, the value chain in the automotive industry can broadly be described with the following subprocesses:

- Development

- Procurement

- Logistics

- Production

- Marketing

- Sales, after-sales, and retail

- Connected customer

Each of these areas already features a significant level of complexity, so the following description of data mining and artificial intelligence applications has necessarily been restricted to an overview.

4.1 Development

Vehicle development has become a largely virtual process, and this is now the accepted state of the art for nearly all manufacturers. CAD models and simulations (typically of physical processes, such as mechanics, flow, acoustics, and vibration, on the basis of finite element models) are used extensively in all stages of the development process.

The subject of optimization (often with the use of evolution strategies or genetic algorithms and related methods) is usually less well covered, even though it is precisely here in the development process that it can frequently yield impressive results. Multi-disciplinary optimization, in which multiple development disciplines (such as occupant safety and noise, vibration, and harshness (NVH)) are combined and optimized simultaneously, is still rarely used in many cases due to supposedly excessive computation time requirements. However, precisely this approach offers enormous potential when it comes to agreeing more quickly and efficiently across the departments involved on a common design that is optimal in terms of the requirements of multiple departments.
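As a minimal illustration of an evolution strategy, the following sketch implements a (1+1)-ES with a simple step-size adaptation that roughly follows the one-fifth success rule. The quadratic objective is a hypothetical stand-in for a simulation-based design objective, and its optimum at (2, -1) is chosen arbitrarily.

```python
import random


def one_plus_one_es(objective, start, sigma=1.0, iterations=2000, seed=0):
    """Minimize `objective` with a (1+1) evolution strategy."""
    rng = random.Random(seed)
    parent, parent_cost = list(start), objective(start)
    for _ in range(iterations):
        # Mutate every design variable with Gaussian noise of width sigma.
        child = [x + rng.gauss(0.0, sigma) for x in parent]
        child_cost = objective(child)
        if child_cost < parent_cost:
            parent, parent_cost = child, child_cost
            sigma *= 1.5   # success: try larger steps
        else:
            sigma *= 0.9   # failure: try smaller steps
    return parent, parent_cost


def design_cost(x):
    """Hypothetical objective with its minimum at (2, -1)."""
    return (x[0] - 2.0) ** 2 + (x[1] + 1.0) ** 2


best, best_cost = one_plus_one_es(design_cost, start=[0.0, 0.0])
print(best, best_cost)
```

In practice, each call to the objective would trigger one or more simulations, which is why the number of evaluations, rather than the optimizer itself, dominates the computation time.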

In terms of the analysis and further use of simulation results, data mining is already being used frequently to generate so-called “response surfaces.” In this application, data mining methods (the entire spectrum, ranging from linear models to Gaussian processes, support vector machines, and random forests) are used to learn a nonlinear regression model that approximates the mapping from the input vectors of the simulation to the relevant (numerical) simulation results. Since this model needs to have good interpolation characteristics, cross-validation methods, which allow the model's prediction quality for new input vectors to be estimated, are typically used when training the algorithms. The goal behind this use of supervised learning methods is frequently to replace computation-time-intensive simulations with a fast approximation model that, for example, represents a specific component and can be used in another application. In addition, this allows time-consuming adjustment processes to be carried out faster and with greater transparency during development.

One example: It is desirable to be able to evaluate the forming feasibility of geometric variations in components immediately during an interdepartmental meeting, instead of having to run complex simulations and wait one or two days for the results. A response surface model that has previously been trained using simulations can immediately provide a very good approximation of the risk of excessive thinning or cracks in this type of meeting, and this approximation can then be used directly for evaluating the corresponding geometry.
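A response surface of this kind can be sketched in a few lines. The example below uses Gaussian kernel regression with leave-one-out cross-validation to choose the bandwidth; this is a substitute chosen for brevity, not one of the methods listed above. The function `simulate_thinning`, the input range, and the candidate bandwidths are hypothetical.

```python
import math


def simulate_thinning(depth):
    """Hypothetical stand-in for an expensive forming simulation."""
    return 0.1 + 0.05 * depth ** 2


def predict(x, xs, ys, bandwidth):
    """Kernel-weighted average of the training results around x."""
    weights = [math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in xs]
    return sum(w * y for w, y in zip(weights, ys)) / sum(weights)


def loo_error(xs, ys, bandwidth):
    """Leave-one-out mean squared error for a given bandwidth."""
    total = 0.0
    for i in range(len(xs)):
        rest_x = xs[:i] + xs[i + 1:]
        rest_y = ys[:i] + ys[i + 1:]
        total += (predict(xs[i], rest_x, rest_y, bandwidth) - ys[i]) ** 2
    return total / len(xs)


# "Run" the simulation on a coarse grid of drawing depths (arbitrary units).
train_x = [i / 10 for i in range(21)]
train_y = [simulate_thinning(x) for x in train_x]

# Pick the bandwidth with the best estimated prediction quality.
bandwidth = min([0.05, 0.1, 0.2, 0.5], key=lambda h: loo_error(train_x, train_y, h))

# Fast approximation for a geometry variant that was never simulated.
estimate = predict(1.05, train_x, train_y, bandwidth)
print(bandwidth, estimate)
```

Once trained, `predict` returns an estimate essentially instantly, which is the property that makes such a model usable during a meeting.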

These applications are frequently focused on or limited to specific development areas. Among other reasons, this is because simulation data management, in its role as a central interface between data generation and data usage and analysis, constitutes a bottleneck. This applies especially when simulation data is intended for use across multiple departments, variants, and model series, as is essential for real use of data in the sense of a continuously learning development organization. The current situation in practice is that department-specific simulation data is often organized in the form of file trees in the respective file system within a department, which makes it difficult to access for an evaluation based on machine learning methods. In addition, simulation data may already be very voluminous for an individual simulation (in the range of terabytes for the latest CFD simulations), so efficient storage solutions are urgently required for machine-learning-based analyses.

While simulation and the use of nonlinear regression models limited to individual applications have become the standard, the opportunities offered by optimizing analytics are rarely being exploited. Particularly with regard to such important issues as multi-disciplinary (as well as cross-departmental) machine learning, learning based on historical data (in other words, learning from current development projects for future projects), and cross-model learning, there is an enormous and completely untapped potential for increasing efficiency.

4.2 Procurement

The procurement process uses a wide variety of data concerning suppliers, purchase prices, discounts, delivery reliability, hourly rates, raw material specifications, and other variables. Consequently, computing KPIs for the purpose of evaluating and ranking suppliers poses no problem whatsoever today. Data mining methods allow the available data to be used, for example, to generate forecasts, to identify the supplier characteristics with the greatest impact on performance criteria, or to predict delivery reliability. In terms of optimizing analytics, the specific parameters that an automaker can influence in order to achieve optimum conditions are also important.

Overall, the finance business area is a very good field for optimizing analytics, because the available data contains information about the company's main success factors. Continuous monitoring is worth a brief mention as an example, here with reference to controlling. This monitoring is based on finance and controlling data, which is continuously prepared and reported. This data can also be used in the sense of predictive analytics in order to automatically generate forecasts for the coming week or month. In terms of optimizing analytics, analyses of the key influencing parameters, together with suggested optimizing actions, can also be added to these forecasts.

These subject areas are more of a vision than a reality at present, but they do convey an idea of what could be possible in the fields of procurement, finance, and controlling.

4.3 Logistics

In the field of logistics, a distinction can be made between procurement logistics, production logistics, distribution logistics, and spare parts logistics.

Procurement logistics considers the process chain extending from the purchasing of goods through to shipment of the material to the receiving warehouse. When it comes to the purchasing of goods, a large amount of historical price information is available for data mining purposes, which can be used to generate price forecasts and, in combination with delivery reliability data, to analyze supplier performance. As for shipment, optimizing analytics can be used to identify and optimize the key cost factors.

A similar situation applies to production logistics, which deals with planning, controlling, and monitoring internal transportation, handling, and storage processes. Depending on the granularity of the available data, it is possible here, for example, to identify bottlenecks, optimize stock levels, and minimize the time required.

Distribution logistics deals with all aspects involved in transporting products to customers and, for OEMs, can refer to both new and used vehicles. Since the primary considerations here are the relevant costs and delivery reliability, all the subcomponents of the multimodal supply chain need to be taken into account, from rail to ship and truck transportation through to subaspects such as the optimal combination of individual vehicles on a truck. In terms of used-vehicle logistics, optimizing analytics can be used to assign vehicles to individual distribution channels (e.g., auctions, Internet) on the basis of a suitable, vehicle-specific resale value forecast in order to maximize total sale proceeds. GM implemented this approach as long ago as 2003 in combination with a forecast of expected vehicle-specific sales revenue.
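The channel assignment for used vehicles can be sketched as a small optimization problem. The example below is an illustration only and does not reproduce any manufacturer's actual method: the resale forecasts, the channel capacities, and the brute-force search are all assumptions, and a realistic fleet size would call for an assignment or integer programming solver.

```python
from itertools import product


def assign_channels(forecasts, capacity):
    """Find the vehicle-to-channel assignment with the highest total proceeds.

    forecasts[vehicle][channel] is the predicted resale value;
    capacity[channel] is the maximum number of vehicles the channel can take.
    """
    vehicles = list(forecasts)
    channels = list(capacity)
    best_total, best_plan = float("-inf"), None
    for choice in product(channels, repeat=len(vehicles)):
        if any(choice.count(c) > capacity[c] for c in channels):
            continue  # violates a channel capacity
        total = sum(forecasts[v][c] for v, c in zip(vehicles, choice))
        if total > best_total:
            best_total, best_plan = total, dict(zip(vehicles, choice))
    return best_plan, best_total


# Hypothetical vehicle-specific resale value forecasts per channel.
FORECASTS = {
    "vehicle_1": {"auction": 9000, "internet": 9800},
    "vehicle_2": {"auction": 12000, "internet": 12900},
    "vehicle_3": {"auction": 7000, "internet": 7200},
}
CAPACITY = {"auction": 2, "internet": 1}

plan_by_vehicle, total_proceeds = assign_channels(FORECASTS, CAPACITY)
print(plan_by_vehicle, total_proceeds)
```

Although every vehicle is forecast to sell for more online, the limited capacity means that the single online slot goes to the vehicle with the largest price difference between the two channels.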

In spare parts logistics, i.e., the provision of spare parts and their storage, data-driven forecasts of the number of spare parts that need to be held in stock depending on model and model age (or sold volumes) are one important potential application area for data mining, because such forecasts can significantly reduce storage costs.

As the preceding examples show, data analytics and optimization must frequently be coupled with simulations in the field of logistics, because specific aspects of the logistics chain need to be simulated in order to evaluate and optimize scenarios. Another example is the supplier network, which, when understood in greater depth, can be used to identify and, if possible, avoid critical paths in the logistics chain. This is particularly important, as the failure of a supplier on the critical path to make a delivery would result in a production stoppage for the automaker. Simulating the supplier network not only allows this type of bottleneck to be identified, but also allows countermeasures to be optimized. However, experience has shown that a simulation that is as detailed and accurate as possible requires mapping all subprocesses and interactions between suppliers in detail, and that this becomes too complex and nontransparent for the automaker as soon as attempts are made to include Tier 2 and Tier 3 suppliers as well.

This is why data-driven modeling should be considered as an alternative. When this approach is used, a model is learned from the available data about the supplier network (suppliers, products, dates, delivery periods, etc.) and about logistics (stock levels, delivery frequencies, production sequences) by means of data mining methods. The model can then be used as a forecast model in order, for example, to predict the effects of a delivery delay for specific parts on the production process. Furthermore, the use of optimizing analytics in this case makes it possible to perform a worst-case analysis, i.e., to identify the parts and suppliers that would bring about production stoppages the fastest if their delivery were to be delayed. This example shows very clearly that optimization, in the sense of scenario analysis, can also be used to determine the worst-case scenario for an automaker (and then to optimize countermeasures in future).
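The idea behind such a worst-case analysis can be shown with a strongly simplified calculation. Instead of a model learned from supplier network data, the sketch below only compares how many days the current stock of each part would last if deliveries stopped; the part names, suppliers, and figures are invented.

```python
def rank_by_stoppage_risk(parts):
    """Sort parts by the number of days the current stock covers demand.

    The part with the shortest coverage would stop production first
    if its deliveries were delayed.
    """
    cover = {name: p["stock"] / p["daily_demand"] for name, p in parts.items()}
    return sorted(cover.items(), key=lambda item: item[1])


# Hypothetical stock levels and daily consumption on the production line.
PARTS = {
    "wiring harness": {"supplier": "A", "stock": 1200, "daily_demand": 400},
    "seat frame": {"supplier": "B", "stock": 900, "daily_demand": 150},
    "brake caliper": {"supplier": "C", "stock": 500, "daily_demand": 250},
}

ranking = rank_by_stoppage_risk(PARTS)
most_critical_part, days_of_cover = ranking[0]
print(most_critical_part, "from supplier", PARTS[most_critical_part]["supplier"],
      "lasts", days_of_cover, "days")
```

A learned model would replace the fixed daily demand with forecasts that depend on production sequences and delivery frequencies, but the ranking step would remain the same.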

4.4 Production

Every sub-step of the production process will benefit from the consistent use of data mining. It is therefore essential for all manufacturing process parameters to be continuously recorded and stored. Since the main goal of optimization is usually to improve quality or reduce the incidence of defects, data concerning the defects that occur and the type of defect is required, and it must be possible to clearly assign this data to the process parameters. This approach can be used to achieve significant improvements, particularly in new types of production process, one example being CFRP. Other potential optimization areas include energy consumption and the throughput of a production process per time unit. Optimizing analytics can be applied both offline and online in this context.

When used in offline applications, the analysis identifies variables that have a significant influence on the process. Furthermore, correlations are derived between these influencing variables and their targets (quality, etc.) and, if applicable, actions that can improve the targets are derived from this. Frequently, such analyses focus on a specific problem or an urgent issue with the process and can deliver a solution very efficiently; however, they are not geared towards continuous process optimization. Conducting the analyses and interpreting and implementing the results consistently requires manual sub-steps that can be carried out by data scientists or statisticians, usually in consultation with the respective process experts.
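A first offline analysis of this kind can be as simple as ranking process parameters by their correlation with a quality target. The sketch below uses the Pearson correlation coefficient on an invented process log; real analyses would also have to consider nonlinear effects and interactions between parameters.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical process log: one entry per production batch.
parameters = {
    "temperature": [200, 205, 210, 215, 220, 225],
    "pressure": [5.0, 5.2, 4.9, 5.1, 5.0, 5.2],
}
defect_rate = [1.0, 1.4, 2.1, 2.4, 3.1, 3.5]  # defects in percent

# Rank parameters by the strength of their linear relationship to the target.
ranked = sorted(
    ((name, pearson(values, defect_rate)) for name, values in parameters.items()),
    key=lambda item: abs(item[1]),
    reverse=True,
)
for name, r in ranked:
    print(f"{name}: r = {r:.2f}")
```

In this invented data set, temperature is strongly related to the defect rate while pressure is not, which would direct the process experts' attention to temperature control first.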

In the case of online applications, there is a very significant difference in the fact that the procedure is automated, resulting in completely new challenges for data acquisition and integration, data pre-processing, modeling, and optimization. In these applications, even the provision of process and quality data needs to be automated, as this provides integrated data that can be used as a basis for modeling at any time. This is crucial, given that modeling always needs to be performed when changes to the process (including drift) are detected. The resulting forecast models are then used automatically for optimization purposes and are capable of, e.g., forecasting the quality and suggesting (or directly implementing) actions for optimizing the relevant target variable (quality in this case) even further. This implementation of optimizing analytics, with automatic modeling and optimization, is technically available, although it is more a vision than a reality for most users today.
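One building block of such an online setup is the automatic detection of process drift, which then triggers re-modeling. The following sketch compares the mean of a sliding window with a reference period using a simple z-test; the window size, the threshold, and the measurements are assumptions, and production systems often use more robust change detection methods.

```python
from collections import deque
from statistics import mean, stdev


def detect_drift(reference, stream, window=5, threshold=3.0):
    """Return the index at which the windowed mean deviates from the reference.

    Returns None if no drift is detected in the stream.
    """
    ref_mean, ref_std = mean(reference), stdev(reference)
    standard_error = ref_std / window ** 0.5
    recent = deque(maxlen=window)
    for index, value in enumerate(stream):
        recent.append(value)
        if len(recent) == window:
            z = abs(mean(recent) - ref_mean) / standard_error
            if z > threshold:
                return index  # signal that the model should be retrained
    return None


# Hypothetical measurements of a process parameter.
reference = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.1, 9.9]
stream = [10.0, 9.9, 10.1, 10.0, 10.1, 10.4, 10.5, 10.6, 10.7]

drift_index = detect_drift(reference, stream)
print(drift_index)
```

In an automated pipeline, a detected drift would start a new modeling run on the most recent integrated process and quality data instead of merely printing an index.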

The potential applications include forming technology (for conventional as well as new materials), car body manufacturing, corrosion protection, painting, drive trains, and final assembly, and the approach can be adapted to other sub-steps. An integrated analysis of all process steps, covering all potential influencing factors and their impact on overall quality, is also conceivable in the future; in that case, the data from all subprocesses would have to be integrated.

4.5 Marketing

The focus in marketing is to reach the end customer as efficiently as possible and to persuade people either to become customers of the company or to remain customers. The success of marketing activities can be measured in sales figures, but it is important to separate marketing effects from other effects, such as the general economic situation of customers. Measuring the success of marketing activities can therefore be a complex endeavor, since multivariate influencing factors may be involved.

Ideally, optimizing analytics would be used consistently in marketing, because optimization goals such as maximizing return business from a marketing activity, maximizing sales figures while minimizing the budget employed, optimizing the marketing mix, and optimizing the order in which activities are carried out are all central concerns. Forecast models, such as those predicting additional sales over time as a result of a specific marketing campaign, are only one part of the required data mining results; multi-criteria decision-making support also plays a decisive role in this context.

Two prominent examples of the use of data mining in marketing are churn (customer turnover) and customer loyalty. In a saturated market, the top priority for automakers is to prevent the loss of customers, i.e., to plan and implement optimal countermeasures. This requires information that is as individualized as possible about the customer, the customer segment to which the customer belongs, the customer’s satisfaction and experience with their current vehicle, and data on competitors, their models, and their prices. Because some of this data is subjective (e.g., satisfaction surveys and individual satisfaction ratings), individualized churn predictions and optimal countermeasures (e.g., personalized discounts, refueling or cash rewards, incentives based on additional features) are a complex subject that is always relevant.

Since maximum data confidentiality is guaranteed and no personal data is recorded, unless the customer gives explicit consent in order to receive offers that are as individually tailored as possible, such analyses and optimizations are only possible at the level of customer segments that represent the characteristics of an anonymous subset of customers.

Customer loyalty is closely related to this subject and addresses the question of how to retain and optimize, i.e., increase, the loyalty of existing customers. The topic of “upselling,” i.e., offering existing customers a higher-value vehicle as their next one and succeeding with that offer, is always associated with it. Such issues are clearly complex, since they require information about customer segments, marketing campaigns, and the correlated sales successes in order to support analysis. However, this data is mostly unavailable, difficult to collect systematically, and characterized by varying levels of veracity, i.e., uncertainty in the data.

Similar considerations apply to optimizing the marketing mix, including the question of trade fair participation. In this case, data must be collected over longer periods of time so that it can be evaluated and conclusions can be drawn. For individual marketing campaigns such as mailing campaigns, evaluating the return business rate with regard to the characteristics of the selected target group is a much more likely objective of a data analysis and the corresponding campaign optimization.
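For the mailing campaign case, the evaluation of the return rate by target group characteristic can be sketched in a few lines. The record layout, the attribute name `age_group`, and the data are assumptions made for this illustration; in line with the data confidentiality constraints described earlier, the records are treated as anonymous segment-level observations.

```python
from collections import defaultdict


def response_rate_by_group(mailings, attribute):
    """Return the share of responding recipients for each value of `attribute`."""
    sent = defaultdict(int)
    responded = defaultdict(int)
    for record in mailings:
        key = record[attribute]
        sent[key] += 1
        responded[key] += 1 if record["responded"] else 0
    return {key: responded[key] / sent[key] for key in sent}


# Hypothetical, anonymized campaign records.
mailings = [
    {"age_group": "30-45", "responded": True},
    {"age_group": "30-45", "responded": False},
    {"age_group": "46-60", "responded": False},
    {"age_group": "46-60", "responded": False},
    {"age_group": "46-60", "responded": True},
    {"age_group": "46-60", "responded": False},
]

rates = response_rate_by_group(mailings, "age_group")
print(rates)
```

With realistic sample sizes, such rates would be compared across several characteristics before the target group of the next campaign is adjusted.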

In principle, very promising potential applications for optimizing analytics can also be found in marketing. However, the complexity of data collection and data protection, together with the partial inaccuracy of the data collected, means that a long-term approach with careful planning of the data collection strategy is required. The issue becomes even more complex if “soft” factors such as brand image also have to be taken into account in the data mining process. In this case, all data carries a certain level of uncertainty, and the corresponding analyses (“What are the most important brand image drivers?” “How can the brand image be improved?”) are better suited to identifying trends than to drawing quantitative conclusions. Nevertheless, within the scope of optimization, it is possible to determine whether an action will have a positive or a negative impact, which makes it possible to identify the direction in which actions should go.

4.6 Sales, after-sales, and retail

The range of potential and existing applications in this area is considerable. Since the “human factor,” embodied by the end customer, plays a crucial role in this context, it is not enough to consider objective data such as sales figures, individual price discounts, and dealer campaigns; subjective customer data, such as customer satisfaction analyses based on surveys or third-party market studies covering brand image, breakdown rates, brand loyalty, and many other subjects, may also be required. At the same time, it is often necessary to procure and integrate a variety of data sources, make them accessible for analysis, and finally analyze them correctly with regard to the potential subjectivity of the evaluations. This process currently depends to a large extent on the expertise of the data scientists conducting the analysis.

The field of sales itself is closely intermeshed with marketing. After all, the ultimate goal is to measure the success of marketing activities in terms of turnover based on sales figures. A combined analysis of marketing activities (including their distribution among individual media, placement frequency, the costs of the respective activities, etc.) and sales can be used to optimize marketing activities in terms of cost and effectiveness, in which case a portfolio-based approach is always used. This means that the main priority is the optimal selection of a portfolio of marketing activities and their scheduling, rather than a focus on a single marketing activity. Accordingly, the problem belongs to the field of multi-criteria decision-making support, in which decisive breakthroughs have been made in recent years thanks to evolutionary algorithms and new, portfolio-based optimization criteria. However, applications in the automotive industry are still very limited in scope.
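The portfolio view of marketing activities can be illustrated with a small multi-criteria example. The sketch below enumerates every subset of activities within a budget and keeps only the non-dominated (Pareto-optimal) portfolios with respect to cost and expected effect. Several assumptions apply: the activity names, costs, and effects are invented; effects are treated as additive, which ignores interactions between activities; and exhaustive enumeration replaces the evolutionary algorithms mentioned in the text, which would be needed for realistic problem sizes.

```python
from itertools import combinations


def pareto_portfolios(activities, budget):
    """Return non-dominated (subset, cost, effect) tuples within `budget`.

    `activities` maps a name to a (cost, expected_effect) pair. A portfolio is
    dominated if another one is no more expensive and no less effective, and
    strictly better in at least one of the two criteria.
    """
    names = list(activities)
    candidates = []
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            cost = sum(activities[n][0] for n in subset)
            effect = sum(activities[n][1] for n in subset)
            if cost <= budget:
                candidates.append((subset, cost, effect))
    front = [
        c for c in candidates
        if not any(
            o[1] <= c[1] and o[2] >= c[2] and (o[1] < c[1] or o[2] > c[2])
            for o in candidates
        )
    ]
    return sorted(front, key=lambda c: c[1])


# Hypothetical activities: name -> (cost, expected additional sales).
activities = {"tv": (50, 120), "online": (20, 60), "mail": (10, 20), "fair": (40, 70)}

front = pareto_portfolios(activities, budget=70)
for subset, cost, effect in front:
    print(subset, cost, effect)
```

The result is a set of trade-offs rather than a single answer, which is the kind of output that decision-making support is meant to present to the person choosing the portfolio.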

Similarly, customer feedback, warranty repairs, and production are potentially intermeshed as well, since customer satisfaction can be used to derive soft factors and warranty repairs can be used to derive hard factors, which can then be combined with vehicle-specific production data and analyzed. In this way, factors can be determined that affect the occurrence of quality defects which are not present or foreseeable at the factory. It then becomes possible to forecast such quality defects and to use optimizing analytics to reduce their occurrence. However, this also requires combining data from completely different areas (production, warranty, and after-sales) in order to make it accessible to the analysis.

In the case of used vehicles, residual value plays a vital role in a company’s fleet or rental car business, since the corresponding volumes of tens of thousands of vehicles are entered directly into the balance sheet as assets with the corresponding residual value. Today, OEMs typically transfer this risk to banks or leasing companies, although these companies may in turn be part of the OEM’s corporate group. Data mining and, above all, predictive analytics can play a decisive role here in the correct valuation of assets, as demonstrated by an American OEM as long as ten years ago. Nonlinear forecasting models can be applied to the company’s own sales data to generate individualized, equipment-specific residual value forecasts at the vehicle level, which are much more accurate than the models currently available as a market standard. This also makes it possible to optimize distribution channels, even to the point of geographically assigning used vehicles to individual auction sites at the vehicle level, in such a way as to maximize a company’s overall sales success on a global basis.

Considering sales operations in greater detail, it is clear that knowledge of each individual customer’s interests and preferences when buying a vehicle or, in the future, when temporarily using available vehicles, is an important factor. The more individualized the knowledge about a customer’s sociodemographic factors, their buying behavior, or even their clicking behavior on the OEM’s website, as well as their driving behavior and individual use of a vehicle, the more accurately it will be possible to address their needs and to provide them with an optimal offer for a vehicle (a suitable model with appropriate equipment features) and its financing.

4.7 Connected customer

Although this term is not yet established as such, it describes a future in which both the customer and their vehicle are fully integrated with state-of-the-art information technology. This aspect is closely linked to marketing and sales issues such as customer loyalty, personalized user interfaces, vehicle behavior in general, and other visionary aspects (see also section 5). With a connection to the Internet and with the help of intelligent algorithms, a vehicle can react to spoken commands and search for answers, and it can, for example, communicate directly with the navigation system and change the destination. Communication between vehicles makes it possible to collect and exchange information on road and traffic conditions that is much more precise and up to date than what can be obtained via centralized systems. One example is the formation of black ice, which is often very localized and temporary, and which can already be detected and communicated to other vehicles as a warning very easily today.

5 Vision

Vehicle development already makes use of “modular systems” that allow components to be used across multiple model series. At the same time, development cycles are becoming increasingly shorter. Nevertheless, the field of virtual vehicle development has not yet seen any effective attempts to use machine learning methods for automatic learning that extracts both knowledge that builds on other historical knowledge and knowledge that applies to more than one model series, in order to assist with future development projects and to organize them more efficiently. This topic is tightly intermeshed with data management, with the complexity of data mining in simulation and optimization data, and with the difficulty of defining a suitable representation of knowledge about vehicle development. In addition, the approach is restricted by the organizational limits of the vehicle development process, which is often still oriented exclusively towards the model being developed. Moreover, due to the heterogeneity of the data (often numerical data, but also images and videos, e.g., of flow fields) and its volume (now in the terabyte range for a single simulation), the issue of “data mining in simulation data” is extremely complex and, at best, the object of tentative research approaches at present.

New services are becoming possible through the use of predictive maintenance. Automatically learned knowledge about individual driving behavior, i.e., annual, seasonal, or even monthly mileage, as well as the type of driving, can be used to forecast the intervals for required maintenance work (brake pads, filters, oil, etc.) with great accuracy. Drivers can use this information to schedule garage appointments in good time. The vision of a vehicle that schedules garage appointments by itself in coordination with the driver’s calendar, which is accessible to the on-board computer via appropriate protocols, is currently more realistic than the often-cited refrigerator that automatically reorders groceries.
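A deliberately simple sketch of such a maintenance forecast is shown below. It assumes that a service is due after a fixed distance and that future usage continues at the average daily mileage observed in the odometer log; the seasonal and driving-style effects mentioned above would require a learned model instead. The function name, the interval, and the readings are hypothetical.

```python
def days_until_service(odometer_log, service_interval_km, last_service_km):
    """Estimate the number of days until the next distance-based service is due.

    `odometer_log` is a list of (day, km) readings ordered by day. The estimate
    extrapolates the average daily mileage between the first and last reading.
    """
    (first_day, first_km), (last_day, last_km) = odometer_log[0], odometer_log[-1]
    km_per_day = (last_km - first_km) / (last_day - first_day)
    remaining_km = last_service_km + service_interval_km - last_km
    if remaining_km <= 0:
        return 0.0  # service is already due
    if km_per_day <= 0:
        return float("inf")  # vehicle is not being driven
    return remaining_km / km_per_day


# Hypothetical readings: (day index, odometer in km).
log = [(0, 40000), (30, 41500), (60, 43000)]

forecast = days_until_service(log, service_interval_km=15000, last_service_km=35000)
print(f"next service due in about {forecast:.0f} days")
```

An estimate of this kind is what a vehicle would need in order to propose an appointment date that can then be matched against the driver’s calendar.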

In conjunction with automatic optimization, the local authorized repair shop, acting as a central coordination point where individual vehicle service requests arrive via an appropriate telematics interface, can optimally schedule service appointments in real time, for example by keeping workloads as evenly distributed as possible while taking staff availability into account.
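The scheduling task can be sketched with a simple greedy heuristic: the longest jobs are placed first, and each job goes to the day with the lowest relative utilization that still has enough free staff hours. This is an assumption-laden illustration rather than an optimal scheduler; the vehicle identifiers, job durations, and daily capacities are invented, and real-time operation would rerun the assignment as new requests arrive.

```python
def schedule_requests(requests, capacity_hours):
    """Assign service requests to days while balancing relative workload.

    `requests` maps a vehicle id to estimated work hours; `capacity_hours` maps
    a day to the staff hours available on that day. Returns (plan, load), where
    plan maps each vehicle to a day, or to None if no day has enough capacity.
    """
    load = {day: 0.0 for day in capacity_hours}
    plan = {}
    # Place the longest jobs first so that short jobs can fill the gaps.
    for vehicle, hours in sorted(requests.items(), key=lambda r: r[1], reverse=True):
        feasible = [
            day for day in capacity_hours
            if capacity_hours[day] > 0 and load[day] + hours <= capacity_hours[day]
        ]
        if not feasible:
            plan[vehicle] = None  # must be deferred to a later day
            continue
        day = min(feasible, key=lambda d: load[d] / capacity_hours[d])
        load[day] += hours
        plan[vehicle] = day
    return plan, load


# Hypothetical service requests (hours of work) and staff capacity per day.
requests = {"A": 4, "B": 3, "C": 2, "D": 2, "E": 1}
capacity = {"Mon": 8, "Tue": 4}

plan, load = schedule_requests(requests, capacity)
print(plan)
print(load)
```

Balancing relative utilization rather than absolute hours is a design choice that lets days with fewer available staff receive proportionally less work.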