Artificial Intelligence (AI) and Machine Learning (ML) technology is developing rapidly. Its possible applications are growing alongside far greater availability of data and advances in computing capability and storage. In fact, if you look around, you can already see machine learning at work across a wide range of industries, from social media and consumer products to financial services and manufacturing.

Machine learning can be a powerful analytical tool for large volumes of data. Combining machine learning with edge computing can filter out much of the noise collected by IoT devices and leave only the relevant data to be analyzed by edge and cloud analytics engines.
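To make the filtering idea concrete, here is a minimal sketch, assuming a stream of numeric sensor readings on the edge device. A simple deadband rule stands in for the machine learning model: a reading is forwarded to the cloud only when it differs from the last forwarded value by more than a threshold. The function name `filter_at_edge` and the threshold value are hypothetical, chosen for illustration.

```python
from typing import Iterable, List, Optional


def filter_at_edge(readings: Iterable[float], threshold: float) -> List[float]:
    """Return only the readings worth sending upstream.

    Hypothetical stand-in for an edge ML filter: a reading is forwarded
    when it differs from the last forwarded value by more than `threshold`.
    """
    forwarded: List[float] = []
    last: Optional[float] = None
    for value in readings:
        # Always forward the first reading, then only significant changes.
        if last is None or abs(value - last) > threshold:
            forwarded.append(value)
            last = value
    return forwarded


# Illustrative temperature readings from a hypothetical IoT sensor.
readings = [20.0, 20.1, 20.05, 20.2, 23.5, 23.6, 23.4, 30.0]
print(filter_at_edge(readings, threshold=0.5))
```

With a threshold of 0.5, the eight readings shrink to three (`[20.0, 23.5, 30.0]`). In a real deployment a trained model would replace the threshold rule, but the bandwidth saving comes from the same place: only data judged significant leaves the device.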

Advances in Artificial Intelligence have given us self-driving cars, speech recognition, effective web search, and facial and image recognition. Machine learning is the foundation of these systems. It is so pervasive today that we probably use it many times a day without realizing it.

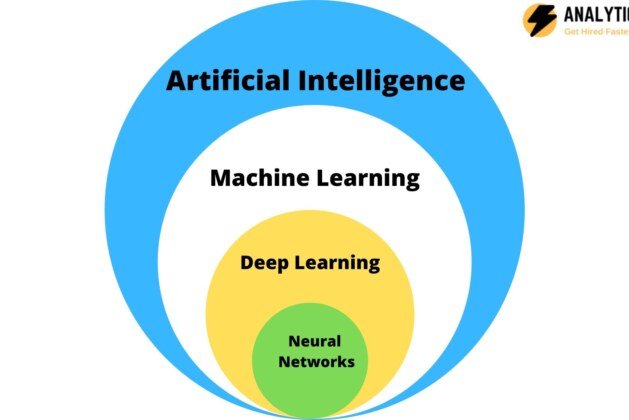

Machine learning algorithms, especially deep neural networks, often produce models that improve prediction accuracy. However, that accuracy comes at the cost of higher memory usage and more computation. A deep learning model consists of layers of computation: in each layer a large number of parameters are applied to the input, and the result is passed on to the next layer, iteratively. The higher the dimensionality of the data (e.g., a high-resolution image), the greater the computational demand. GPU farms in the cloud are commonly used to meet these computational requirements.
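The link between input size and compute can be seen with a little arithmetic. The sketch below counts the parameters and multiply-accumulate operations (MACs) of a single 2D convolution layer, assuming stride 1 and "same" padding so the output keeps the input's height and width. The helper `conv2d_cost` and the layer sizes are illustrative assumptions, not taken from any particular model.

```python
from typing import Tuple


def conv2d_cost(height: int, width: int, c_in: int, c_out: int, k: int) -> Tuple[int, int]:
    """Return (parameters, MACs) for one k x k convolution layer.

    Assumes stride 1 and 'same' padding, so the output is height x width x c_out.
    """
    params = k * k * c_in * c_out + c_out          # weights plus one bias per filter
    macs = height * width * k * k * c_in * c_out   # one k*k*c_in dot product per output value
    return params, macs


# Hypothetical first layer: 64 filters of size 3x3 over an RGB image.
for side in (224, 448):
    params, macs = conv2d_cost(side, side, c_in=3, c_out=64, k=3)
    print(f"{side}x{side}: {params:,} parameters, {macs:,} MACs")
```

Doubling the image side leaves the parameter count unchanged (1,792) but quadruples the work, from 86,704,128 to 346,816,512 MACs for this one layer. Repeated across dozens of layers, this is one reason high-resolution inputs are usually processed on GPUs.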

Edge computing is a distributed computing paradigm that brings computation and data storage closer to where they are needed, to improve response times and save bandwidth. Although edge computing addresses latency, scalability, connectivity, and security challenges, the computational resource requirements of deep learning models are hard to meet on small edge devices.

Many organizations today store their data in the cloud. This means data has to travel to a central data center, often thousands of miles away, for model inference before the resulting insight can be sent back to the originating device. This is a critical and potentially dangerous problem in cases such as fall detection, where time is of the essence.

Latency is what is driving many organizations to move processing from the cloud to the edge today. With intelligence at the edge, also called Edge AI or edge machine learning, data is processed locally by algorithms stored on a hardware device instead of by algorithms located in the cloud. This enables real-time operation, and it also helps significantly reduce the power consumption and security vulnerabilities associated with processing data in the cloud.

Before choosing the hardware for edge devices, it is important to establish the performance metrics that matter for inference. At a high level, the key performance metrics for machine learning at the edge are latency, throughput, device power consumption, and accuracy. Latency is the time it takes to process a single data point, throughput is the number of inference calls per second, and accuracy is the confidence level of the prediction output required by the use case.
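Here is a minimal sketch of how three of these metrics could be measured in software, assuming a Python callable that performs one inference per call. `benchmark` and `toy_model` are hypothetical names: `toy_model` is a trivial stand-in for a real network. Power usage is omitted because it normally requires hardware-level instrumentation.

```python
import time
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple


def benchmark(infer: Callable[[Sequence[float]], int],
              samples: List[Tuple[Sequence[float], int]]) -> Dict[str, float]:
    """Measure mean latency, throughput, and accuracy over labeled samples."""
    latencies: List[float] = []
    correct = 0
    start = time.perf_counter()
    for x, label in samples:
        t0 = time.perf_counter()
        prediction = infer(x)                        # one inference call
        latencies.append(time.perf_counter() - t0)   # per-sample latency in seconds
        correct += int(prediction == label)
    total = time.perf_counter() - start
    return {
        "mean_latency_ms": 1000 * mean(latencies),
        "throughput_per_s": len(samples) / total,    # inference calls per second
        "accuracy": correct / len(samples),          # fraction of correct predictions
    }


def toy_model(x: Sequence[float]) -> int:
    # Hypothetical stand-in classifier: predicts 1 when the inputs sum above zero.
    return int(sum(x) > 0)


samples = [([0.5, -0.2], 1), ([-1.0, 0.3], 0), ([0.1, 0.1], 1), ([-0.4, 0.2], 1)]
print(benchmark(toy_model, samples))
```

Because samples are processed one at a time here, throughput is roughly the reciprocal of mean latency. On real accelerators, batching several inputs per call can raise throughput at the cost of higher per-request latency, which is why the two metrics are tracked separately.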

Researchers have found that reducing the number of parameters in deep neural network models helps decrease the computational resources needed for model inference. Some well-known architectures that use such techniques with minimal (or no) accuracy degradation are YOLO, MobileNets, Single Shot Detector (SSD), and SqueezeNet. Many of these pre-trained models are available to download and use in open-source platforms such as PyTorch or TensorFlow.
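One parameter-reduction technique, used by MobileNets, replaces a standard convolution with a depthwise separable convolution: a per-channel k x k depthwise filter followed by a 1 x 1 pointwise convolution that mixes channels. The sketch below compares weight counts (biases ignored) for an illustrative layer; the function names and layer sizes are assumptions chosen for the example.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    # Every output channel has its own k x k filter spanning all input channels.
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that combines channels
    return depthwise + pointwise


k, c_in, c_out = 3, 128, 256  # illustrative layer size
std = standard_conv_params(k, c_in, c_out)
sep = separable_conv_params(k, c_in, c_out)
print(std, sep, round(std / sep, 2))
```

For this layer the separable version needs about 8.7 times fewer weights (33,920 versus 294,912). With stride 1, the multiply-accumulate count shrinks by the same factor, because each weight is applied once at every output position.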

A new generation of purpose-built accelerators is emerging as startups and chipmakers work to speed up and optimize the workloads involved in AI and machine learning projects, from training to inference, making them faster, more scalable, more power-efficient, and less expensive. These accelerators promise to take edge devices to a new level of performance. One way they achieve this is by relieving the edge device's central processing unit of the complex, heavy mathematical work involved in running deep learning models.

Looking at history and where we are today, the progress of edge machine learning appears relentless and fast. As further advances continue to unfold, get ready for the impact and make sure you are prepared to take advantage of the opportunities this technology brings.