Decision tree analysis is a basic predictive modeling tool with applications across many different areas. Decision trees are usually built by an algorithm that identifies ways to split a data set based on different conditions. They are among the most widely used and useful techniques for supervised learning: a non-parametric method that can be used for both classification and regression. The goal is to create a model that predicts the value of a target variable by learning simple decision rules inferred from the data features.

The decision rules take the form of if-then-else statements. The deeper the tree, the more complex the rules and the more closely the model fits the training data.
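To illustrate, a small tree can be written directly as nested if-then-else statements. The function below is a hypothetical example: the attribute names (`outlook`, `humidity`, `windy`), the humidity threshold, and the labels are invented for illustration and are not taken from the figures.

```python
def play_tennis(outlook: str, humidity: float, windy: bool) -> str:
    """A hypothetical depth-2 decision tree written as if-then-else rules."""
    if outlook == "sunny":
        # Second test on the sunny branch: a humidity threshold (assumed value)
        if humidity > 75:
            return "no"
        else:
            return "yes"
    elif outlook == "overcast":
        # Leaf reached after a single test
        return "yes"
    else:  # outlook == "rainy"
        # Second test on the rainy branch: wind
        if windy:
            return "no"
        else:
            return "yes"


print(play_tennis("sunny", 80, False))  # no
```

Each extra level of nesting adds another condition to a rule, which is why deeper trees express more complicated rules.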

Terminology used in decision trees:

- Instances – the inputs, each described by a set of attribute values.

- Attributes – the features that describe an instance.

- Concept – a function that maps an input to an output.

- Target Concept – the function we are trying to learn, i.e. the one that gives the actual answer for each input.

- Candidate Concept – a concept that we think might be the target concept.

- Hypothesis Class – the set of all possible functions the learner can choose from.

- Sample – a set of inputs paired with their labels, also known as the training set.

- Testing Set – a set of labeled inputs used to test the candidate concept and measure its performance.
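These terms can be made concrete with a toy setup in which every instance has two Boolean attributes. In this sketch (an illustrative assumption, not from the original), the hypothesis class is all 16 Boolean functions of two inputs, the target concept is AND, and the candidate concepts are the hypotheses that agree with a small labeled sample.

```python
from itertools import product

# Every possible instance: a pair of Boolean attribute values (x1, x2).
INSTANCES = list(product([0, 1], repeat=2))

# Hypothesis class: all 2**4 = 16 Boolean functions of two inputs,
# each represented as a lookup table mapping instance -> output.
HYPOTHESIS_CLASS = [
    dict(zip(INSTANCES, outputs))
    for outputs in product([0, 1], repeat=len(INSTANCES))
]


def target_concept(x):
    """The (normally unknown) function we want to learn; here, Boolean AND."""
    return x[0] & x[1]


# Sample (training set): inputs paired with labels from the target concept.
sample = [(x, target_concept(x)) for x in [(0, 0), (1, 1)]]

# Candidate concepts: hypotheses that agree with every labeled example in the sample.
candidates = [h for h in HYPOTHESIS_CLASS if all(h[x] == y for x, y in sample)]

# Testing set: labeled inputs not seen in the sample, used to measure performance.
testing_set = [(x, target_concept(x)) for x in [(0, 1), (1, 0)]]


def accuracy(h, data):
    """Fraction of labeled examples that hypothesis h gets right."""
    return sum(h[x] == y for x, y in data) / len(data)


print(len(HYPOTHESIS_CLASS), len(candidates))            # 16 4
print([accuracy(h, testing_set) for h in candidates])    # [1.0, 0.5, 0.5, 0.0]
```

All four candidates fit the sample perfectly, but only one of them matches the target concept on the testing set, which is why a held-out testing set is needed to tell candidates apart.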

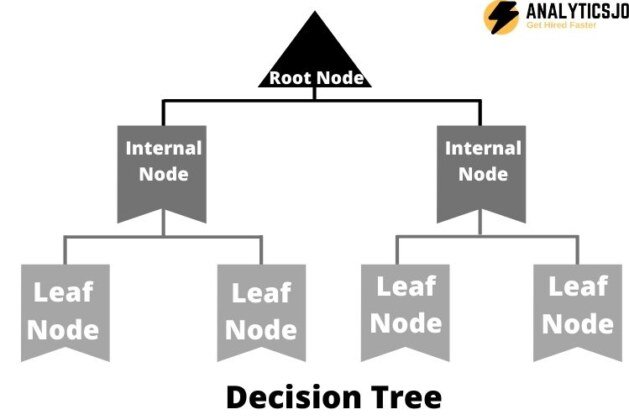

A decision tree is a tree-like graph in which each internal node represents a point where an attribute is tested, each edge represents an answer to that test, and each leaf represents the actual output or class label. Decision trees can make non-linear decisions even though each individual split is a simple linear decision surface.

Decision trees classify instances by sorting them down the tree from the root to a leaf node, and the leaf node provides the classification of the instance. Each internal node in the tree acts as a test on some attribute, and each edge descending from that node corresponds to one of the possible outcomes of the test. This process is recursive and is repeated for the subtree rooted at each new node.
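The classification procedure above can be sketched as a small recursive function. The `Node` class and the example tree (attributes `outlook` and `windy`) are hypothetical, chosen only to show how an instance is sorted from the root to a leaf.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class Node:
    """A decision tree node. Internal nodes test `attribute`; leaves carry `label`."""
    attribute: Optional[str] = None                             # attribute tested here
    children: Dict[Any, "Node"] = field(default_factory=dict)   # one edge per outcome
    label: Optional[Any] = None                                 # class label at a leaf

    def is_leaf(self) -> bool:
        return not self.children


def classify(node: Node, instance: Dict[str, Any]) -> Any:
    """Sort an instance down the tree, recursing into the subtree for each outcome."""
    if node.is_leaf():
        return node.label                   # the leaf provides the classification
    outcome = instance[node.attribute]      # apply this node's attribute test
    return classify(node.children[outcome], instance)  # follow the matching edge


# Hypothetical tree: test "outlook" at the root, then "windy" on the rainy branch.
tree = Node("outlook", {
    "sunny": Node(label="no"),
    "overcast": Node(label="yes"),
    "rainy": Node("windy", {True: Node(label="no"), False: Node(label="yes")}),
})

print(classify(tree, {"outlook": "rainy", "windy": False}))  # yes
```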

Constructing a Decision Tree:

A tree can be “learned” by splitting the source set into subsets based on an attribute value test. This process is repeated on each derived subset in a recursive manner called recursive partitioning. The recursion stops when all examples in the subset at a node have the same value of the target variable, or when splitting no longer adds value to the predictions. Building a decision tree classifier does not require any domain knowledge or parameter setting, which makes it appropriate for exploratory knowledge discovery. Decision trees can handle high-dimensional data, and decision tree classifiers typically achieve good accuracy. Decision tree induction is a typical inductive approach to learning classification knowledge.
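Recursive partitioning can be sketched as a short recursive function. This is a minimal ID3-style sketch under assumptions the original does not state: attributes are categorical, the split criterion is information gain (reduction in entropy), and "no longer adds value" is read as zero information gain. Other algorithms, such as CART, use different criteria (for example, Gini impurity) and binary splits. The function names and the returned tuple format are hypothetical.

```python
from collections import Counter
from math import log2
from typing import Any, Dict, List

Row = Dict[str, Any]


def entropy(labels: List[Any]) -> float:
    """Shannon entropy of a list of class labels, in bits."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(rows: List[Row], attr: str, target: str) -> float:
    """Reduction in entropy of `target` obtained by splitting `rows` on `attr`."""
    before = entropy([r[target] for r in rows])
    after = 0.0
    for value in {r[attr] for r in rows}:
        subset = [r[target] for r in rows if r[attr] == value]
        after += len(subset) / len(rows) * entropy(subset)
    return before - after


def build_tree(rows: List[Row], attrs: List[str], target: str):
    """Recursive partitioning: returns a leaf label or (attr, {value: subtree})."""
    labels = [r[target] for r in rows]
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when the subset is pure or no attributes remain to split on.
    if len(set(labels)) == 1 or not attrs:
        return majority
    best = max(attrs, key=lambda a: information_gain(rows, a, target))
    # Stop when the best split adds no value (tolerance guards float noise).
    if information_gain(rows, best, target) < 1e-12:
        return majority
    remaining = [a for a in attrs if a != best]
    branches = {}
    for value in {r[best] for r in rows}:
        subset = [r for r in rows if r[best] == value]
        branches[value] = build_tree(subset, remaining, target)  # recurse on subset
    return (best, branches)


data = [
    {"outlook": "sunny", "windy": False, "play": "no"},
    {"outlook": "sunny", "windy": True, "play": "no"},
    {"outlook": "overcast", "windy": False, "play": "yes"},
    {"outlook": "overcast", "windy": True, "play": "yes"},
    {"outlook": "rainy", "windy": False, "play": "yes"},
    {"outlook": "rainy", "windy": True, "play": "no"},
]
print(build_tree(data, ["outlook", "windy"], "play"))
```

On this made-up data, `outlook` has the higher information gain and is chosen at the root; only the `rainy` subset is still mixed, so it is split again on `windy`, and every other branch ends in a pure leaf.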

Simple classification examples with decision trees:

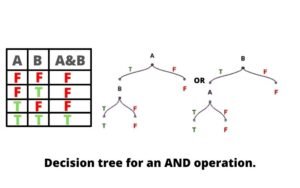

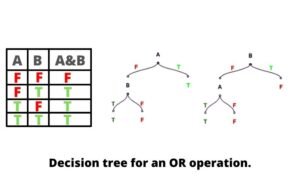

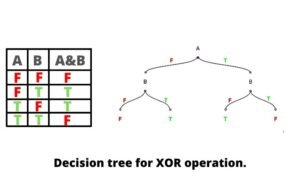

A decision tree can represent any Boolean function of its input attributes. To make this easier to understand, let’s analyze decision trees for three Boolean functions: AND, OR, and XOR.

- Boolean AND

In the figure above, we can see that there are two candidate concepts for a decision tree that computes the AND operation. Similarly, we can build decision trees that compute the Boolean OR and XOR operations.

- Boolean OR

- Boolean XOR
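The three Boolean examples can be checked against their truth tables in a few lines. The tuple encoding below is a hypothetical representation chosen for brevity: a leaf is `0` or `1`, and an internal node is `(attribute_index, subtree_if_0, subtree_if_1)`, where index 0 is input A and index 1 is input B.

```python
from itertools import product

# Hypothetical encoding: leaf = 0 or 1; internal node = (attr_index, if_0, if_1).
AND_TREE = (0, 0, (1, 0, 1))            # A=0 -> 0; A=1 -> test B
AND_TREE_B_FIRST = (1, 0, (0, 0, 1))    # another valid AND tree that tests B first
OR_TREE = (0, (1, 0, 1), 1)             # A=1 -> 1; A=0 -> test B
XOR_TREE = (0, (1, 0, 1), (1, 1, 0))    # both branches must test B


def evaluate(tree, x):
    """Walk from the root to a leaf, following the branch for each tested bit."""
    while isinstance(tree, tuple):
        attr, if_zero, if_one = tree
        tree = if_one if x[attr] else if_zero
    return tree


checks = [
    ("AND", AND_TREE, lambda a, b: a & b),
    ("AND (B first)", AND_TREE_B_FIRST, lambda a, b: a & b),
    ("OR", OR_TREE, lambda a, b: a | b),
    ("XOR", XOR_TREE, lambda a, b: a ^ b),
]
for name, tree, fn in checks:
    ok = all(evaluate(tree, (a, b)) == fn(a, b) for a, b in product([0, 1], repeat=2))
    print(name, ok)  # each line prints True
```

The AND and OR trees can stop early on one branch, while XOR has to test both inputs on every path, because neither input alone determines the output.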

Strengths and Weaknesses of Decision Trees

Strengths

- It can generate understandable rules.

- It performs classification with little computation.

- It can handle both continuous and categorical variables.

- It gives a clear picture of which fields are most important for prediction or classification.

Weaknesses

- Decision trees are less appropriate for estimation tasks where the goal is to predict the value of a continuous attribute.

- Decision trees are prone to errors in classification problems with many classes and a relatively small number of training examples.

- Decision trees can be computationally expensive to train. At every node, each candidate splitting field must be sorted before its best split can be found. Some algorithms use combinations of fields, which requires a search for optimal combining weights. Pruning algorithms can also be expensive, because many candidate subtrees must be generated and compared.
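To see where the sorting cost comes from, the sketch below finds the best threshold for a single numeric field. It assumes binary labels (0/1) and uses Gini impurity as the split criterion, which is one common choice (used by CART) rather than something specified above; the function names are hypothetical. A tree learner would repeat this search for every candidate field at every node.

```python
from typing import List, Optional, Tuple


def gini(pos: int, n: int) -> float:
    """Gini impurity of a binary-labeled group with `pos` positives out of `n`."""
    if n == 0:
        return 0.0
    p = pos / n
    return 2 * p * (1 - p)


def best_threshold(values: List[float], labels: List[int]) -> Tuple[Optional[float], float]:
    """Find the threshold on one numeric field that minimizes weighted Gini impurity.

    Sorting costs O(n log n); the scan afterwards is O(n) because class counts
    are updated incrementally as each example moves to the left side.
    """
    pairs = sorted(zip(values, labels))          # the per-field sort
    n = len(pairs)
    total_pos = sum(labels)
    left_pos = 0
    best_t, best_score = None, gini(total_pos, n)  # baseline: no split
    for i in range(1, n):
        left_pos += pairs[i - 1][1]              # example i-1 joins the left side
        if pairs[i - 1][0] == pairs[i][0]:
            continue                             # cannot split between equal values
        score = (i * gini(left_pos, i)
                 + (n - i) * gini(total_pos - left_pos, n - i)) / n
        if score < best_score:
            best_t = (pairs[i - 1][0] + pairs[i][0]) / 2  # midpoint threshold
            best_score = score
    return best_t, best_score


print(best_threshold([2.0, 7.5, 1.0, 9.0, 3.5], [0, 1, 0, 1, 0]))  # (5.5, 0.0)
```

Without sorting, evaluating every possible threshold would require rescanning the data for each one, so the sort is what keeps the per-field search tractable; it is still paid once per field at every node, which adds up for large trees.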