If you want to learn one of the most in-demand programming languages in the world… you’re in the right place.

By the end of this guide, you’ll have a strong foundation, even if you’ve never programmed before. Let’s jump right in!

Table of Contents

- Install Anaconda

- Open Jupyter Notebook

- Start New Notebook

- Try Math Calculations

- Import Data Science Libraries

- Import Your Dataset

- Explore Your Data

- Clean Your Dataset

- Engineer Features

- Train a Simple Model

- Next Steps

Step 1: Install Anaconda

We strongly recommend installing the Anaconda Distribution, which includes Python, Jupyter Notebook (a lightweight IDE very popular among data scientists), and all the major libraries.

It’s the closest thing to a one-stop-shop for all your setup needs.

Simply download Anaconda with the latest version of Python 3 and follow the installation wizard.

Step 2: Start Jupyter Notebook

Jupyter Notebook is our favorite IDE (integrated development environment) for data science in Python. An IDE is just a fancy name for an advanced text editor for coding.

(As an analogy, think of Excel as an “IDE for spreadsheets.” For example, it has tabs, plugins, keyboard shortcuts, and other useful extras.)

The good news is that Jupyter Notebook already came installed with Anaconda. Three cheers for synergy! To open it, run the following command in the Command Prompt (Windows) or Terminal (Mac/Linux):

```
jupyter notebook
```

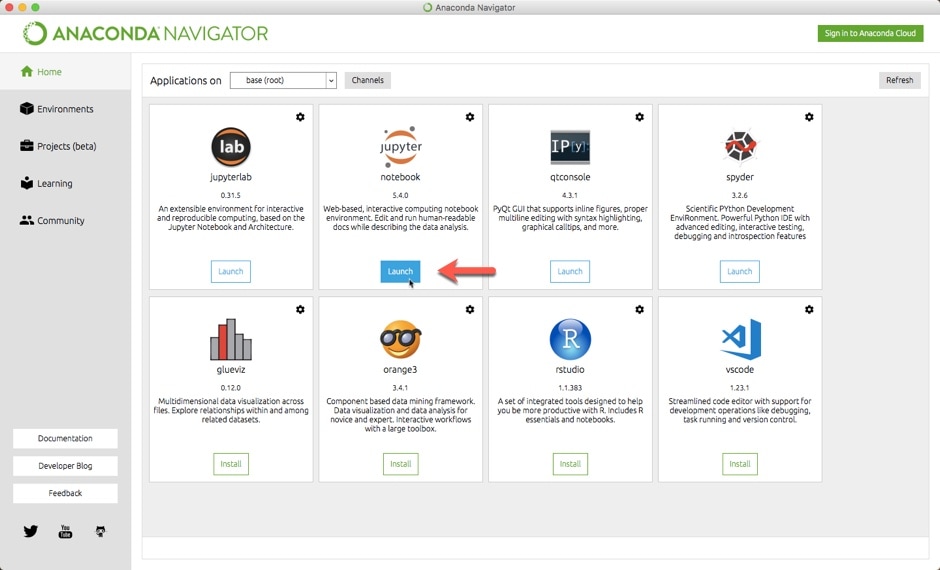

Alternatively, you can open Anaconda’s “Navigator” application, and then launch the notebook from there:

You should see this dashboard open in your browser:

Note: If you get a message about “logging in,” simply follow the instructions in the browser. You’ll just need to paste in a token from the Command Prompt/Terminal.

Step 3: Open New Notebook

First, navigate to the folder you’d like to save the notebook in. For beginners, we recommend having a single “Data Science” folder that you can use to store your datasets as well.

Then, open a new notebook by clicking “New” in the top right. It will open in your default web browser. You should see a blank canvas brimming with potential:

Step 4: Try Math Calculations

Next, let’s write some code. Python is awesome because it’s extremely versatile. For example, you can use Python as a calculator:

```python
import math

# Area of circle with radius 5
25*math.pi

# Two to the fourth
2**4

# Length of triangle's hypotenuse
math.sqrt(3**2 + 4**2)
```

(To run a code cell, click into the cell so that it’s highlighted and then press Shift + Enter on your keyboard.)

A few important notes:

- First, we imported Python’s math module, which provides convenient functions (e.g. math.sqrt()) and math constants (e.g. math.pi).

- Second, 2*2*2*2… or “two to the fourth”… is written as 2**4. If you write 2^4, you’ll get a very different output!

- Finally, the text following a “hashtag” (#) is called a comment. Just as the name implies, these text snippets are not run as code.
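To see why 2^4 gives a “very different output,” here is a tiny sketch. In Python, ^ is the bitwise XOR operator on integers, not exponentiation. The variable names are just for illustration.

```python
# ** is exponentiation; ^ is bitwise XOR on integers
power = 2 ** 4   # 2 * 2 * 2 * 2
xor = 2 ^ 4      # binary 010 XOR 100 = 110

print(power)  # 16
print(xor)    # 6
```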

In addition, Jupyter Notebook will only display the output from the final line of code in a cell.

To print multiple calculations in one output, wrap each of them in the print(…) function.

```python
# Area of circle with radius 5
print( 25*math.pi )

# Two to the fourth
print( 2**4 )

# Length of triangle's hypotenuse
print( math.sqrt(3**2 + 4**2) )
```

Another useful tip is that you can store things in objects (i.e. variables). See if you can follow what this code is doing:

```python
message = "The length of the hypotenuse is"
c = math.sqrt(3**2 + 4**2)
print( message, c )
```

By the way, in the above code, the message was surrounded by quotes, which means it’s a string. A string is any sequence of characters surrounded by single or double quotes.
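As a quick illustration of that definition, the sketch below builds the same string with single and double quotes and checks that Python treats them identically. The variable names and example text are made up for this illustration.

```python
# Single and double quotes produce the same kind of object
single = 'hypotenuse'
double = "hypotenuse"
same = single == double

# Double quotes are handy when the text itself contains an apostrophe
quoted = "It's a string"
length = len(quoted)

print(same)          # True
print(type(quoted))  # <class 'str'>
print(length)        # 13
```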

Now, we’re not going to dive much further into the weeds right now.

Contrary to popular belief, you won’t actually need to learn an immense amount of programming to use Python for data science. That’s because most of the data science and machine learning functionality you’ll need is already packaged into libraries, or bundles of code that you can import and use out of the box.

Step 5: Import Data Science Libraries

Think of Jupyter Notebook as a big playground for Python. Now that you’ve set this up, you can play to your heart’s content. Anaconda has almost all of the libraries you’ll need, so testing a new one is as simple as importing it.

Which brings us to the next step… Let’s import those libraries! In a new code cell (Insert > Insert Cell Below), write the following code:

```python
import pandas as pd

import matplotlib.pyplot as plt
%matplotlib inline

from sklearn.linear_model import LinearRegression
```

(It might take a while to run this code the first time.)

So what did we just do? Let’s break it down.

- First, we imported the Pandas library. We also gave it the alias of pd. This means we can invoke the library with pd. You’ll see this in action shortly.

- Next, we imported the pyplot module from the matplotlib library. Matplotlib is the main plotting library for Python. There’s no need to bring in the entire library, so we just imported a single module. Again, we gave it an alias of plt.

- Oh yeah, and the %matplotlib inline command? That’s Jupyter Notebook specific. It simply tells the notebook to display our plots inside the notebook, instead of in a separate window.

- Finally, we imported a basic linear regression algorithm from scikit-learn. Scikit-learn has a buffet of algorithms to choose from. At the end of this guide, we’ll point you to a few resources for learning more about these algorithms.

There are plenty of other great libraries available for data science, but these are the most commonly used.
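If the three import styles above feel abstract, here is a minimal sketch of the same mechanics. It uses Python’s built-in math module as a stand-in (our assumption, so it runs without any extra libraries); the alias m is made up for illustration.

```python
import math                # plain import
import math as m           # import with an alias, like "import pandas as pd"
from math import sqrt      # import a single name, like "from sklearn.linear_model import ..."

# The alias is just another name for the very same module
print(m is math)     # True

# All three spellings call the same function
print(math.sqrt(16)) # 4.0
print(m.sqrt(16))    # 4.0
print(sqrt(16))      # 4.0
```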

Step 6: Import Your Dataset

Next, let’s import a dataset. Pandas has a suite of IO tools that allow you to read and write data. You can work with formats such as CSV, JSON, Excel, SQL databases, or even raw text files.

For this tutorial, we’ll be reading from an Excel file that has data on the energy efficiency of buildings. Don’t worry – even if you don’t have Excel installed, you can still follow along.

First, download the dataset and put it into the same folder as your current Jupyter notebook.

Then, use the following code to read the file and store its contents in a df object (“df” is short for dataframe).

```python
df = pd.read_excel( 'ENB2012_data.xlsx' )
```

If you saved the dataset in a subfolder, then you would write the code like this instead:

```python
df = pd.read_excel( 'subfolder_name/ENB2012_data.xlsx' )
```

Nice! You’ve successfully imported your first dataset using Python.

To see what’s inside, just run this code in your notebook (it displays the first 5 observations from the dataframe):

```python
df.head()
```

For extra practice on this step, feel free to download a few others from our hand-picked list of datasets. Then, try using other IO tools (such as pd.read_csv()) to import datasets with different formats.
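To get a feel for pd.read_csv() before downloading anything, here is a small sketch that reads CSV text held in memory. The CSV contents and the names csv_text and rooms are made up for illustration; with a real file you would pass its path instead of the io.StringIO object.

```python
import io
import pandas as pd

# Hypothetical CSV contents, invented for this example
csv_text = """room,area_m2
kitchen,12.5
bedroom,15.0
bathroom,6.0
"""

# read_csv accepts a file path or any file-like object
rooms = pd.read_csv(io.StringIO(csv_text))

print(rooms.head())
print(rooms.shape)  # (3, 2)
```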

Step 7: Explore Your Data

In step 6, we already saw some example observations from the dataframe. Now we’re ready to look at plots.

We won’t go through the entire exploratory analysis phase right now. Instead, let’s just take a quick glance at the distributions of our variables. We’ll start with the “X1” variable, which refers to “Relative Compactness” as described in the file’s data dictionary.

```python
plt.hist( df.X1 )
```

As you’ve probably guessed, plt.hist() produces a histogram.

In general, these types of functions will have different parameters that you can pass into them. Those parameters control things like the color scheme, the number of bins used, the axes, and so on.

There’s no need to memorize all of the parameters. Instead, get in the habit of checking the documentation page for available options. For example, the documentation page of plt.hist() indicates that you can change the number of bins in the histogram with the bins parameter.

That means we can change the number of bins like so:

```python
plt.hist( df.X1, bins=5 )
```
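If you’re curious what bins actually changes, the sketch below runs plt.hist() on a handful of made-up numbers and inspects what it returns: the count in each bin and the bin edges. The values list is invented for illustration, and the matplotlib.use("Agg") line is our assumption for running this as a plain script; skip it inside Jupyter.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: non-interactive backend for scripts; omit in a notebook
import matplotlib.pyplot as plt

# Made-up values, not taken from the real dataset
values = [0.62, 0.64, 0.66, 0.69, 0.71, 0.74, 0.76, 0.79, 0.82, 0.86, 0.90, 0.98]

# plt.hist returns the bin counts, the bin edges, and the drawn bars
counts, edges, patches = plt.hist(values, bins=5)

print(len(counts))  # 5 bins
print(len(edges))   # 6 edges (one more than the number of bins)
```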

For now, we don’t recommend trying to get too fancy with matplotlib. It’s a powerful, but complex library.

Instead, we prefer a library that’s built on top of matplotlib called seaborn. If matplotlib “tries to make easy things easy and hard things possible”, seaborn tries to make a well-defined set of hard things easy as well.

Step 8: Clean Your Dataset

After we explore the dataset, it’s time to clean it. Fortunately, this dataset is pretty clean already because it was originally collected from controlled simulations.

Even so, for illustrative purposes, let’s at least check for missing values. You can do so with just one line of code (but there’s a ton of cool stuff packed into this one line).

```python
df.isnull().sum()
```

Let’s unpack that:

- df is where we stored the data. It’s called a “dataframe,” and it’s also a Python object, like the variables from Step 4.

- .isnull() is called a method, which is just a fancy term for a function attached to an object. This method looks through our entire dataframe and labels any cell with a missing value as True. (Tip: Try running df.head().isnull() and see what you get!)

- Finally, .sum() is a method that sums all of the True values across each column. Well… technically, it sums any number, while treating True as 1 and False as 0.

You can learn more about .isnull() and .sum() on the documentation page for Pandas dataframes.
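Since the real dataset has no missing values, here is a small sketch on a toy dataframe that does. It shows the two steps separately: the True/False mask from .isnull(), then the per-column counts from .sum(). The toy values are made up for illustration.

```python
import pandas as pd

# Toy dataframe with two deliberately missing cells (None becomes NaN)
toy = pd.DataFrame({
    "X1": [0.98, None, 0.86],
    "X2": [514.5, 563.5, None],
    "Y1": [15.55, 20.84, 21.46],
})

# Step 1: True wherever a value is missing
mask = toy.isnull()
print(mask)

# Step 2: summing each column counts the True values
missing_per_column = mask.sum()
print(missing_per_column)
```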

Step 9: Engineer Features

Feature engineering is typically where data scientists spend the most time. It’s where you can use “domain knowledge” to create new input features (i.e. variables) for your models, which can drastically improve their performance.

Let’s start with a low-hanging fruit: creating dummy variables.

Typically, you’ll have two types of features: numerical and categorical…

- Numerical ones are pretty self-explanatory… For example, “number of years of education” would be a numerical feature.

- Categorical features are those that have classes instead of numeric values… For example, “highest education level” would be a categorical feature, and the classes could be: [‘high school’, ‘some college’, ‘college’, ‘some graduate’, ‘graduate’].

In that example, the “highest education level” categorical feature is also ordinal. In other words, its classes have an implied order to them. For example, [‘college’] implies more schooling than [‘high school’].
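For ordinal features like this one, integer codes can be meaningful because the order is real. One way to sketch that in pandas (our choice of tool here, not something the dataset requires) is an ordered Categorical, which assigns each class its position in the list you provide. The education values below are made up.

```python
import pandas as pd

# The classes, listed from least to most schooling
levels = ["high school", "some college", "college", "some graduate", "graduate"]

# Made-up observations
education = pd.Series(["college", "high school", "graduate"])

# An ordered categorical maps each class to its position in `levels`
ordered = pd.Categorical(education, categories=levels, ordered=True)
codes = ordered.codes.tolist()

print(codes)  # [2, 0, 4]
```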

A problem arises when categorical features are not ordinal. In fact, we have this problem in our current dataset.

If you remember from its data dictionary, features X6 (Orientation) and X8 (Glazing Area Distribution) are actually categorical. For example, X6 has four possible values:

```
2 == 'north',
3 == 'east',
4 == 'south',
5 == 'west'
```

However, in the current way it’s encoded (i.e. as four integers), an algorithm will interpret “east” as “1 more than north” and “south” as “2 times the value of north.”

That doesn’t make sense, right?

Therefore, we should create dummy variables for X6 and X8. These are brand new input features that only take the value of 0 or 1. You’d create one dummy per unique class for each feature.

So for X6, we’d create four variables (X6_2, X6_3, X6_4, and X6_5) that represent its four unique classes. We can do this for both X6 and X8 in one fell swoop:

```python
df = pd.get_dummies( df, columns = ['X6', 'X8'] )
```

(Tip: after running this code, try running df.head() again. Is it what you expected?)
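Here is a small sketch of what pd.get_dummies() does, using a toy dataframe with a made-up X6 column so you can see every row at once. We pass dtype=int so the dummies show up as 0/1; depending on your pandas version, the default may display True/False instead.

```python
import pandas as pd

# Toy data: one numeric feature and one integer-coded categorical feature
toy = pd.DataFrame({
    "X1": [0.98, 0.90, 0.86, 0.82],
    "X6": [2, 3, 4, 5],
})

# Replace X6 with one 0/1 column per unique class
encoded = pd.get_dummies(toy, columns=["X6"], dtype=int)

print(list(encoded.columns))  # ['X1', 'X6_2', 'X6_3', 'X6_4', 'X6_5']
print(encoded)
```

Notice that the original X6 column is gone, and each row has exactly one 1 among the four new columns.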

Step 10: Train a Simple Model

Have you been following along? Great!

After just a few short steps, we’re actually ready to train a model. But before we jump in, just a quick disclaimer: we won’t be using model training best practices for now. Instead, this code is simplified to the extreme. But it’s super helpful to start with these “toy problems” as learning tools.

Before we do anything else, let’s split our dataset into separate objects for our input features (X) and the target variable (y). The target variable is simply what we wish to predict with our model.

Let’s predict “Y1,” a building’s “Heating Load.”

```python
# Target variable
y = df.Y1

# Input features
X = df.drop( ['Y1', 'Y2'], axis=1 )
```

In the first line of code, we’re copying Y1 from the dataframe into a separate y object. Then, in the second line of code, we’re copying all of the variables except Y1 and Y2 into the X object.

.drop() is another dataframe method, and it has two important parameters:

- The variables to drop… (e.g. [‘Y1’, ‘Y2’])

- Whether to drop from the index (axis=0) or the columns (axis=1)
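To make the axis parameter concrete, here is a sketch on a toy dataframe (values made up for illustration). It also shows that .drop() returns a new dataframe and leaves the original untouched.

```python
import pandas as pd

toy = pd.DataFrame({
    "X1": [0.98, 0.90, 0.86],
    "Y1": [15.55, 20.84, 21.46],
    "Y2": [21.33, 28.28, 25.38],
})

# axis=1 drops columns by name
without_targets = toy.drop(["Y1", "Y2"], axis=1)

# axis=0 drops rows by index label
without_first_row = toy.drop([0], axis=0)

print(list(without_targets.columns))  # ['X1']
print(without_first_row.shape)        # (2, 3)
print(toy.shape)                      # (3, 3), the original is unchanged
```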

Now we’re ready to train a simple model. It’s a two-step process:

```python
# Initialize model instance
model = LinearRegression()

# Train the model on the data
model.fit(X, y)
```

First, we initialize a model instance. Think of this as a single “version” of the model. For example, if you wanted to train a second model and compare the two, you could initialize a separate instance (e.g. model_2 = LinearRegression()).

Then, we call the .fit() method and pass the input features (X) and target variable (y) as parameters.
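If you’d like to see what .fit() learns, here is a sketch on toy data where we already know the answer: the target is exactly 2 times the input plus 1. The names X_toy, y_toy, and toy_model are made up for this illustration and are separate from the tutorial’s X, y, and model.

```python
import pandas as pd
from sklearn.linear_model import LinearRegression

# Toy data that follows y = 2x + 1 exactly
X_toy = pd.DataFrame({"x": [0.0, 1.0, 2.0, 3.0, 4.0]})
y_toy = 2 * X_toy["x"] + 1

# Same two steps as above: initialize, then fit
toy_model = LinearRegression()
toy_model.fit(X_toy, y_toy)

# The fitted model recovers the slope and intercept
slope = toy_model.coef_[0]
intercept = toy_model.intercept_
print(round(slope, 6), round(intercept, 6))

# Predict for a new input, x = 5
prediction = toy_model.predict(pd.DataFrame({"x": [5.0]}))[0]
print(round(prediction, 6))
```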

And that’s it!

There are many cool mechanics working under the hood, but that’s basically all you need to create a basic model. In fact, you can get predictions and calculate the model’s R^2 like so:

```python
from sklearn.metrics import r2_score

# Get model R^2 (r2_score expects the true values first, then the predictions)
y_hat = model.predict(X)
r2_score(y, y_hat)
```
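For the curious, R^2 is 1 minus the ratio of two sums of squares: the squared prediction errors, divided by the squared deviations of the true values from their mean. The sketch below computes it by hand on made-up numbers and compares it to r2_score. It also shows that the argument order matters, since r2_score takes the true values first.

```python
import numpy as np
from sklearn.metrics import r2_score

# Made-up true values and predictions
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 9.5])

# Sum of squared prediction errors
ss_res = np.sum((y_true - y_pred) ** 2)

# Sum of squared deviations of the true values from their mean
ss_tot = np.sum((y_true - y_true.mean()) ** 2)

manual_r2 = 1 - ss_res / ss_tot
library_r2 = r2_score(y_true, y_pred)   # true values first
swapped_r2 = r2_score(y_pred, y_true)   # wrong order gives a different number

print(manual_r2)   # 0.9625
print(library_r2)
print(swapped_r2)
```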

Congratulations! You are now officially up and running Python for data science.

To be clear, the full data science process is much meatier…

- There’s more exploratory analysis, data cleaning, and feature engineering…

- You’ll want to try other algorithms…

- And you’ll need model training best practices such as train/test splitting, cross-validation, and hyperparameter tuning to prevent overfitting…

But this was a great start, and you’re well on your way to learning the rest!
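As a small taste of the train/test splitting mentioned above, here is a sketch on synthetic data. Everything in it (the random data, the 80/20 split, the variable names) is an assumption made for illustration, not the tutorial’s dataset. The idea is to fit on one part of the data and score on the part the model never saw.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data: y is roughly 3x + 2, plus some random noise
rng = np.random.default_rng(0)
X_toy = rng.uniform(0, 10, size=(100, 1))
y_toy = 3 * X_toy[:, 0] + 2 + rng.normal(0, 1, size=100)

# Hold out 20% of the rows for testing
X_train, X_test, y_train, y_test = train_test_split(
    X_toy, y_toy, test_size=0.2, random_state=0
)

# Fit on the training rows only
holdout_model = LinearRegression().fit(X_train, y_train)

# .score() returns R^2, here measured on unseen rows
test_r2 = holdout_model.score(X_test, y_test)

print(X_train.shape, X_test.shape)  # (80, 1) (20, 1)
print(test_r2)
```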

Next Steps

As mentioned earlier, we’ve just scratched the surface. Even so, hopefully you’ve seen how easy it is to just get started.

And that’s the key!

Just get started, and don’t overthink it. Data science has a lot of moving pieces, so just take it one step at a time.

From here, there are three routes you can go for next steps. You’ll want to do all three of them eventually, but you can take them in any order.

Route #1: Get More Practice

Strike while the iron is hot, and keep practicing.

Route #2: Solidify Python Fundamentals

Route #3: Learn Essential Theory