Artificial intelligence is not a new term or a new technology for researchers. The idea is much older than you might imagine: myths of mechanical men appear in ancient Greek and Egyptian mythology.

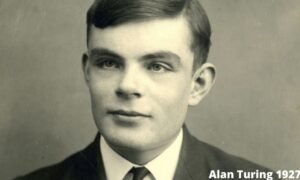

During World War II, Alan Turing, a British computer scientist, worked to crack the Enigma code that German forces used to send messages securely. Turing and his team developed the Bombe machine, which was used to decrypt Enigma messages.

The work on the Bombe and Enigma is often described as laying foundations for machine learning. According to Turing, a machine that can converse with humans without the humans knowing that it is a machine would win the “imitation game” and could be considered “intelligent”.

The Birth of Artificial Intelligence

A model of artificial neurons was proposed by Warren McCulloch and Walter Pitts in 1943. This model is now recognized as the first work in artificial intelligence.

In 1949, Donald Hebb proposed an update rule for modifying the connection strength between neurons. This rule is now called Hebbian learning.
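To make the idea concrete, here is a minimal sketch of a Hebbian-style update, assuming the common textbook form in which a weight grows in proportion to the product of its input and the neuron's output. The function name, the learning rate, and the toy pattern are illustrative assumptions, not anything taken from Hebb's work.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Return new weights after one step of w_i += learning_rate * x_i * y."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]


# Illustrative values only: inputs 0 and 2 are active while the neuron fires.
weights = [0.0, 0.0, 0.0]
pattern = [1, 0, 1]
output = 1

for _ in range(3):
    weights = hebbian_update(weights, pattern, output)

# The connections from the active inputs have strengthened;
# the connection from the inactive input is unchanged.
print(weights)
```

Only the connections whose input was active together with the output are strengthened, which is the "fire together, wire together" intuition usually attached to this rule.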

In 1950, the British mathematician Alan Turing published “Computing Machinery and Intelligence”, which proposed a test of a machine's ability to exhibit intelligent behavior comparable to that of a human. It is now called the Turing test.

In 1955, Allen Newell and Herbert A. Simon created the first artificial intelligence program, named “Logic Theorist”. The program proved 38 of the first 52 theorems in Principia Mathematica and found new, more elegant proofs for some of them.

Then, at the Dartmouth Conference in 1956, the American computer scientist John McCarthy first adopted the term “Artificial Intelligence”. For the first time, AI was established as an academic field. Around the same time, high-level programming languages such as COBOL, LISP, and FORTRAN were invented.

LISP was invented in 1958 by John McCarthy and long remained a favored programming language for artificial intelligence research.

Arthur Samuel coined the term “machine learning” in 1959 when talking about programming a computer to play checkers better than the humans who wrote its code.

The Golden Years: Early Enthusiasm

Innovation in artificial intelligence grew rapidly in the 1960s. New programming languages, robots and automatons, research studies, and films depicting artificially intelligent beings all gained popularity. This heavily highlighted the importance of AI in the second half of the 20th century.

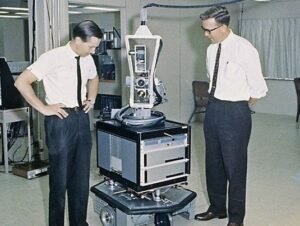

Unimate, an industrial robot created by George Devol in the 1950s, became the first robot to work on a General Motors assembly line in New Jersey. Its responsibilities included transporting die castings from the assembly line and welding the parts onto cars, a task deemed dangerous for humans.

Daniel Bobrow, a computer scientist, created STUDENT, an AI program written in LISP that solved algebra word problems. STUDENT was an early milestone in AI natural language processing.

The Birth of the First Chatbot

The German-American computer scientist Joseph Weizenbaum developed ELIZA, an interactive computer program that could converse in English with a person. His goal was to explore communication between a computer program and a human.

The first general-purpose mobile robot, “Shakey”, also known as the “first electronic person”, was developed by Charles Rosen with the help of 11 others in 1966.

Later in the 1960s, an early natural language computer program called SHRDLU was created by Terry Winograd, a professor of computer science.

The First Artificial Intelligence Winter

Like the 1960s, the 1970s saw accelerated progress, particularly in robots and automatons. However, AI also faced challenges, such as governments reducing their support for AI research.

In 1970, WABOT-1, the first anthropomorphic robot, was built at Waseda University in Japan. The robot had movable limbs and the ability to see and converse.

With MYCIN, artificial intelligence found its way into the medical field for the first time. The expert system, developed by Ted Shortliffe at Stanford University in 1972, was used to support the treatment of illnesses. Expert systems are computer programs that bundle the knowledge of a specialist field using rules, formulas, and a knowledge base. In medicine, they are used to assist with diagnosis and treatment.
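The rule-plus-knowledge-base idea can be illustrated with a small sketch. It assumes a simple forward-chaining loop over if-then rules; the rules and fact names below are hypothetical toy examples and do not reproduce MYCIN's actual rules or its inference strategy.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules: (set of required facts, fact to conclude).
RULES = [
    ({"fever", "cough"}, "suspect_respiratory_infection"),
    ({"suspect_respiratory_infection", "chest_pain"}, "recommend_chest_exam"),
]

derived = forward_chain({"fever", "cough", "chest_pain"}, RULES)
print(sorted(derived))
```

The second rule can only fire after the first has added its conclusion, which shows how chained rules let such a system reach conclusions that no single rule states directly.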

In 1973, the applied mathematician James Lighthill reported to the British Science Research Council that in no part of the field had the discoveries made so far produced the major impact that had been promised. As a result, the British government significantly reduced its support for AI research.

The eXpert CONfigurer (XCON) program, a rule-based expert system that automatically selects components based on the customer's requirements, was developed at Carnegie Mellon University in 1978.

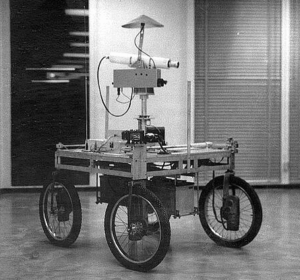

The Stanford Cart, a remote-controlled, TV-equipped mobile robot, was developed by then mechanical engineering graduate student James L. Adams in 1961. In 1979, a mechanical swivel or “slider”, which moved the TV camera from side to side, was added by Hans Moravec, then a Ph.D. student. The cart successfully crossed a chair-filled room without human interference in approximately 5 hours, making it one of the earliest examples of an autonomous vehicle in the history of artificial intelligence.

The Boom of Artificial Intelligence

The rapid growth of artificial intelligence continued in the 1980s. Despite the excitement and the advances, there were warnings of an unavoidable “AI winter”, a period of reduced funding and interest in AI.

In 1980, almost a decade after the launch of WABOT-1, WABOT-2 was built at Waseda University in Japan. This iteration of the WABOT allowed the humanoid to communicate with people, read musical scores, and play music on an electronic organ.

In 1981, the Japanese Ministry of International Trade and Industry allocated $850 million to the Fifth Generation Computer project, whose goal was to develop computers that could converse, translate languages, interpret pictures, and reason like humans.

Electric Dreams

Released in 1984, Electric Dreams is a film about a love triangle between a man, a woman, and a personal computer.

At the Association for the Advancement of Artificial Intelligence (AAAI) meeting in 1984, Roger Schank and Marvin Minsky warned of an AI winter, a period in which funding and interest in artificial intelligence research would decrease. Their prediction came true within 3 years.

In 1986, Mercedes-Benz built and released a driverless van equipped with cameras and sensors under the direction of Ernst Dickmanns. It could drive at up to 55 mph on a road with no other obstacles or human drivers, and it is considered the first driverless van in the history of artificial intelligence.

The computer was given a voice for the first time. Terrence J. Sejnowski and Charles Rosenberg taught their NETtalk program to speak by feeding it sample sentences and phoneme chains. NETtalk could read words and pronounce them correctly, and it could apply what it had learned to words it did not know. It is one of the early artificial neural networks: programs that are fed large datasets and can draw their own conclusions from them. Their structure and function are thereby loosely similar to those of the human brain.

In 1988, the programmer and inventor Rollo Carpenter invented two chatbots, Jabberwacky and Cleverbot, which were released in the 1990s. Jabberwacky was an example of AI in the form of a chatbot that communicated with people, designed to simulate natural human chat in an entertaining, interesting, and humorous manner.

The Second Artificial Intelligence Winter

The second AI winter lasted from 1987 to 1993. Once again, investors and several governments stopped funding AI research because of its high cost and lack of effective results. Expert systems such as XCON proved expensive to maintain.

Artificial Intelligence in the 1990s

In 1990, Rodney Brooks published “Elephants Don’t Play Chess”, proposing a new approach to AI: building intelligent systems, particularly robots, from the ground up and on the basis of ongoing physical interaction with the environment. In his view, the world is its own best model, and the trick is to sense it appropriately and often enough.

In 1995, the computer scientist Richard Wallace developed the chatbot A.L.I.C.E. (Artificial Linguistic Internet Computer Entity), inspired by ELIZA. What set A.L.I.C.E. apart from ELIZA was the addition of natural language sample data collection.

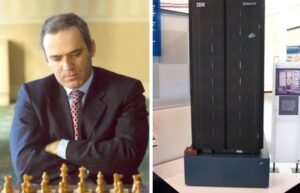

In 1997, the chess computer Deep Blue from IBM defeated the reigning world chess champion, Garry Kasparov, in a match. This is viewed as a historic success in an area previously dominated by humans. Critics, however, fault Deep Blue for winning merely by calculating all possible moves rather than through cognitive intelligence.

In 1998, Dave Hampton and Caleb Chung created Furby, the first domestic or pet robot for children.

In 1999, following Furby, Sony launched AIBO (Artificial Intelligence RoBOt), a $2,000 robotic pet dog designed to “learn” by interacting with its environment, its owners, and other AIBOs. Its features included the ability to understand and respond to more than 100 voice commands and to communicate with its human owner.

Artificial Intelligence from 2000-2010

The new millennium began, and after the fears of Y2K died down, AI continued trending upward. As expected, more artificially intelligent beings were created, along with creative media (film, specifically) about the idea of artificial intelligence and where it might be headed.

The Y2K problem

Also known as the Year 2000 problem, this was a class of computer bugs related to the formatting and storage of electronic calendar data beginning on 01/01/2000. Because so much software had been written in the 1900s, some systems would have trouble adapting to the new year format of 2000. Previously, these automated systems only had to change the last 2 digits of the year; now all 4 digits had to change, a challenge for technology and for those who used it.
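As a rough illustration of why two-digit years caused trouble, the sketch below compares an age calculation on two-digit and four-digit years. The function names and the sample years are made up for this example and do not come from any particular legacy system.

```python
def age_two_digit(birth_yy, current_yy):
    """Age computed from two-digit years, the way the problem is usually described."""
    return current_yy - birth_yy


def age_four_digit(birth_year, current_year):
    """The same calculation using full four-digit years."""
    return current_year - birth_year


print(age_two_digit(65, 99))        # 1965 to 1999 -> 34
print(age_two_digit(65, 0))         # 1965 to 2000 stored as "00" -> -65
print(age_four_digit(1965, 2000))   # -> 35
```

With only two digits stored, the year 2000 looks earlier than 1965, so any arithmetic or sorting based on the year breaks at the rollover.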

Professor Cynthia Breazeal created Kismet, a robot that could recognize and simulate emotions with its face. It was structured like a human face, with eyes, lips, eyelids, and eyebrows.

An artificially intelligent humanoid robot ‘ASIMO’ was released by Honda in 2000.

In 2002, iRobot released Roomba, a robot vacuum that cleans while avoiding obstacles.

The sci-fi film “I, Robot”, directed by Alex Proyas, was released in 2004. Set in the year 2035, it shows humanoid robots serving humankind while one man is vehemently anti-robot because of the outcome of a personal tragedy (determined by a robot).

In 2006, computer science professor Oren Etzioni, along with computer scientists Michele Banko and Michael Cafarella, coined the term “machine reading”, defining it as the unsupervised, autonomous understanding of text.

In 2007, computer science professor Fei-Fei Li and colleagues assembled ImageNet, a database of annotated images intended to aid research on object recognition software.

In 2009, Google began secretly developing a driverless car. By 2014, it had passed Nevada's self-driving test.

Also in 2009, Stats Monkey, a program that could write sports news stories without human intervention, was developed by computer scientists at the Intelligent Information Laboratory at Northwestern University.

AI from 2010 to Date

The past decade has been immensely important for AI innovation. Since 2010, artificial intelligence has become embedded in our daily lives. We use smartphones with voice assistants and computers with “intelligence”. AI is no longer a pipe dream and has not been for some time.

In 2010, Microsoft launched Kinect for Xbox 360, the first gaming device that tracked human body movement using a 3D camera and infrared detection.

In 2010, ImageNet launched the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), an annual AI object recognition competition.

Watson

IBM created Watson, a natural language question-answering computer, in 2011. It won the quiz show Jeopardy!, defeating two former champions, Ken Jennings and Brad Rutter, in a televised game.

In 2011, Apple released Siri, a virtual assistant on Apple iOS. Siri uses a natural language user interface to infer, observe, answer, and recommend things to its human user. It adapts to voice commands and offers an “individualized experience” for each user.

In 2012, Google researchers Jeff Dean and Andrew Ng trained a large neural network running on 16,000 processors to recognize images of cats, despite being given no background information, by showing it 10 million unlabeled images from YouTube.

In 2013, a research team from Carnegie Mellon University released the Never Ending Image Learner (NEIL), a semantic machine learning system that could compare and analyze image relationships.

In 2014, Microsoft released Cortana, a virtual assistant similar to Apple's Siri. Amazon also developed Amazon Alexa, a home assistant that functions as a personal assistant.

In 2015, as artificial intelligence developed further, Elon Musk, Stephen Hawking, and Steve Wozniak, along with 3,000 others, signed an open letter calling for a ban on the development and use of autonomous weapons for war purposes.

Sophia

In 2016, a humanoid robot named Sophia was developed by Hanson Robotics. She is known as the first “robot citizen”. What sets Sophia apart from previous humanoids is her likeness to an actual human being, with her ability to see (image recognition), make facial expressions, and communicate through AI.

Google released Google Home, a smart speaker that uses AI to act as a “personal assistant”, helping users remember tasks, create appointments, and search for information by voice.

In 2017, the Facebook Artificial Intelligence Research lab trained two “dialog agents” (chatbots) to talk to each other in order to learn how to negotiate. However, as the chatbots conversed, they diverged from human language and invented their own language to communicate with one another, showing artificial intelligence to a great degree.

In 2018, Google developed BERT, the first bidirectional, unsupervised language representation that could be used on a variety of natural language tasks through transfer learning.

Alibaba's language processing AI outscored humans on a Stanford reading and comprehension test. It scored “82.44 against 82.30 on a set of 100,000 questions”: a narrow defeat, but a defeat nonetheless.

Samsung

Samsung launched Bixby, a virtual assistant, in 2018. Bixby's capabilities include Voice, where the user can speak to it and ask questions and get suggestions and recommendations; Vision, where Bixby's “seeing” ability is built into the camera app and can identify what the user sees (i.e. object identification, search, purchase, translation, landmark recognition); and Home, where Bixby uses app-based information to help use and interact with the user.

In 2019, OpenAI successfully trained a robot hand named Dactyl, which adapted to the real-world environment to solve a Rubik's Cube. It was trained entirely in simulation, yet it was still able to transfer that knowledge to a new situation.

In May 2019, Samsung developed a system that could transform facial images into video sequences. A generative adversarial network (GAN) was used to produce deepfake videos from a single photograph as input. The Samsung researchers used high-fidelity natural image synthesis to let ML models reproduce realistic human expressions.

In February 2019, OpenAI released a base model called Generative Pre-Training (GPT) to produce synthetic text automatically. The firm gradually released the full version of the model, GPT-2, in November. Given a few sentences, the model picked up the context and generated text on its own. It was trained on more than 8 million web pages, producing content that was hard to identify as synthetic or genuine.

20xx: The near future is Intelligence

Artificial intelligence is advancing at an unprecedented rate, and this trend will continue swinging upward. In the coming years, a few technologies to keep our eyes on are:

- Chatbots

- Natural Language Processing (NLP)

- Machine learning and automated machine learning

- Autonomous vehicles

- Facial recognition

- Upgrading Privacy Policy

- Cloud Computing

- AI Chips