You’ve just completed your first machine learning course and you’re not sure where to begin applying your newfound knowledge. You could start small by playing with the Iris dataset or combing through the Titanic records (and this is probably the first thing that you should do). But what’s more fun than jumping straight in and competing with random strangers on the internet for money?

If you’re reading this article, you probably already know that Kaggle is a data science competition platform where enthusiasts compete in a range of machine learning topics, using structured (numerical and/or categorical data in tabular format) and unstructured data (e.g. text/images/audio), with the aim of securing prize money and a coveted Kaggle gold medal. Although you might find the idea of competing with others to be daunting, it’s important to remember that the goal is always to learn as much as possible and not to focus on results. Through this mindset, you will find that competitions become fun, rewarding, and even addictive.

First steps in choosing a competition

Find a competition that interests you.

This is the most important thing to consider when beginning a new competition. You want to give yourself about two months to tease through a problem and really become comfortable with the ins and outs of the data. That is a long time to spend on a single problem, so choosing a competition that doesn’t genuinely interest you will likely lead to you losing interest and giving up a few weeks in. Joining early in the timeline also gives you much more time to obtain background knowledge and increases the quality of your learning as you tackle stages of the problem alongside the rest of the community.

Focus on learning

If you find yourself frustrated with a competition and think it’s too difficult, focus on learning as much as possible and moving on. You’ll learn much more this way by staying engaged with the material. You may even find yourself making a breakthrough as you stop worrying about your position on the leaderboard!

Try to understand each line of the top scoring kernels

Ask yourself if there are some obvious ways you could look to improve their work. For example, is there a novel feature you could create that might boost their model’s score? Could you slightly tweak the learning rate they’ve used for better performance? Go after the low hanging fruit and don’t try to reinvent the wheel. This mindset will greatly accelerate your learning while ensuring that you don’t find yourself becoming frustrated.

Look for any strange stipulations in rules

This one’s not as important as the others but worth mentioning nonetheless. A recent competition contained a rule that stated the following:

[ your Submission] does not contain confidential information or trade secrets and is not the subject of a registered patent or pending patent application

A user in the forums stated that this stipulation would make the use of dropout illegal since this is a technique patented by Google!

Kernels and Discussion

The Kernels and Discussion tabs should be regularly checked over the course of a competition

Start by checking out some EDAs (Exploratory Data Analyses?, Exploratory Data Analysis’s?, Exploratory Data Analysii?) and gauging your interest level in the domain and topic. Think about any novel ideas that stick out to you as you browse others’ work in terms of suitable model types for the data, feature engineering, etc.

The “Welcome” post in the discussion tab provides great background reading

It never hurts to obtain domain knowledge in the area of the competition. This can help provide insight into how your models are performing and can greatly aid feature engineering. I usually spend a week or two at the start of a competition reading as much as possible to understand the problem space. To aid with this, most competition organisers create an onboarding post in the forums with links to important papers/articles in the domain. They may also provide tips on how to handle larger datasets and basic insights into the data. These threads are always worth checking out and looking back on as you gain more information about the problem at hand.

Exploratory Data Analysis

What should you focus on?

Your initial analysis of a dataset will vary depending upon the type of data that you’re examining. However, the concepts are generally similar across domains and the information below can be adapted to your specific area. To simplify things, let’s assume we’re looking at structured data. There are some basic questions to ask in any initial EDA:

- How is the target distributed?

- Is there any significant correlation between features?

- Are there any missing values in the data?

- How similar are the train and test data?

How is the target distributed?

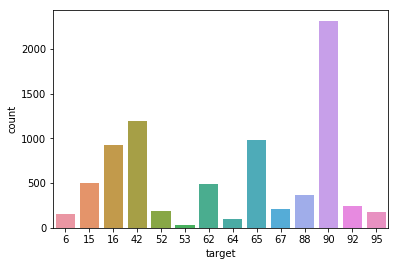

The very first thing to look out for in any dataset is the distribution of the classes. You’ll want to find out quickly if there is a class imbalance as this can have a significant effect on your models down the line, particularly if one class is drowning out the information from the others in training. There are a number of techniques to deal with class imbalance (e.g. SMOTE, ADASYN, manually removing samples, model parameters to handle imbalanced datasets) but first we want to find out if classes are unevenly represented in the data. A quick way of checking this is with seaborn, a plotting library built on the popular matplotlib.

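import seaborn as sns

# Pass the column as x explicitly; recent seaborn versions treat the first positional argument as data
sns.countplot(x=df['target'])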

As we can see, class 90 is over-represented in our data. The aforementioned SMOTE technique and others may create a more balanced training dataset. In turn, this can lead to a model that better generalises to unseen data, where this imbalance may not exist.
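If you’d rather put numbers on the imbalance than read it off a plot, the class proportions can be tabulated directly. The following is a minimal sketch rather than part of the original analysis: the helper name `class_balance` and the toy `df` are assumptions made for illustration.

```python
import pandas as pd

def class_balance(target: pd.Series) -> pd.DataFrame:
    """Return per-class counts and proportions, most frequent class first."""
    counts = target.value_counts()  # sorted by frequency, descending
    return pd.DataFrame({
        "count": counts,
        "proportion": counts / counts.sum(),
    })

# Toy data (hypothetical): class 90 dominates, like the over-represented
# class mentioned above.
df = pd.DataFrame({"target": [90] * 6 + [42] * 3 + [15] * 1})
balance = class_balance(df["target"])
print(balance)
```

A table like this makes it easy to decide whether the imbalance is severe enough to warrant resampling or class weights.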

Is there any significant correlation between features?

Calculating the Pearson correlation coefficient between features can offer valuable insight into how they relate. Knowing whether features are correlated or not can allow for creative feature engineering or removal of unnecessary columns. For example, in the heatmap plot shown below, EXT_SOURCE_1 is the credit rating for a loan applicant as supplied by an external source. DAYS_BIRTH, the age of the applicant in days (a negative integer), is negatively correlated with EXT_SOURCE_1. This might mean that the calculation of EXT_SOURCE_1 involves the age of the applicant. Generally, we want to avoid including a feature that can be linearly derived from another feature (this is known as linear dependence) as it provides redundant information to the model.

import seaborn as sns
import matplotlib.pyplot as plt

def correlation_map(df, columns, figsize=(15, 10)):
    # Pairwise Pearson correlation between the selected columns
    correlation = df.loc[:, columns].corr()
    fig, ax = plt.subplots(figsize=figsize)
    # Annotate each cell with its correlation coefficient
    sns.heatmap(correlation, annot=True, ax=ax)
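A heatmap gets hard to read once you have more than a few dozen features, so it can help to list the strongly correlated pairs directly. Here is a rough sketch of one way to do that; the function name `correlated_pairs`, the 0.9 threshold, and the toy data are all assumptions for illustration.

```python
import numpy as np
import pandas as pd

def correlated_pairs(df: pd.DataFrame, threshold: float = 0.9) -> pd.Series:
    """List feature pairs whose absolute Pearson correlation exceeds threshold."""
    corr = df.corr().abs()
    # Keep only the upper triangle so each pair appears once and the
    # diagonal (a feature with itself) is excluded.
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    pairs = corr.where(mask).stack()
    # NaN entries (masked cells) compare False and are dropped here too.
    return pairs[pairs > threshold].sort_values(ascending=False)

# Toy data (hypothetical): "b" is a linear function of "a", "c" is unrelated.
toy = pd.DataFrame({
    "a": [1.0, 2.0, 3.0, 4.0, 5.0],
    "b": [2.0, 4.0, 6.0, 8.0, 10.0],
    "c": [3.0, 1.0, 4.0, 1.0, 5.0],
})
pairs = correlated_pairs(toy)
print(pairs)
```

Pairs that show up here are candidates for dropping one column, or for a closer look at how one feature might have been derived from the other.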

Are there any missing values in the data?

You always want to ensure that you have a complete training dataset with as few missing values as possible. For instance, if your model finds a feature highly important but it turns out that a high number of rows in that feature are missing values, you could greatly improve your model’s performance by imputing the missing values. This can be done by inferring the value of the feature based on similar rows that do not contain a NaN. Another strategy (known as backfill) is to fill in a missing value with the next non-null value. The mean, median, or mode of the rest of the feature is also sometimes used to impute missing values. The pandas.DataFrame.fillna() method provides a few different options to handle this scenario and this Kaggle kernel is a useful resource to read through.

However, missing values do not always imply that the data was not recorded. Sometimes, it makes sense to include a NaN value for a feature that is not applicable in that individual row. For example, let’s say a loan application dataset with a binary target (whether the applicant was approved or not) includes a feature on whether the individual owns a car. If a given person does not own a car, then another feature for the date of registration of the car will contain a NaN value as there is no meaningful value to fill in here.
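To make the options above concrete, here is a small sketch of median imputation, backfill, and flagging a "not applicable" gap. The toy `loans` frame and its column names are invented for illustration and are not taken from any competition dataset.

```python
import numpy as np
import pandas as pd

# Toy loan data (hypothetical column names).
loans = pd.DataFrame({
    "income": [30.0, np.nan, 50.0, 40.0],
    "owns_car": [1, 0, 1, 0],
    "car_age": [3.0, np.nan, 7.0, np.nan],
})

# Share of missing values per column, to see where imputation matters.
missing_share = loans.isna().mean()

# Median imputation for a value that simply was not recorded.
median_filled = loans["income"].fillna(loans["income"].median())

# Backfill: replace each NaN with the next non-null value in the column.
back_filled = loans["income"].bfill()

# "car_age" is NaN only where the applicant has no car, so the gap itself
# is informative; flag it rather than inventing a value.
loans["car_age_missing"] = loans["car_age"].isna().astype(int)
```

Which strategy is appropriate depends on why the value is missing, so it is worth checking a few affected rows by hand before imputing anything.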

How similar are the train and test data?

The pandas DataFrame object includes a pandas.DataFrame.describe() method which provides statistics on each feature in the dataset such as the max, mean, standard deviation, 50th percentile value, etc. This method returns another DataFrame so you can add further information as you desire. For example, you could have another row that checks for the number of missing values in the column using the following function:

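import pandas as pd

def describe_df(df):
    stats_df = df.describe()
    # Count NaNs per described column and add them as an extra 'nans' row
    nans = df[stats_df.columns].isna().sum().rename('nans')
    return pd.concat([stats_df, nans.to_frame().T])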

This is an extremely useful method and allows you to quickly check the similarity between features in train and test. But what if you had a single number that gave you a good idea of just how close train and test are at a glance? This is where adversarial validation comes in. I know that sounds like a bit of a scary term but it’s a technique you’ll find astonishingly simple once you understand it. Adversarial validation involves the following steps:

- Concatenate both your train and test datasets into one large dataset

- Set the target feature of all train rows to a value of 0

- Fill in a value of 1 for the target feature in all test rows (you can probably see where this is going)

- Create stratified folds from the data (I like to use the sklearn implementation)

- Fit a model like LightGBM to the training folds and validate on the validation fold

- Take the validation predictions across the entire dataset and compute area under the receiver operating characteristic curve (ROC AUC). I use this implementation to calculate the area.

- An area of 0.5 under the ROC curve means that the model cannot distinguish between the train and test rows and therefore the two datasets are similar. The further the area rises above 0.5 toward 1, the more easily the model can tell train and test apart, so it would be worth your while to dig deeper into the data to ensure your model can predict well on test.

I found the following two kernels useful for getting to grips with this technique:

- https://www.kaggle.com/tunguz/adversarial-santander

- https://www.kaggle.com/pnussbaum/adversarial-cnn-of-ptp-for-vsb-power-v12

Why start with tree based models?

Figuring out the right model to start with is important and can be pretty confusing when you’re first starting out. Let’s again assume you’re working with structured data and you want to gain some insights into your data before you get stuck into modelling. I find that a LightGBM or XGBoost model is great for throwing at your data as soon as you enter a new competition. These are both tree-based boosting models that are highly explainable and easy to understand. Both offer functionality to plot their splits so it can be useful to create trees of max depth = 3 or so and view exactly which features the model splits on from the outset.

The lightgbm.Booster.feature_importance() method shows the most important features to the model in terms of number of splits that the model made on a specific feature (importance_type="split") or the amount of information gained by each split on a specific feature (importance_type="gain"). Looking at feature importances is particularly useful in anonymized datasets where you can take the top 5 or so features and infer what the features may be, knowing how important they are to the model. This can greatly aid feature engineering.

You will also find LightGBM in particular to be lightning quick (excuse the pun) to train even without the use of GPUs. Finally, great documentation exists for both models so a beginner should have no trouble picking up either.

Evaluation

You could have the best performing model in the competition and not know it without the use of a reliable evaluation method. It is vital that you understand the official evaluation metric in a competition prior to participation. Once you have a solid grasp on how your submissions will be evaluated, you should ensure that you use the official evaluation metric (or your own version of it if a suitable implementation is not easily available) in training and validation. Combining this with a reliable validation set will stop you from burning through submissions and allow you to experiment quickly and often.

Further to this point, you want to set up your model evaluation in such a manner that you get a single number at the end. Looking at training loss vs. validation loss or a suite of metrics ranging from precision, recall, F1-score, AUROC, etc. can sometimes be useful in production but in competition, you want to be able to quickly glance at one number and say “this model is better than my previous model”. Again, this number should be the official metric. If it isn’t, you should have a good reason for it not to be.
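One way to get that single number is to wrap cross-validation and the metric in one function that every experiment calls. The sketch below is only an illustration: the function name `evaluate` is hypothetical, ROC AUC is a placeholder for whatever the official metric is, `X` and `y` are assumed to be NumPy arrays, and the data is synthetic.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold

def evaluate(model, X, y, metric=roc_auc_score, n_splits=5, seed=0):
    """Return one out-of-fold score for `model` under `metric`."""
    oof = np.zeros(len(y))
    folds = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for fit_idx, val_idx in folds.split(X, y):
        model.fit(X[fit_idx], y[fit_idx])
        oof[val_idx] = model.predict_proba(X[val_idx])[:, 1]
    # Score all out-of-fold predictions at once so the result is one number.
    return metric(y, oof)

# Synthetic data and AUC as a stand-in for the official metric (assumptions).
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
baseline = evaluate(LogisticRegression(C=0.01, max_iter=1000), X, y)
candidate = evaluate(LogisticRegression(C=1.0, max_iter=1000), X, y)
print(f"baseline={baseline:.4f} candidate={candidate:.4f}")
```

Because the folds and seed are fixed, two models are always compared on exactly the same splits, which makes small differences in the score easier to trust.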

If you follow the above advice, you’ll get to a point where you’re experimenting often enough that you’ll need a reliable method for keeping track of your results. I like to use a MongoDB instance running in a Docker container to which I send my model parameters and validation score after each run through my evaluation script. I keep a separate table (or collection as MongoDB likes to call them) for each model. When I’m done running through a few experiments, I dump the records to a local directory on my machine as a MongoDB .archive file and also as a .csv to quickly read through. Code for this can be found here. It should be noted that there are differing schools of thought on how to handle recording results and this is my preferred method but I’d love to hear how other data scientists approach it!
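If you don’t want to run a database just yet, the same idea (one record of parameters and score per run) can be approximated with a plain file. To be clear, this is not the MongoDB setup described above but a lighter stand-in using only the Python standard library; the function names, the JSON Lines format, and the example values are all assumptions.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

def log_run(path, model_name, params, score):
    """Append one experiment record to a JSON Lines file."""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "params": params,
        "score": score,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")

def best_run(path, model_name):
    """Return the highest-scoring record logged for `model_name`."""
    with open(path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f]
    return max((r for r in runs if r["model"] == model_name),
               key=lambda r: r["score"])

# Example with made-up parameters and scores.
log_path = Path(tempfile.mkdtemp()) / "runs.jsonl"
log_run(log_path, "lightgbm", {"learning_rate": 0.1}, 0.71)
log_run(log_path, "lightgbm", {"learning_rate": 0.05}, 0.74)
best = best_run(log_path, "lightgbm")
```

This assumes a higher score is better; for a loss-style metric you would take the minimum instead.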

This blog was originally written by Chirag Chadha. He is a data scientist at UnitedHealth Group/Optum in Dublin, Ireland. You can reach him at his email (chadhac@tcd.ie), through his LinkedIn, or follow him on Kaggle and GitHub.