Naive Bayes is a powerful algorithm for predictive modeling. It is a supervised learning algorithm based on Bayes’ theorem and is used to solve classification problems. It is mainly used in text classification, where the data sets are high-dimensional.

The Naive Bayes classifier is a simple and effective classification algorithm for building fast machine learning models that can make predictions quickly. It is a probabilistic classifier, meaning that it predicts on the basis of the probability that an object belongs to each class.

Popular examples of Naive Bayes Classifier

- Spam filtering

- Sentiment analysis

- Classifying articles

The name is made up of two words, ‘Naive’ and ‘Bayes’, which can be described as follows:

- Naive

It is called Naive because it assumes that the occurrence of one feature is independent of the occurrence of the other features. For example, if a fruit is identified on the basis of color, shape, and taste, then a red, spherical, and sweet fruit is recognized as an apple. Each feature contributes separately to recognizing it as an apple, without depending on the others.

- Bayes

It is called Bayes because it is based on the principle of Bayes’ Theorem.

Bayes’ Theorem

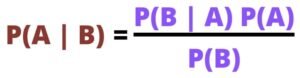

Bayes’ Theorem, also called Bayes’ Rule or Bayes’ Law, is used to determine the probability of a hypothesis given prior knowledge. It depends on conditional probability. The formula for Bayes’ Theorem is:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$

Where,

- P (A | B) is the posterior probability: the probability of hypothesis A given the observed event B.

- P (B | A) is the likelihood: the probability of the evidence B given that hypothesis A is true.

- P (A) is the prior probability: the probability of the hypothesis before observing the evidence.

- P (B) is the marginal probability: the probability of the evidence.
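As a minimal sketch, the relationship between these four quantities can be written as a small Python function. The function name `posterior` and the numbers in the example are assumptions chosen only for illustration; they do not come from the article.

```python
def posterior(likelihood: float, prior: float, evidence: float) -> float:
    """Return P(A | B) = P(B | A) * P(A) / P(B)."""
    if evidence <= 0:
        raise ValueError("P(B) must be positive")
    return likelihood * prior / evidence


# Hypothetical values, for illustration only:
# P(B | A) = 0.8, P(A) = 0.3, P(B) = 0.5
example = posterior(likelihood=0.8, prior=0.3, evidence=0.5)
print(round(example, 2))
```

The guard on `evidence` reflects that the formula is undefined when the observed event has zero probability.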

The basic assumptions of Naive Bayes are that each feature makes an independent and equal contribution to the outcome. These assumptions are generally not correct in real-world situations. In fact, the independence assumption rarely holds, but the classifier typically works well in practice.

Working of Naive Bayes Algorithm

To understand how the Naive Bayes algorithm works, let us consider an example.

Suppose we have a training data set of weather conditions and a target variable “Playing Golf”. We want to classify whether a boy will play golf based on the weather conditions.

The input data set is:

| Weather | Playing Golf |
| --- | --- |
| Sunny | No |
| Rainy | Yes |
| Overcast | Yes |
| Sunny | Yes |
| Overcast | Yes |
| Rainy | No |
| Sunny | Yes |
| Sunny | Yes |
| Rainy | No |
| Rainy | Yes |
| Overcast | Yes |
| Rainy | No |
| Overcast | Yes |
| Sunny | No |

Step 1 – Build a frequency table from the input data set.

| Weather | Yes | No |
| --- | --- | --- |
| Sunny | 3 | 2 |
| Overcast | 4 | 0 |
| Rainy | 2 | 3 |
| Total | 9 | 5 |
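The frequency table can be reproduced with a short Python sketch that counts the (weather, label) pairs of the input data set. The variable names are illustrative assumptions, not part of the original example.

```python
from collections import Counter

# (weather, playing golf) pairs copied from the input data set
data = [
    ("Sunny", "No"), ("Rainy", "Yes"), ("Overcast", "Yes"), ("Sunny", "Yes"),
    ("Overcast", "Yes"), ("Rainy", "No"), ("Sunny", "Yes"), ("Sunny", "Yes"),
    ("Rainy", "No"), ("Rainy", "Yes"), ("Overcast", "Yes"), ("Rainy", "No"),
    ("Overcast", "Yes"), ("Sunny", "No"),
]

counts = Counter(data)  # counts[(weather, label)] -> number of rows

# One row per weather value, one column per class label
frequency = {
    weather: {"Yes": counts[(weather, "Yes")], "No": counts[(weather, "No")]}
    for weather in ("Sunny", "Overcast", "Rainy")
}

# Column totals
totals = {
    label: sum(row[label] for row in frequency.values())
    for label in ("Yes", "No")
}

for weather, row in frequency.items():
    print(weather, row["Yes"], row["No"])
print("Total", totals["Yes"], totals["No"])
```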

Step 2 – Build a likelihood table by calculating the probability of each weather condition and of each outcome of playing golf.

| Weather | Yes | No | Probability |
| --- | --- | --- | --- |
| Sunny | 3 | 2 | 5/14 = 0.36 |
| Overcast | 4 | 0 | 4/14 = 0.29 |
| Rainy | 2 | 3 | 5/14 = 0.36 |
| Total | 9 | 5 | |
| Probability | 9/14 = 0.64 | 5/14 = 0.36 | |
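The probabilities in the last row and last column follow directly from the counts. The sketch below derives them with exact fractions; the dictionary layout and names are assumptions made for illustration.

```python
from fractions import Fraction

# Counts taken from the frequency table
frequency = {
    "Sunny": {"Yes": 3, "No": 2},
    "Overcast": {"Yes": 4, "No": 0},
    "Rainy": {"Yes": 2, "No": 3},
}
n = sum(sum(row.values()) for row in frequency.values())  # number of observations

# P(weather): row total divided by the number of observations
p_weather = {w: Fraction(sum(row.values()), n) for w, row in frequency.items()}

# P(class): column total divided by the number of observations
p_class = {
    label: Fraction(sum(row[label] for row in frequency.values()), n)
    for label in ("Yes", "No")
}

for name, p in {**p_weather, **p_class}.items():
    print(f"{name}: {p.numerator}/{p.denominator} = {float(p):.2f}")
```

Note that `Fraction` reduces 4/14 to 2/7 when printing; the value is the same.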

Step 3 – For each class, calculate the posterior probability using the Naive Bayes equation.

Prediction: A boy will play golf if the weather is overcast. Is this prediction correct?

Solution:

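- P (Yes | Overcast) = (P (Overcast | Yes) * P (Yes)) / P (Overcast)

- P (Overcast | Yes) = 4/9 = 0.44

- P (Yes) = 9/14 = 0.64

- P (Overcast) = 4/14 = 0.29

Substituting these values into the formula:

- P (Yes | Overcast) = (4/9 * 9/14) / (4/14)

- P (Yes | Overcast) = 1.0

The posterior probability is 1 because every overcast day in the data set has the outcome “Yes”, so the prediction is correct. (Using the rounded decimals instead of the fractions gives roughly 0.97 because of rounding error.)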
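As a check on the arithmetic, the same formula can be evaluated with exact fractions for both classes. In this data set every overcast row has the label “Yes”, so the exact posterior P (Yes | Overcast) is 1. The names below are illustrative assumptions.

```python
from fractions import Fraction

# Counts for the "Overcast" row and the column totals of the frequency table
overcast = {"Yes": 4, "No": 0}
class_totals = {"Yes": 9, "No": 5}
n = 14  # number of observations

p_overcast = Fraction(sum(overcast.values()), n)  # P(Overcast) = 4/14

posteriors = {}
for label in ("Yes", "No"):
    likelihood = Fraction(overcast[label], class_totals[label])  # P(Overcast | class)
    prior = Fraction(class_totals[label], n)                     # P(class)
    posteriors[label] = likelihood * prior / p_overcast          # Bayes' theorem

# The predicted class is the one with the largest posterior
prediction = max(posteriors, key=posteriors.get)
print(posteriors, prediction)
```

Working with `Fraction` rather than rounded decimals avoids the rounding error that creeps in when 0.44, 0.64, and 0.29 are multiplied and divided by hand.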

Applications of Naive Bayes Classifier

- Real-Time Predictions

Naive Bayes is a fast, eager learning classifier, which makes it well suited to real-time predictions.

- Multi-Class Predictions

It is also well known for multi-class prediction: it can predict the probability of each of several classes of the target variable.

- Credit scoring

- Spam filtering and Sentiment analysis

It is widely used for text classification tasks such as these.

- Medical Data Classification

- Recommendation system

A recommendation system can be built by combining the classifier with collaborative filtering. The system uses data mining and machine learning techniques to filter unseen information and predict whether a user is likely to appreciate a given resource.

Types of models in Naive Bayes Classifier

- Gaussian

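This model assumes that the continuous values of each feature are distributed according to a Gaussian distribution, also known as the Normal distribution.

The likelihood of a feature value given a class is assumed to be Gaussian. Hence the conditional probability can be calculated as:

$$P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right)$$

where $\mu_y$ and $\sigma_y^2$ are the mean and variance of feature $x_i$ estimated from the training examples of class $y$.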

- Multinomial

The feature vectors represent the frequencies with which particular events occur, and these frequencies are assumed to be generated by a multinomial distribution. This model is popular for document classification.

- Bernoulli

With this model, the inputs are features that are independent binary variables (Booleans). Like Multinomial Naive Bayes, it is commonly used for document classification.
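The three variants differ mainly in how a feature value is represented and scored. The sketch below shows the Gaussian density for a continuous value, and the count-based versus binary feature vectors that the Multinomial and Bernoulli models work with. The function names, vocabulary, and document are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def gaussian_likelihood(x, mean, variance):
    """Gaussian model: density of a continuous feature value x for one class."""
    exponent = -((x - mean) ** 2) / (2 * variance)
    return math.exp(exponent) / math.sqrt(2 * math.pi * variance)


def multinomial_features(tokens, vocabulary):
    """Multinomial model: how many times each vocabulary word occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def bernoulli_features(tokens, vocabulary):
    """Bernoulli model: 1 if the word occurs at all, otherwise 0."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocabulary]


# Hypothetical vocabulary and document, for illustration only
vocabulary = ["free", "offer", "meeting"]
document = "free free offer".split()

count_vector = multinomial_features(document, vocabulary)
binary_vector = bernoulli_features(document, vocabulary)
density = gaussian_likelihood(0.0, mean=0.0, variance=1.0)

print(count_vector, binary_vector, round(density, 4))
```

The repeated word "free" is counted twice in the multinomial vector but only marked as present in the Bernoulli vector, which is the practical difference between the two document models.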

Advantages of Naive Bayes Classifier

- Easy to implement compared to others

- Fast

- When the independence assumption holds, it can perform better than other models

- It requires less training data

- It can handle both discrete and continuous data

- Highly scalable

- It is used to make probabilistic predictions

- It handles missing data easily

- Updating is easy when new data arrives

- It is best suited for text classification problems

Disadvantages of Naive Bayes Classifier

- Data scarcity

- There is a chance of loss in accuracy

- Zero frequency

- It makes the strong assumption that the features are independent, which is rarely true in real-life applications
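The zero-frequency problem shows up in the golf example: “Overcast” never occurs with “No”, so P (Overcast | No) = 0/5 = 0. With several features, one such zero wipes out the whole product of likelihoods. A common remedy, which the text above does not cover, is additive (Laplace) smoothing. The following is a minimal sketch under that assumption; the function name and the parameter `alpha` are illustrative.

```python
from fractions import Fraction


def smoothed_likelihood(count, class_total, n_values, alpha=1):
    """Additive smoothing: (count + alpha) / (class_total + alpha * n_values).

    n_values is the number of distinct values the feature can take
    (3 here: Sunny, Overcast, Rainy). alpha=0 disables smoothing.
    """
    return Fraction(count + alpha, class_total + alpha * n_values)


# "No" column of the frequency table: Sunny=2, Overcast=0, Rainy=3, total=5
no_counts = {"Sunny": 2, "Overcast": 0, "Rainy": 3}

unsmoothed = smoothed_likelihood(no_counts["Overcast"], 5, 3, alpha=0)
smoothed = {w: smoothed_likelihood(c, 5, 3) for w, c in no_counts.items()}

print(unsmoothed, smoothed["Overcast"])
```

The smoothed likelihoods still sum to 1 across the three weather values, so they remain a valid probability distribution.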