HTML Interview Questions

Introduction to HTML Interview Questions

Are you preparing for an HTML interview? This article collects common HTML interview questions. Knowing what to expect and having a good grasp of the basics of the language are keys to success. In this brief introduction to HTML, we’ll take a look at the fundamentals so you can ace your next HTML interview.

HTML, or HyperText Markup Language, is a markup language used in web development. It’s used to structure webpages and web applications, and it serves as the backbone of nearly every website. As you prepare for your upcoming HTML interview, it’s helpful to familiarize yourself with the basics of the language.

The basic structure of an HTML document is composed of two elements: the document head and the document body. The head contains information about the page, such as the title, meta tags, or scripts, while the body contains all of the visible content on a page, such as text and images.

HTML documents are built out of elements, which are identified by tags. Most elements consist of an opening tag, some content, and a closing tag; void elements such as <br> and <img> have no content and no closing tag. Tags describe the structure and meaning of the content, while its appearance is mostly controlled with CSS. Elements can also carry attributes, written in the opening tag, that provide extra information about them, such as class names or link URLs.

It’s also important to know that elements can be nested inside each other, allowing you to create more complex structures with ease. For example, you could have one element wrapped around multiple other elements and it would still function as intended.

1. What is HTML?

HTML stands for HyperText Markup Language. It is the standard markup language used to create web pages and web applications. An HTML document combines text content with markup that describes its structure. The markup consists of tags, which are keywords surrounded by angle brackets, like <html>. Tags tell a web browser such as Chrome, Firefox, or Internet Explorer how the document should be interpreted, for example which parts are headings, paragraphs, images, or tables. HTML also supports links to external files, so developers can include scripts (e.g., JavaScript) or stylesheets (e.g., CSS).

Note: It is one of the important HTML interview questions.

2. What are Attributes and how do you use them?

Attributes are pieces of additional information which can be attached to elements on a web page. They provide extra details about the element, such as its size, color, or other characteristics. Attributes are always specified within the opening tag of an HTML element.

For example, if you want to create a hyperlink using HTML, you could use the <a> tag with a “href” attribute specifying the URL:

<a href="https://example.com">Link Text</a>

In this case, the “href” attribute is providing additional information about what should happen when someone clicks on that link – it should take them to the specified website.

Interviewers may also ask you to describe how attributes are used on other elements, such as images and forms, so it’s important to understand how they work and what role they play in structuring your webpages correctly. For example, an image element needs a src attribute that points to the image file, and setting its width and height attributes lets the browser reserve the right amount of space before the image loads.
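To see that attributes really do live in the opening tag, here is a minimal sketch that uses Python's standard html.parser module to list each tag with its attributes. The class name, the helper function, and the sample markup (including the logo.png file name) are made up for illustration.

```python
from html.parser import HTMLParser


class AttributeCollector(HTMLParser):
    """Records every opening tag together with its attributes."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs taken from the opening tag
        self.found.append((tag, dict(attrs)))


def collect_attributes(markup):
    """Return a list of (tag, attributes) tuples for the given HTML string."""
    parser = AttributeCollector()
    parser.feed(markup)
    parser.close()
    return parser.found


# Hypothetical sample markup: a link and an image
sample = (
    '<a href="https://example.com">Link Text</a>'
    '<img src="logo.png" alt="Logo" width="120" height="40">'
)

for tag, attrs in collect_attributes(sample):
    print(tag, attrs)
```

The closing </a> tag contributes nothing to the output, because attributes are only ever read from opening tags.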

Note: It is one of the important HTML interview questions.

3. When are comments used in HTML?

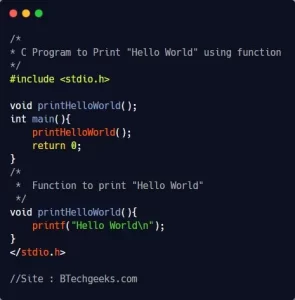

Comments in HTML are used to document or explain the code. They are not displayed when the page is viewed in a browser, although they remain visible to anyone who views the page source. Comments help developers and others who read or modify the code by providing context or instructions. A comment starts with ‘<!--’ and ends with ‘-->’. For example: <!-- This is an HTML comment -->. Comments can reduce errors during editing and make it easier for new developers to understand existing code by explaining how parts of the HTML document work.
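As a rough illustration of why comments never reach the rendered page, the sketch below uses Python's standard html.parser module, which reports comments and visible text through separate callbacks. The class and function names and the sample markup are assumptions for the example.

```python
from html.parser import HTMLParser


class CommentSplitter(HTMLParser):
    """Separates HTML comments from the text a visitor would actually see."""

    def __init__(self):
        super().__init__()
        self.comments = []
        self.visible_text = []

    def handle_comment(self, data):
        # Called for everything between <!-- and -->
        self.comments.append(data.strip())

    def handle_data(self, data):
        # Called for ordinary text content between tags
        if data.strip():
            self.visible_text.append(data.strip())


def split_comments(markup):
    """Return (comments, visible_text) found in the given HTML string."""
    parser = CommentSplitter()
    parser.feed(markup)
    parser.close()
    return parser.comments, parser.visible_text


markup = "<!-- This is an HTML comment --><p>Visible paragraph</p>"
comments, visible = split_comments(markup)
print("comments:", comments)
print("visible:", visible)
```

A browser makes the same distinction: the comment is parsed and kept in the document, but it is not part of what gets displayed.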

Note: It is one of the important HTML interview questions.

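4. Name some common lists that are used when designing a page.

HTML provides three list elements: unordered lists (<ul>), ordered lists (<ol>), and description lists (<dl>). When designing a page, they are commonly used for the following:

- Navigation Menu List: This list contains links to the different pages on your website, and provides navigation to users.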

- Header List: The header list covers all of the important information visitors will need when they visit a page, such as titles, taglines and logos.

- Footer List: The footer list contains important information about copyrights and other related terms of an organization or website’s use that must be included somewhere on the page for legal reasons.

- Form Fields List: This is used when creating HTML forms with multiple fields like contact forms or search boxes in which every field has separate input name, label and type attributes defined by HTML standards.

- Article List: For sites with multiple articles this can be useful for listing them in order to make it easier for a visitor to find one specific article from many articles published on the site.

- Images & Media Lists: Images are often added to webpages as part of their design, so it helps to keep a list of their source, size, and type in case you later want to change them or remove unnecessary ones that would otherwise load each time someone visits your site.

- Typical Content Area Lists: Another general type of list keeps content organized and is typically placed just below a site’s header or above its footer. These lists usually include items such as recent posts or articles, featured images or videos, and links, so that users can quickly find relevant content without scrolling through the whole site.

5. What are the tags used to separate a section of texts?

Several HTML tags are used to separate sections of text: <p> for paragraphs, <h1> through <h6> for headings, <br> for line breaks, <ul> and <ol> for unordered and ordered lists, and generic containers such as <div> and <span>. Additionally, attributes such as id or class can be applied to any element so that it can be targeted for styling. A stylesheet language such as CSS, sometimes together with JavaScript, can then be used to customize the text’s appearance further.

Note: It is one of the important HTML interview questions.

6. What is the purpose of using alternative texts in images?

Alternative text (alt text) is a short description of an image, supplied through the alt attribute of the <img> tag. Its primary purpose is to improve accessibility: assistive technologies such as screen readers cannot interpret the image itself, so they read the alt text to people who are visually impaired. It also helps search engines index images appropriately, which can improve overall website visibility. Additionally, it serves as a brief textual alternative when an image cannot be displayed due to technical issues such as a slow network connection or a broken image URL.

Note: It is one of the important HTML interview questions.

7. Why is a URL encoded in HTML?

A URL (Uniform Resource Locator) is a string of text that represents the address of a web page or other resource on the internet. URLs may only contain a limited set of ASCII characters, so characters outside that set, such as spaces and non-ASCII letters, and characters that have a special meaning in a URL, such as ‘&’ or ‘?’, must be encoded. URL encoding (also called percent-encoding) replaces each such character with a ‘%’ followed by two hexadecimal digits; a space, for example, becomes %20. Encoding ensures that the browser and the server interpret the address the same way, so users reach the intended resource. Properly encoding user-supplied values before placing them in a URL can also reduce the risk of injection attacks such as cross-site scripting, although it is not a complete defense on its own.
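The effect of URL encoding is easy to try out. The following is a small sketch using Python's standard urllib.parse and html modules; the host name, file name, and query parameters are invented for the example.

```python
from html import escape
from urllib.parse import quote, urlencode

# Hypothetical search URL; the host and parameter names are made up.
base = "https://example.com/search"
params = {"q": "html interview questions", "tag": "c++ & css"}

# quote() percent-encodes characters that are not safe inside a path segment.
path_segment = quote("my résumé.html")

# urlencode() builds a query string and encodes every key and value.
query = urlencode(params)
url = f"{base}?{query}"

# When the URL is written into an href attribute, the remaining "&"
# separator must additionally be escaped as an HTML entity.
href_value = escape(url)

print(path_segment)
print(url)
print(href_value)
```

Note that two different layers are involved: percent-encoding makes the URL itself valid, while the final escape step only makes it safe to embed in HTML markup.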

Note: It is one of the important HTML interview questions.

8. What is the advantage of collapsing white space?

When a browser renders HTML, it collapses any run of white space characters (spaces, tabs, and line breaks) into a single space. The main advantage is that developers can indent and break lines in the source code freely without changing how the page is displayed. This makes it possible to give HTML a neat, organized structure that is easier to read and debug later. It also means that extra spaces typed by accident do not affect the layout. When white space must be preserved, the <pre> element or the CSS white-space property can be used.
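The idea can be approximated in a few lines of Python. This is only a simplified model of white space collapsing in normal text flow, not the exact algorithm a browser uses (the real rules depend on the CSS white-space property); the function name and sample text are assumptions.

```python
import re


def collapse_whitespace(text):
    """Replace every run of spaces, tabs, and line breaks with one space.

    Leading and trailing white space is dropped as well, which roughly
    matches how the text of a block such as a paragraph is displayed.
    """
    return re.sub(r"[ \t\r\n\f]+", " ", text).strip()


# Text content of a heavily indented paragraph, as it might appear in source
source_text = """
        Hello,      world!
        This   sentence spans
        several lines in the source.
"""

print(collapse_whitespace(source_text))
```

However the source is indented, the displayed result is the same single line of text, which is why HTML can be formatted for readability without side effects.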

9. What is the relationship between the border and rule attributes?

The border and rules attributes both control the lines drawn in an HTML table. The ‘border’ attribute of the <table> tag sets the width of the frame around the table, while the ‘rules’ attribute specifies which lines are drawn between the cells, for example only between rows or only between columns. The two are related through their defaults: a nonzero ‘border’ value implies that rules are drawn between all cells, and a ‘border’ of 0 implies no rules unless the ‘rules’ attribute says otherwise. Both attributes are obsolete in HTML5, where CSS border properties are used instead.

Note: It is one of the important HTML interview questions.

10. Is there any way to keep list elements straight in an HTML file?

Yes, there are several ways to keep list elements straight in an HTML file. In the source code, consistently indenting each <li> inside its parent <ul> (unordered list) or <ol> (ordered list) keeps nested lists easy to follow. On the rendered page, CSS properties such as padding and margin control how list items line up. Additionally, applying a style class to the list elements can help keep their presentation consistent.

11. How do you create a link that will connect to another web page when clicked?

Creating a link that connects to another web page when clicked is straightforward. You use HTML’s <a> tag and set its “href” attribute to the address of the destination page. You can also set the optional “target” attribute to “_blank” so that the destination opens in a new window or tab. Here is an example:

<a href="https://www.examplewebsite/html-interview-questions" target="_blank">html interview questions</a>

This code sets up a link where if a user clicks on “html interview questions,” it will take them to https://www.examplewebsite/html-interview-questions and open in a new tab or window (depending on their browser settings).

Note: It is one of the important HTML interview questions.

12. What are the limits of the text field size?

The HTML standard does not define a fixed maximum length for a text field. By default, the practical limit depends on the browser and on whatever processes the submitted value, such as the server, language, or framework, each of which may impose its own maximum string length. You can set an explicit limit with the maxlength attribute of the <input> tag; the separate size attribute only controls how many characters wide the field is displayed. Generally speaking, it’s best to set reasonable limits on any text field input depending on what you expect your users to be entering.

13. What are the new FORM elements which are available in HTML5?

HTML 5 supports a range of new FORM elements which offer extra features, usability and flexibility to users. These include:

- New <input> types – the <input> element itself predates HTML5 and already handled text, passwords, files, and images, but HTML5 added new values for its type attribute, such as email, url, number, and search

- <datalist> element – this allows the user to select an item from a list of pre-defined values

- <meter> element – this displays a numeric value in graphical format (such as a bar graph)

- <progress> element – this shows how far along a task has progressed and can be used for showing the loading progress of an application or website

- <keygen> element – helps with secure authentication by generating public-private key pairs; note that it has since been deprecated and removed from the HTML standard

- The range input type – this enables users to select numerical values between two specified numbers or within certain ranges

- The color picker control – gives users the ability to choose colors from a predefined palette or enter their own RGB values

- Date/Time inputs – this makes it easier for users when entering date or time information into forms.

Note: It is one of the important HTML interview questions.

14. How many types of CSS can be included in HTML?

There are three types of CSS that can be included in HTML: internal, external, and inline.

Internal CSS is where a style sheet is defined within the <style> tag within an HTML document. This type of styling applies to all the elements on the page it is used in. The benefit of using internal CSS is that it allows for more specific control over various elements on the page without affecting other pages or websites.

External CSS takes styling information from an external file and applies it to whatever page uses that file. By using external stylesheets, developers can separate content from design by keeping their styling information outside of an HTML document while still applying it to any webpages calling upon its use. External stylesheets are generally easier to maintain than Internal or Inline methods as they allow for easy updating across multiple pages at once.

Inline CSS involves writing rules for an element directly in its opening tag via the style attribute (e.g., style="color: #ff0000"). This method should generally be avoided as it adds code bloat and goes against recommended best practices like separation of concerns (content vs presentation). Additionally, any changes made with inline styles must be applied manually to every element, which makes maintenance more difficult than with the other methods listed above.

15. How can you apply JavaScript to a web page?

JavaScript is applied to a web page in the form of scripts, which are snippets of code written in JavaScript. Scripts are added to an HTML document using the <script> tag, either inline or by referencing an external JavaScript file with the src attribute. Scripts typically add dynamic elements and behaviors to the page, such as displaying interactive content, validating forms, animating elements on mouse hover, and triggering AJAX requests that retrieve data from a server. To improve compatibility across browsers, it helps to use feature detection and polyfills when writing JavaScript for a website.

Note: It is one of the important HTML interview questions.

All of the HTML interview questions marked with a note above are especially important, but learning every question in this list will give you the best chance of clearing the interview.

Challenges You Might Encounter When Working With HTML

When it comes to designing webpages, HTML is one of the most widely used languages. It provides powerful and user-friendly tools for creating the structure and layout of websites. However, writing HTML can often be a challenge. In this blog, we’ll take a look at some of the most common challenges you might encounter when working with HTML code.

Invalid Syntax:

One of the biggest challenges when writing HTML code is making sure your syntax is valid. This means that all of your HTML tags must be spelled correctly and properly formed, with every opening tag matched by its closing tag where one is required. Browsers try to recover from errors, but invalid syntax can still cause a page to display incorrectly or parts of it not to appear at all. So if you want your websites to look professional and function correctly, double-check your syntax for any errors before you publish it online. Practice HTML interview questions related to invalid syntax.

Poor Layout:

Another challenge when writing HTML is creating an appealing layout for your website. It’s important to make sure that your pages have an organized structure and pleasing design so that they don’t look cluttered or overwhelming to visitors. You should also be mindful of using plenty of white space between elements on a page so that there is room for visuals and text without an overcrowded feel.

Cross Browser Compatibility Issues:

When building a website, it needs to be compatible with different web browsers such as Chrome, Firefox, Safari, and Internet Explorer. This compatibility ensures that everyone can view the website easily no matter which browser they use. Failure to test against all browsers can result in unexpected problems such as missing images or misalignments on certain browsers, so always make sure to test thoroughly against different browsers before going live with a site.

Conclusion

Are you looking to land a job in HTML development? It can be stressful to learn HTML interview questions, so it’s important to do your research and come prepared. This article discussed the various types of web development roles and the different skills that are typically assessed in an HTML interview. We also discussed the importance of mock HTML interview questions and provided research tips to help you better prepare for your html interview questions.

When it comes to HTML interviews, employers will often ask a variety of html interview questions related to coding, design, problem solving, and more. It’s important to understand the differences between frontend and backend development roles, as well as junior, midlevel and senior positions. Make sure you familiarize yourself with the various technologies that may be used in each role. You should also know what type of coding languages are necessary for each role so you can adequately explain why you’re qualified for the job.

It’s important to do your own research before answering HTML interview questions so that you have an understanding of the company’s specific products and services. Additionally, practicing mock HTML interview questions is a great way to become comfortable with answering HTML interview questions smoothly and accurately in a timely manner. Engaging with someone who knows the interviewing process from both sides—being asked HTML interview questions as well as asking them—can be invaluable when preparing for an HTML interview.

In conclusion, doing your due diligence prior to any HTML interview will greatly increase your chances of success by helping establish credibility during the process. Researching various web development roles and brushing up on skills like coding languages can make all the difference when you walk into an interviewer’s office! Practicing mock HTML interview questions through sites like Interview Cafe or html interview questions World could prove invaluable when

We hope these HTML interview questions help you in your interview and make you feel confident in front of your interviewer.

Top 30+ Spark Interview Questions

Apache Spark is an open-source, lightning-quick cluster computing platform that extends the MapReduce model popularized by Hadoop. It supports a variety of computational approaches for rapid and efficient processing. Spark is recognized for its in-memory cluster computing, which is the primary factor in enhancing the processing speed of Spark applications. Matei Zaharia started Spark at UC Berkeley’s AMPLab in 2009. It was open-sourced in 2010 under the BSD License and contributed to the Apache Software Foundation in 2013. Spark became a top-level Apache project in 2014.

In the ever-changing field of data processing and analytics, knowing Apache Spark is an essential skill for individuals wishing to flourish in big data technology. Whether you’re preparing for your first Spark interview or trying to further your career, a thorough grasp of Spark interview questions is critical to success.

Starting a Spark interview may be both exciting and difficult. Employers are keen to identify people who understand Spark’s design, programming paradigms, and seamless interaction with a variety of data sources. This thorough guide is intended to provide you with the information and confidence necessary to succeed in Spark interviews.

Our handpicked Spark interview questions cover the framework’s breadth and complexity. From basic notions to complex optimization methodologies, we’ve accumulated an extensive list to guarantee you’re ready for every interview circumstance. So, brace up as we delve deep into the realm of Spark interview questions, providing you with the knowledge you need to flourish in your next professional meeting.

Here we have compiled a list of the top Apache Spark interview questions. These will help you gauge your Apache Spark preparation for cracking that upcoming interview. Do you think you can get the answers right? Well, you’ll only know once you’ve gone through it!

Question: Can you explain the key features of Apache Spark?

Answer:

Question: What advantages does Spark offer over Hadoop MapReduce?

Answer:

Question: Please explain the concept of RDD (Resilient Distributed Dataset). Also, state how you can create RDDs in Apache Spark.

Answer: An RDD, or Resilient Distributed Dataset, is a fault-tolerant collection of elements that can be operated on in parallel. The partitioned data in an RDD is distributed and immutable.

Fundamentally, RDDs are portions of data stored in memory and distributed over many nodes. RDDs are lazily evaluated in Spark, which is one of the main factors contributing to the speed achieved by Apache Spark. RDDs are of two types: parallelized collections, created from an existing collection in the driver program, and Hadoop datasets, created from files in HDFS or another supported storage system.

There are two ways of creating an RDD in Apache Spark: by loading an external dataset, or by parallelizing an existing collection in the driver program with the parallelize method, for example:

val DataArray = Array(22, 24, 46, 81, 101)
val DataRDD = sc.parallelize(DataArray)

Question: What are the various functions of Spark Core?

Answer: Spark Core acts as the base engine for large-scale parallel and distributed data processing. It is the distributed execution engine used in conjunction with the Java, Python, and Scala APIs that offer a platform for distributed ETL (Extract, Transform, Load) application development.

Various functions of Spark Core are:

Furthermore, additional libraries built on top of Spark Core allow it to handle diverse workloads for machine learning, streaming, and SQL query processing.

Question: Please enumerate the various components of the Spark Ecosystem.

Answer:

Question: Is there any API available for implementing graphs in Spark?

Answer: GraphX is the API used for implementing graphs and graph-parallel computing in Apache Spark. It extends the Spark RDD with a Resilient Distributed Property Graph. It is a directed multi-graph that can have several edges in parallel.

Each edge and vertex of the Resilient Distributed Property Graph has user-defined properties associated with it. The parallel edges allow for multiple relationships between the same vertices.

In order to support graph computation, GraphX exposes a set of fundamental operators, such as joinVertices, mapReduceTriplets, and subgraph, and an optimized variant of the Pregel API.

The GraphX component also includes an increasing collection of graph algorithms and builders for simplifying graph analytics tasks.

Question: Tell us how will you implement SQL in Spark?

Answer: Spark SQL modules help in integrating relational processing with Spark’s functional programming API. It supports querying data via SQL or HiveQL (Hive Query Language).

Also, Spark SQL supports a wide range of data sources and allows SQL queries to be combined with code transformations. The DataFrame API, Data Source API, Interpreter & Optimizer, and SQL Service are the four libraries contained in Spark SQL.

Question: What do you understand by the Parquet file?

Answer: Parquet is a columnar format that is supported by several data processing systems. With it, Spark SQL performs both read as well as write operations. Having columnar storage has the following advantages:

Question: Can you explain how you can use Apache Spark along with Hadoop?

Answer: Compatibility with Hadoop is one of the leading advantages of Apache Spark. The duo makes for a powerful tech pair. Using Apache Spark with Hadoop combines Spark’s processing power with the best of Hadoop’s HDFS and YARN abilities.

Following are the ways of using Hadoop Components with Apache Spark:

Question: Name various types of Cluster Managers in Spark.

Answer:

Question: Is it possible to use Apache Spark for accessing and analyzing data stored in Cassandra databases?

Answer: Yes, it is possible to use Apache Spark for accessing as well as analyzing data stored in Cassandra databases using the Spark Cassandra Connector. The connector needs to be added to the Spark project. When a Spark executor runs alongside a Cassandra node, it can talk to that local node and query only local data.

Connecting Cassandra with Apache Spark allows making queries faster by means of reducing the usage of the network for sending data between Spark executors and Cassandra nodes.

Question: What do you mean by the worker node?

Answer: Any node that is capable of running the code in a cluster can be said to be a worker node. The driver program needs to listen for incoming connections and then accept the same from its executors. Additionally, the driver program must be network addressable from the worker nodes.

A worker node is basically a slave node. The master node assigns work that the worker node then performs. Worker nodes process the data stored on the node and report their resources to the master node. The master node schedules tasks based on resource availability.

Question: Please explain the sparse vector in Spark.

Answer: A sparse vector stores only the non-zero entries of a vector in order to save space. It has two parallel arrays, one for the indices and one for the values.

An example of a sparse vector is as follows:

Vectors.sparse(7, Array(0, 1, 2, 3, 4, 5, 6), Array(1650d, 50000d, 800d, 3.0, 3.0, 2009, 95054))
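To make the two-parallel-arrays idea concrete, here is a minimal plain-Python model of a sparse vector. It is not Spark's implementation and does not use Spark at all; the class name, method name, and sample values are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SparseVector:
    """Stores only the non-zero entries: indices[i] holds the position of values[i]."""

    size: int
    indices: List[int]
    values: List[float]

    def to_dense(self):
        """Expand to a full list, filling every unlisted position with 0.0."""
        dense = [0.0] * self.size
        for index, value in zip(self.indices, self.values):
            dense[index] = value
        return dense


# A vector of length 7 with only two non-zero entries
vec = SparseVector(size=7, indices=[1, 4], values=[3.0, 9.5])
print(vec.to_dense())
```

The saving comes from storing two short arrays instead of seven numbers; the more zeros the vector contains, the bigger the benefit.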

Question: How will you connect Apache Spark with Apache Mesos?

Answer: Step by step procedure for connecting Apache Spark with Apache Mesos is:

Question: Can you explain how to minimize data transfers while working with Spark?

Answer: Minimizing data transfers as well as avoiding shuffling helps in writing Spark programs capable of running reliably and fast. Several ways for minimizing data transfers while working with Apache Spark are:

Question: What are broadcast variables in Apache Spark? Why do we need them?

Answer: Rather than shipping a copy of a variable with tasks, a broadcast variable helps in keeping a read-only cached version of the variable on each machine.

Broadcast variables are also used to provide every node with a copy of a large input dataset. Apache Spark tries to distribute broadcast variables using efficient broadcast algorithms to reduce communication costs.

Using broadcast variables removes the need to ship copies of a variable with each task, so data can be processed quickly. Compared to an RDD lookup(), broadcast variables let a lookup table be stored in memory, which improves retrieval efficiency.

Question: Please provide an explanation on DStream in Spark.

Answer: DStream is short for Discretized Stream. It is the basic abstraction offered by Spark Streaming and represents a continuous stream of data. A DStream is either received directly from a data source or produced by transforming another input stream.

A DStream is represented by a continuous series of RDDs, where each RDD contains data from a certain interval. An operation applied to a DStream is analogous to applying the same operation on the underlying RDDs. A DStream has two kinds of operations: transformations, which produce a new DStream, and output operations, which write data to an external system.

It is possible to create DStream from various sources, including Apache Kafka, Apache Flume, and HDFS. Also, Spark Streaming provides support for several DStream transformations.

Question: Does Apache Spark provide checkpoints?

Answer: Yes, Apache Spark provides checkpoints. They allow a program to run around the clock and make it resilient to failures that are not related to application logic. Lineage graphs are used for recovering RDDs from a failure.

Apache Spark comes with an API for adding and managing checkpoints. The user decides which data to checkpoint. Checkpoints are preferred over lineage graphs when the latter are long and have wide dependencies.

Question: What are the different levels of persistence in Spark?

Answer: Although the intermediary data from different shuffle operations automatically persists in Spark, it is recommended to call the persist() method on the RDD if the data is to be reused.

Apache Spark features several persistence levels for storing the RDDs on disk, memory, or a combination of the two with distinct replication levels. These various persistence levels are:

Question: Can you list down the limitations of using Apache Spark?

Answer:

Question: Define Apache Spark?

Answer: Apache Spark is an easy-to-use, highly flexible, and fast processing framework with an advanced engine that supports cyclic data flow and in-memory computing. It can run standalone, in the cloud, or on Hadoop, and it provides access to varied data sources such as Cassandra, HDFS, HBase, and others.

Question: What is the main purpose of the Spark Engine?

Answer: The main purpose of the Spark Engine is to schedule, monitor, and distribute the data application across the cluster.

Question: Define Partitions in Apache Spark?

Answer: A partition in Apache Spark is a smaller, logical division of the data, similar to a split in MapReduce. Partitioning derives logical units of data so that they can be processed in parallel, which speeds up data processing. In Spark, the data in a Resilient Distributed Dataset (RDD) is stored in partitions.

Question: What are the main operations of RDD?

Answer: There are two main operations of an RDD: transformations and actions.

Question: Define Transformations in Spark?

Answer: Transformations are functions applied to an RDD that create another RDD. A transformation is not executed until an action takes place. Examples of transformations are map() and filter().

Question: What is the function of map()?

Answer: The map() function iterates over every element in the RDD and applies a function to it, producing a new RDD.

Question: What is the function of filter()?

Answer: The filter() function creates a new RDD by selecting the elements of the existing RDD for which the function argument returns true.
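The lazy behaviour of transformations can be imitated with Python's built-in map() and filter(), which also do no work until their results are consumed. This is only an analogy in plain Python, not Spark code; the helper function, the call log, and the sample numbers are assumptions for the sketch.

```python
calls = []  # records which elements the mapping function has actually processed


def double(x):
    calls.append(x)
    return x * 2


data = [22, 24, 46, 81, 101]

# "Transformations": these two lines only describe the computation.
doubled = map(double, data)
large = filter(lambda value: value > 90, doubled)

calls_before_action = list(calls)  # still empty, nothing has run yet

# "Action": consuming the result finally triggers the work.
result = list(large)

print("processed before action:", calls_before_action)
print("result:", result)
print("processed after action:", calls)
```

In Spark the same pattern lets the engine see the whole chain of transformations before running anything, which is what allows it to plan the work efficiently.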

Question: What are the Actions in Spark?

Answer: Actions in Spark bring data from an RDD back to the local machine. They are RDD operations that return non-RDD values. Actions in Spark include functions such as reduce() and take().

Question: What is the difference between the reduce() and take() functions?

Answer: reduce() is an action that applies a function to the elements repeatedly until only one value is left, while take(n) is an action that brings the first n values from an RDD to the local node.
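The difference is easy to see on an ordinary list. The sketch below uses Python's standard functools.reduce and itertools.islice as stand-ins for the two actions; it runs locally without Spark, and the sample numbers are arbitrary.

```python
from functools import reduce
from itertools import islice

data = [22, 24, 46, 81, 101]

# reduce-style action: combine elements pairwise until one value remains
total = reduce(lambda a, b: a + b, data)

# take-style action: return only the first n elements
first_three = list(islice(data, 3))

print(total)
print(first_three)
```

The first call always collapses the whole collection into a single value, while the second returns a small list and never needs to look beyond the first n elements.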

Question: What are the similarities and differences between coalesce() and repartition() in Spark?

Answer: The similarity is that both coalesce() and repartition() are used to modify the number of partitions in an RDD. The difference is that repartition() is built on top of coalesce(): it calls coalesce() with shuffling enabled. As a result, repartition() produces a specific number of partitions with the whole data set redistributed across them by a hash partitioner, whereas coalesce() without a shuffle only merges existing partitions.
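The contrast can be modelled in plain Python. The two functions below are simplified, hypothetical stand-ins that borrow the Spark names for readability; real Spark also considers data locality and uses its own partitioner.

```python
def coalesce(partitions, n):
    """Merge whole partitions into n groups; no record is moved on its own."""
    merged = [[] for _ in range(n)]
    for i, part in enumerate(partitions):
        merged[i % n].extend(part)
    return merged


def repartition(partitions, n):
    """Full shuffle: every record is reassigned by hashing it."""
    shuffled = [[] for _ in range(n)]
    for part in partitions:
        for record in part:
            shuffled[hash(record) % n].append(record)
    return shuffled


parts = [[0, 1, 2, 3], [4, 5], [6], [7, 8, 9]]
merged = coalesce(parts, 2)       # existing partitions are glued together
balanced = repartition(parts, 2)  # records are spread out individually
```

In this model, merging keeps records next to their original neighbours and is cheap, while the shuffle touches every record but gives an even spread.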

Question: Define YARN in Spark?

Answer: YARN in Spark acts as a central resource management platform that helps in delivering scalable operations throughout the cluster and performs the function of a distributed container manager.

Question: Define PageRank in Spark? Give an example?

Answer: PageRank in Spark is an algorithm in GraphX that measures the importance of each vertex in a graph. For example, if a person on Facebook, Instagram, or any other social media platform has a huge number of followers, then his or her page will be ranked higher.
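The follower example can be made concrete with a small plain-Python sketch of the textbook PageRank iteration. This is not the GraphX implementation; the function name, the damping factor of 0.85, the iteration count, and the sample graph are all assumptions made for illustration, and the sketch assumes every vertex appears as a key in the input.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank; links maps each vertex to the vertices it points to."""
    vertices = list(links)
    rank = {v: 1.0 / len(vertices) for v in vertices}
    for _ in range(iterations):
        # Every vertex starts each round with a small base score.
        new_rank = {v: (1.0 - damping) / len(vertices) for v in vertices}
        for src, targets in links.items():
            if not targets:
                continue
            # A vertex shares its current rank equally among its targets.
            share = damping * rank[src] / len(targets)
            for dst in targets:
                new_rank[dst] += share
        rank = new_rank
    return rank


# "alice follows carol" is modelled as an edge from alice to carol.
follows = {"alice": ["carol"], "bob": ["carol"], "carol": ["alice"]}
ranks = pagerank(follows)
top = max(ranks, key=ranks.get)
```

Here carol is followed by two accounts and ends up with the highest rank, while bob, whom nobody follows, keeps only the base score.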

Question: What is Sliding Window in Spark? Give an example?

Answer: A sliding window in Spark Streaming specifies which batches of the stream are processed together. For example, you can set the batch interval and the number of batches that each window operation should process.
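A rough plain-Python model of windowing over batches is shown below. The function and its parameters (window_length and slide_interval, both counted in batches) are hypothetical names chosen for the sketch, not the Spark Streaming API.

```python
def sliding_windows(batches, window_length, slide_interval):
    """Group consecutive batches into overlapping windows.

    window_length: number of batches in each window.
    slide_interval: number of batches the window advances each step.
    """
    windows = []
    for end in range(window_length, len(batches) + 1, slide_interval):
        window = batches[end - window_length:end]
        # Flatten the batches in this window into one list of records.
        windows.append([record for batch in window for record in batch])
    return windows


# Five micro-batches arriving one after another.
batches = [[1], [2, 3], [4], [5, 6], [7]]
windows = sliding_windows(batches, window_length=3, slide_interval=2)
```

With a window of three batches that slides by two, the third batch is part of both windows, which is the overlap that gives a sliding window its name.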

Question: What are the benefits of Sliding Window operations?

Answer: Sliding Window operations have the following benefits:

Question: Define RDD Lineage?

Answer: RDD lineage is the mechanism Spark uses to reconstruct lost data partitions, because Spark does not replicate data in memory. Each RDD remembers how it was built from other datasets, so a lost partition can be recomputed.
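The recovery idea can be sketched in plain Python. DerivedDataset is a hypothetical toy class, not a Spark type: it keeps a reference to its parent data and the function used to derive it, so a lost partition can be rebuilt rather than restored from a copy.

```python
class DerivedDataset:
    """Toy model of lineage: remembers its parent and how it was derived."""

    def __init__(self, parent_partitions, fn):
        self.parent_partitions = parent_partitions  # where the data came from
        self.fn = fn                                # how it was built
        self._computed = {}                         # in-memory results

    def partition(self, index):
        # Recompute from the parent if the result is not in memory.
        if index not in self._computed:
            parent = self.parent_partitions[index]
            self._computed[index] = [self.fn(x) for x in parent]
        return self._computed[index]

    def lose(self, index):
        # Simulate losing one partition, for example when a node fails.
        self._computed.pop(index, None)


squares = DerivedDataset([[1, 2], [3, 4]], lambda x: x * x)
before = squares.partition(1)
squares.lose(1)
after = squares.partition(1)  # rebuilt from lineage, not from a replica
```

Only the lost partition is recomputed; the other partition is never touched.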

Question: What is a Spark Driver?

Answer: The Spark Driver is the program that runs on the master node and declares the transformations and actions on RDDs. It creates the SparkContext, connected to a given Spark Master, and delivers the RDD graphs to the master, where the cluster manager runs.

Question: What kinds of file systems are supported by Spark?

Answer: Spark supports three kinds of file systems, which include the following:

Question: Define Spark Executor?

Answer: When the SparkContext connects to the cluster manager, it acquires executors on nodes in the cluster. A Spark Executor is a process that runs computations and stores data on a worker node.

Question: Can we run Apache Spark on Apache Mesos?

Answer: Yes, we can run Apache Spark on hardware clusters that are managed by Apache Mesos.

Question: Can we trigger automated clean-ups in Spark?

Answer: Yes, we can trigger automated clean-ups in Spark to handle the accumulated metadata. This is done by setting the parameter “spark.cleaner.ttl”.

Question: What is another method, besides “spark.cleaner.ttl”, to trigger automated clean-ups in Spark?

Answer: Besides “spark.cleaner.ttl”, another way to trigger automated clean-ups in Spark is to divide long-running jobs into different batches and write the intermediary results to disk.

Question: What is the role of Akka in Spark?

Answer: Akka in Spark is used for scheduling. The workers and the master use it to send and receive messages: after registering, the workers request tasks from the master, and the master assigns them.

Question: Define SchemaRDD in Apache Spark RDD?

Answer: A SchemaRDD is an RDD made up of row objects (wrappers around basic string or integer arrays) together with schema information about the type of data in each column. It has since been renamed to DataFrame.

Question: Why is SchemaRDD designed?

Answer: SchemaRDD was designed to make it easier for developers to debug code and run unit tests on the Spark SQL core module.

Question: What is the basic difference between Spark SQL, HQL, and SQL?

Answer: Spark SQL supports both SQL and Hive Query Language (HQL) without any change in syntax. We can join SQL tables and HQL tables with Spark SQL.

Conclusion

Our voyage through the world of Apache Spark interview questions has been nothing short of insightful. As you set out on your professional journey, equipped with the knowledge gained from this guide, Apache Spark is well placed to serve as a catalyst for your career.

By digging into the depths of Apache Spark’s architecture, programming paradigm, and optimization approaches, you’ve provided yourself with the tools to traverse the hurdles of Spark interviews. Apache Spark’s agility in managing large datasets and providing seamless data processing across several sources highlights its importance in the ever-changing environment of big data technology.

In the competitive environment of data engineering and analytics, a thorough grasp of Apache Spark is more than an advantage; it is a defining element. As you prepare for interviews and professional interactions, remember that Apache Spark is more than just a framework; it is a dynamic force pushing innovation in the field of distributed computing.

So, whether you’re a seasoned professional looking to expand your knowledge or a beginner to the world of Spark interviews, the knowledge you get from our investigation will certainly move you forward. Here’s to understanding the Apache Spark interview landscape and seizing the opportunity it presents on your professional journey.

That completes the list of the 50 Top Spark interview questions. Going through these questions will allow you to check your Spark knowledge as well as help prepare for an upcoming Apache Spark interview.
